Elements of Statistical Learning

Solutions to the Exercises

Yu Zhang, sjtuzy@gmail.com

November 25, 2009

Exercise 2.6 Consider a regression problem with inputs x

i

and outputs y

i

,

and a parameterized model f

θ

(x) to be fit by least squares. Show that if

there are observations with tied or identical values of x, then the fit can be

obtained from a reduced weighted least squares problem.

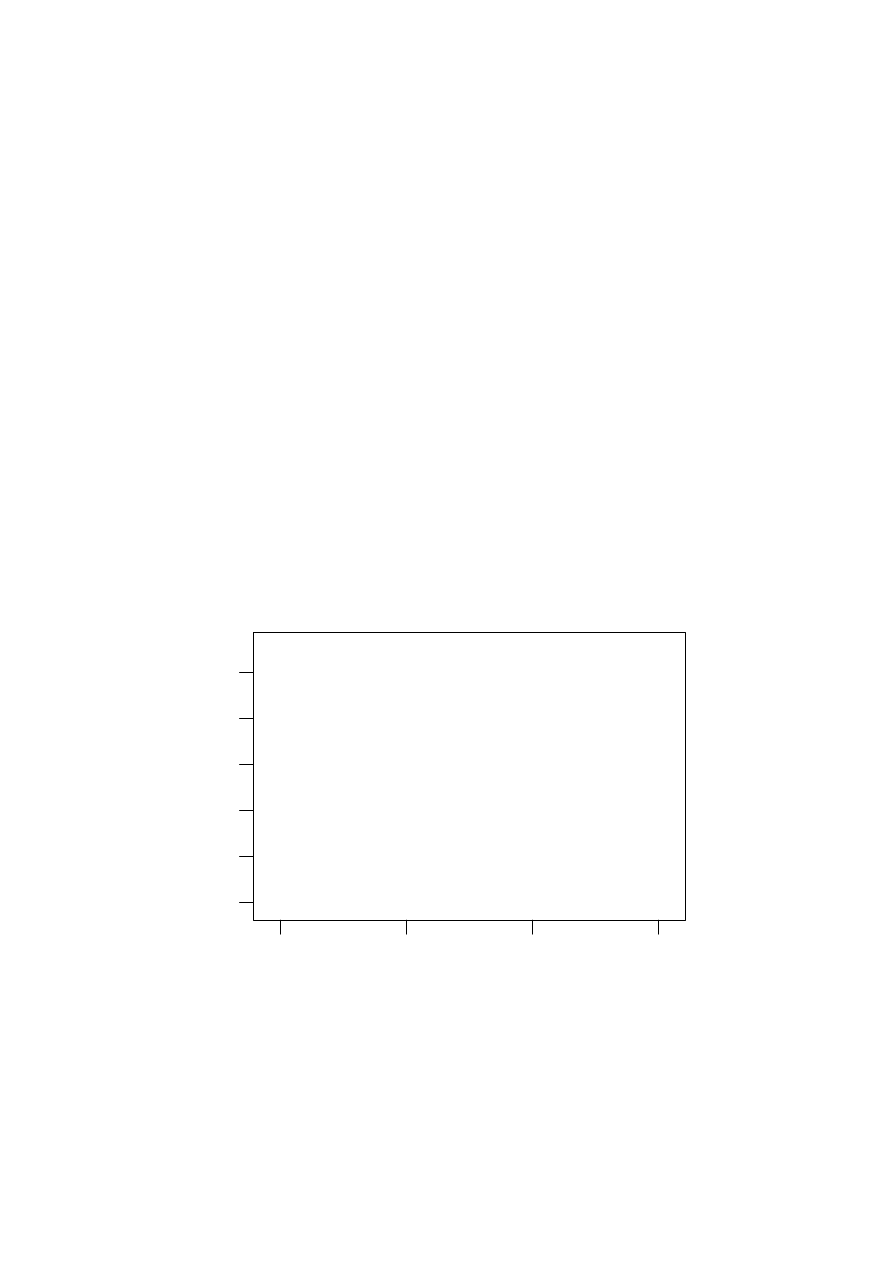

Proof For known heteroskedasticity (e.g., grouped data with known group

sizes), use weighted least squares (WLS) to obtain efficient unbiased esti-

mates. Fig.1 explains “Observations with tied or identical values of x”. In

•

•

•

•

•

•

•

•

•

•

•

•

10

15

20

25

2

3

4

5

6

7

x

y

WLS.01

Figure 1: Observations with tied

the textbook, section 2.7.1 also explain this problem.

1

If there are multiple observation pairs x

i

, y

il

, l = 1, 2 . . . , N

i

at each value

of x

i

, the risk is limited as follows:

argmin

θ

∑

i

N

i

∑

l=1

(f

θ

(x

i

)

− y

il

)

2

=

argmin

θ

∑

i

(N

i

f

θ

(x

i

)

2

− 2

N

i

∑

l

f

θ

(x

i

)y

il

+

N

i

∑

l

y

2

il

)

=

argmin

θ

∑

i

N

i

{(f

θ

(x

i

)

− y

i

)

2

+ Constant

}

=

argmin

θ

∑

i

N

i

{(f

θ

(x

i

)

− y

i

)

2

}

(1)

which is a weighted least squares problem.

Exercise 3.19 Show that

|| ˆβ

ridge

|| increases as its tuning parameter λ → 0.

Does the same property hold for the lasso and partial least squares esti-

mates? For the latter, consider the “tuning parameter” to be the successive

steps in the algorithm.

Proof Let λ

2

< λ

1

and β

1

, β

2

be the optimal solution. We denote the loss

function as follows

f

λ

(β) =

||Y − Xβ|| + λ||β||

2

2

Then we have

f

λ

1

(β

2

) + f

λ

2

(β

1

)

≥ f

λ

2

(β

2

) + f

λ

1

(β

1

)

λ

1

||β

2

||

2

2

+ λ

2

||β

1

||

2

2

≥ λ

2

||β

2

||

2

2

+ λ

1

||β

1

||

2

(λ

1

− λ

2

)

||β

2

||

2

2

≥ (λ

1

− λ

2

)

||β

1

||

2

2

||β

2

||

2

2

≥ ||β

1

||

2

2

So

|| ˆβ

ridge

|| increases as its tuning parameter λ → 0.

Similarly, in lasso case,

|| ˆβ||

1

increase as λ

→ 0. But this can’t guarantee

the l

2

-norm increase. Fig.2 is a direct view of this property.

In partial least square case, it can be shown that the PLS algorithm

is equivalent to the conjugate gradient method. This is a procedure that

iteratively computes approximate solutions of

|βA = b| by minimizing the

quadratic function

1

2

β

⊤

Aβ

− b

⊤

β

along directions that are

|A|-orthogonal (Ex.3.18). The approximate solu-

tion obtained after m steps is equal to the PLS estimator obtained after p

iterations. The canonical algorithm can be written as follows:

2

Figure 2: Lasso

1. Initialization β

0

= 0, d

0

= r

0

= b

− Aβ

0

= b

2. a

i

=

d

H

i

r

i

d

H

i

Ad

i

3. β

i+1

= β

i

+ a

i

d

i

4. r

i+1

= b

− Aβ

i+1

(= r

i

− a

i

Ad

i

)

5. b

i

=

−

r

H

i+1

Ad

i

d

H

i

Ad

i

6. d

i+1

= r

i+1

+ b

i

d

i

The squared norm of β

j+1

can be written as

||β

j+1

||

2

=

||x

j

||

2

+ 2a

2

j

||d

j

||

2

+ a

j

d

H

j

β

j

(2)

We need only shown that a

j

d

H

j

β

j

> 0.

∵ r

H

j+1

d

j+1

= r

H

j+1

r

j+1

+ b

j

r

H

j+1

d

j

and

⟨r

j+1

, d

j

⟩ = 0

(3)

∴ a

j

=

d

H

j

r

j

d

H

j

Ad

j

=

||r

j

||

2

||d

j

||

2

A

> 0(A is positive definite.)

(4)

∵ β

j

=

j

∑

i=0

a

i

d

i

(5)

∴ d

H

j

β

j

=

j

∑

i=0

a

i

d

H

j

d

i

(6)

3

Now we need to show that d

H

j

d

i

> 0, i

̸= j. By Step 6, we have d

j

=

r

j

+

∑

j

−1

i=0

(

∏

j

−1

k=i

b

k

)r

i

.

∵ ⟨r

i

, r

j

⟩ = 0, i ̸= j

(7)

∴ b

k

> 0

→ d

H

j

d

i

> 0

(8)

∵ Ad

i

= a

−1

i

(r

i

− r

i+1

)

(9)

∴ b

j

=

−

r

H

j+1

(r

j

− r

j+1

)

a

j

||d

j

||

2

A

=

||r

j+1

||

2

a

j

||d

j

||

2

A

> 0

(10)

So in PLS case, the

||β||

2

2

increase with m.

Exercise 4.1 Show how to solve the generalized eigenvalue problem max a

⊤

Ba

subject to a

⊤

W a = 1 by transforming to a standard eigenvalue problem.

Proof Hence W is the the common covariance matrix, we have W is semi-

positive definite. WLOG W is positive definite, we have

W = P

2

(11)

Let b = P a, then the problem is

a

⊤

Ba = b

⊤

P

−1

BP

−1

b = b

⊤

B

∗

b

(12)

subject to a

⊤

W a = 1 = b

⊤

B

∗

b = 1. Now the problem transform to a

standard eigenvalue problem.

Exercise 4.2 Suppose we have features x

∈ R

p

, a two-class response, with

class sizes N

1

, N

2

, and the target coded as

−N/N

1

, N/N

2

.

(d) Show that this result holds for any (distinct) coding of the two classes.

W.L.O.G any distinct coding y

′

1

, y

′

2

. Let ˆ

β = (β, β

0

)

⊤

. Compute the

partial deviation of the RSS( ˆ

β) we have

∂RSS( ˆ

β)

∂β

=

−2

N

∑

i=1

(y

i

− β

0

− β

⊤

x

i

)x

⊤

i

= 0

(13)

∂RSS( ˆ

β)

∂β

0

=

−2

N

∑

i=1

(y

i

− β

0

− β

⊤

x

i

) = 0

(14)

It yields that

β

⊤

∑

i

(x

i

− ¯

x

i

)x

⊤

i

=

∑

i

y

i

x

i

(15)

β

0

=

1

N

∑

i

(y

i

− β

⊤

x

i

)

(16)

4

Than we get

β

⊤

∑

i

(x

i

− ¯

x

i

)x

⊤

i

=

∑

i

(y

i

−

1

N

∑

i

y

i

)x

⊤

i

(17)

=

N

1

y

′

1

ˆ

µ

1

+ N

2

y

′

2

ˆ

µ

2

−

N

1

y

′

1

+ N

2

y

′

2

N

1

+ N

2

(N

1

ˆ

µ

1

+ N

2

ˆ

µ

2

)

(18)

=

1

N

(N

1

N

2

y

′

2

(ˆ

µ

2

− ˆµ

1

)

− N

1

N

2

y

′

1

(ˆ

µ

2

− ˆµ

1

))

(19)

=

N

1

N

2

N

(y

′

2

− y

′

1

)(ˆ

µ

2

− ˆµ

1

)

(20)

Hence the proof in (c) still holds.

Exercise 4.3 Suppose we transform the original predictors X to ˆ

Y via

linear regression. In detail, let ˆ

Y = X(X

⊤

X)

−1

X

⊤

Y = X ˆ

B, where Y is

the indicator response matrix. Similarly for any input x

∈ R

p

, we get a

transformed vector ˆ

y = ˆ

B

⊤

x

∈ R

K

. Show that LDA using ˆ

Y is identical to

LDA in the original space.

Proof Prof.Zhang had given the solution about this problem, but only tow

student notice this problem is tricky.

Here I give the main part of the

solution.

• First y

1

, . . . , y

K

must independent, then we have r(Σ

y

) = K.

• Assume that ˆ

B

p

∗K

, r( ˆ

B) = K < p, r(Σ

x

) = p. Note that

r( ˆ

B( ˆ

B

⊤

Σ

x

ˆ

B)

−1

ˆ

B

⊤

) < r( ˆ

B) < r(Σ

x

)

. Since ˆ

B( ˆ

B

⊤

Σ

x

ˆ

B)

−1

ˆ

B

⊤

̸= Σ

−1

x

when dim(Y ) < dim(X).

• This problem are equal to prove that ˆ

B( ˆ

B

⊤

Σ

x

ˆ

B)

−1

ˆ

B

⊤

(µ

x

k

− µ

x

l

) =

Σ

−1

x

(µ

x

k

− µ

x

l

). Let P = Σ

x

ˆ

B( ˆ

B

⊤

Σ

x

ˆ

B)

−1

ˆ

B

⊤

, we have

P

2

= P

(21)

Hence P is projection matrix. Note that Y =

{y

1

, y

2

. . . , y

K

} is indi-

5

cator response matrix, we have

µ

x

k

=

1

N

k

X

⊤

y

k

Σ

x

=

K

∑

k=1

∑

g

i

=k

(x

i

− µ

k

i

)(x

i

− µ

k

i

)

⊤

/(N

− K)

=

1

N

− K

(

K

∑

k=1

∑

g

i

=k

x

i

x

⊤

i

−

K

∑

k=1

N

k

µ

x

k

(µ

x

k

)

⊤

)

=

1

N

− K

(X

⊤

X

−

K

∑

k=1

X

⊤

y

k

y

⊤

k

)

=

1

N

− K

(X

⊤

X

− X

⊤

Y Y

⊤

X)

Note that

P (N

1

µ

x

1

, N

2

µ

x

2

. . . , N

K

µ

x

K

) = P X

⊤

Y

(22)

So we need only to shown that P X

⊤

Y = X

⊤

Y .

P X

⊤

Y = Σ

x

B(B

⊤

Σ

x

B)B

⊤

X

⊤

Y

By the definition of B, we have

B

⊤

X

⊤

Y

=

Y

⊤

X(X

⊤

X)

−1

X

⊤

Y

Σ

x

B

=

1

N

− K

((X

⊤

X)(X

⊤

X)

−1

X

⊤

Y

− X

⊤

Y Y

⊤

X(X

⊤

X)

−1

X

⊤

Y )

=

1

N

− K

X

⊤

Y (I

− Y

⊤

X(X

⊤

X)

−1

X

⊤

Y )

(B

⊤

Σ

x

B)

−1

=

(N

− K)(Y

⊤

X(X

⊤

X)

−1

(X

⊤

X

− X

⊤

Y Y

⊤

X)(X

⊤

X)

−1

X

⊤

Y )

−1

=

(N

− K)(Y

⊤

X(X

⊤

X)

−1

X

⊤

Y [I

− Y

⊤

X(X

⊤

X)

−1

X

⊤

Y ])

−1

Let Q = Y

⊤

X(X

⊤

X)

−1

X

⊤

Y we have

B

⊤

X

⊤

Y

=

Q

(23)

Σ

x

B

=

1

N

− K

X

⊤

Y (I

− Q)

(24)

(B

⊤

Σ

x

B)

−1

=

(N

− K)(Q(I − Q))

−1

(25)

Hence Q(I

− Q) is invertible matrix, we have

K = r(Q(Q

− I)) ≤ r(Q) ⇒ r(Q) = K

(26)

So Q is invertible matrix, then it yields

(B

⊤

Σ

x

B)

−1

=

(N

− K)(I − Q)

−1

Q

−1

(27)

6

Combine with (16),(17),(20), we have

P X

⊤

Y

=

1

N

− K

X

⊤

Y (I

− Q)(N − K)(I − Q)

−1

Q

−1

Q (28)

=

X

⊤

Y

(29)

Since we have ˆ

B( ˆ

B

⊤

Σ

x

ˆ

B)

−1

ˆ

B

⊤

(µ

x

k

− µ

x

l

) = Σ

−1

x

(µ

x

k

− µ

x

l

).

7

Wyszukiwarka

Podobne podstrony:

więcej podobnych podstron