Differential Equations for Reliability, Maintainability, and Availability

Harry A. Watson, Jr.

September 1, 2005

Abstract

This is an electronic textbook on Differential Equations

for Reliability, Maintainability, and Availability.

It is written to fill the need of an introductory book

which can be accessed on-line, stored in magnetic media

or on CDs (Compact Disks), and cross-referenced electronically.

This entire book was done in the (public domain) typesetting language LaTeX. The equations, figures, pictures, and tables were typeset in their respective LaTeX environments.

Many thanks are due to those who assisted in the

proofreading and problem sets: Eric Gentile (electrical engineer

and physicist) and Ron Shortt (physicist).

Because computer

software is rapidly replacing rote manipulations and

because modern numerical techniques allow qualitative

real-time graphics, the traditional introductory course

in ordinary differential equations is doomed to oblivion.

However, many textbooks in physics, chemistry, engineering,

medicine, biology, and ecology mention techniques, terms,

and processes covered nowhere else. Advanced courses on

dynamical systems, algebraic topology, and functional analysis

never mention such items as “exact equations,” “integrating

factors,” or the Clairaut

equation. Advanced modern algebra treats differential forms

and tensors in a totally different manner than is done

either in physics or in engineering.

Without a reference in introductory differential equations,

one could never span the disciplines. One

needs practice in modern transform methods in general and

in the Laplace transform in particular.

This practice should be done

at the introductory level and not in

functional analysis, where such topics are introduced

in an abstract manner with many lemmata and with no

correlation to physical reality.

Finally, in this book, we end each proof with the

hypocycloid of four cusps—the diamond symbol (♦).


Limit of Liability/Disclaimer of Warranty:

While every effort has been made to ensure the correctness of

this material, the author makes no warranty, expressed or

implied, or assumes any legal liability or responsibility

for the accuracy, completeness, or usefulness of any

information, process, or procedure disclosed herein. In no

event will the author be liable for any loss of profit or any

other commercial damage, including but not limited to

special, incidental, consequential, or other damages.

Copyright © 1997, Harry A. Watson, Jr. This book may be used by NWAD (Naval Warfare Assessment Division) and its sponsors freely without payment of any royalty and any part may be copied freely, except that no alteration is allowed when the copyright symbol (©) is displayed.

Contents

1 Introduction
1.1 First Encounters
1.2 Basic Terminology
1.3 Solutions
1.4 Computers and Differential Equations
1.5 Euler’s Method
1.6 The equation $y' = F(x)$
1.7 Existence theorems
1.8 Systems of Equations
1.9 The General Solution
1.10 The Primitive
1.11 Summary

2 First Order, First Degree Equations
2.1 Differential Form Versus Standard Form
2.2 Exact Equations
2.3 Separable Variables
2.4 First Order Homogeneous Equations
2.5 A Theorem on Exactness
2.6 About Integrating Factors
2.7 The First Order Linear Differential Equation
2.8 Other Methods
2.9 Summary

3 The Laplace Transform
3.1 Laplace Transform Preliminaries
3.2 Basic Theorems
3.3 The Inverse Transform
3.4 Transforms and Differential Equations
3.5 Partial Fractions
3.6 Sufficient Conditions
3.7 Convolution
3.8 Useful Functions and Functionals
3.9 Second Order Differential Equations
3.10 Systems of Differential Equations
3.11 Heaviside Expansion Formula
3.12 Table of Laplace Transform Theorems
3.13 Table of Laplace Transforms
3.14 Doing Laplace Transforms
3.15 Summary

List of Figures

1.1 Inverted Exponential
1.2 Isoclines
1.3 Euler’s Method
1.4 Simple Difference Method
1.5 Closed Rectangle
2.1 Connected Set
2.2 Level Curves
2.3 A Closed Path
3.1 Transform Pairs
3.2 The Heaviside Function
3.3 The Dirac Delta Function
3.4 Heaviside Function
3.5 Ramp Function
3.6 Shifted Heaviside
3.7 Linearly Transformed Heaviside
3.8 Linearly Transformed Ramp
3.9 Sawtooth Function

List of Tables

1.1 Euler’s Method Calculations
1.2 An Approximation to $e^x$
2.1 Table of Symbols
2.2 Table of Exact Differentials
2.3 Table of Common Abbreviations
3.1 Named Functions
3.2 Laplace Transform Pairs
3.3 Theorem Table

Chapter 1

Introduction

1.1 First Encounters

Differential equations are of fundamental importance

in science and engineering because many physical laws

and relations are described mathematically by such

equations.

Roughly speaking, by an ordinary differential equation we mean a relation between an independent variable $x$, a function $y$ of $x$, and one or more of the derivatives $y', y'', \ldots, y^{(n)}$ of $y$ with respect to $x$. For example,

$$y' = 1 - y, \tag{1.1}$$

$$y'' + 4y = 3\sin x, \tag{1.2}$$

$$y'' + 2x(y')^5 = xy \tag{1.3}$$

are ordinary differential equations.

In calculus we learn how to find the successive (that is, higher order) derivatives of a given function $y(x)$. Notice that we use parentheses to distinguish $y^{(n)}$ from $y^n$, the $n$th power of $y$. If the function $y$ depends on two or more independent variables, say $x_1, \ldots, x_m$, then the derivatives are partial derivatives and the equation is called a partial differential equation.

5

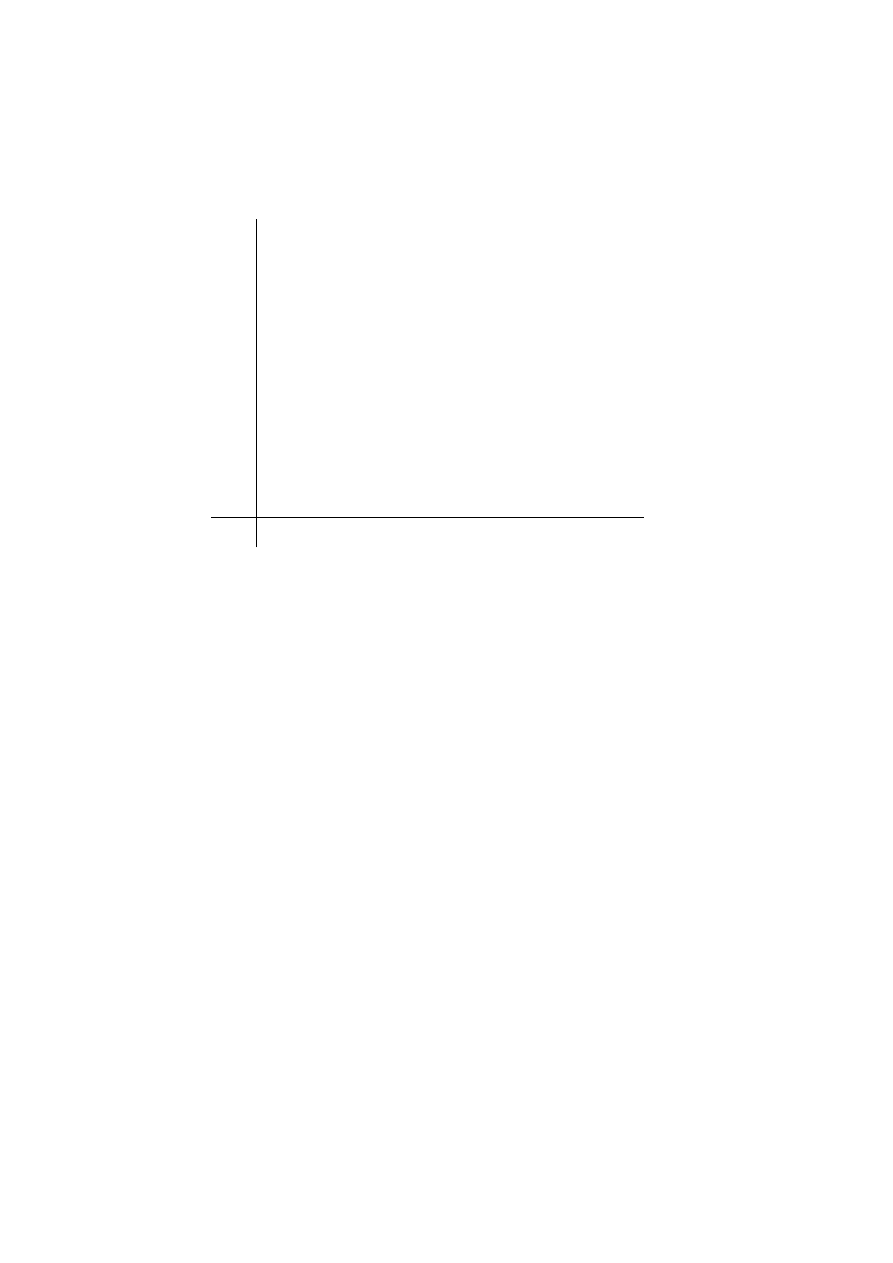

-

6

y

x

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

·····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

····

······

········

············

············································

·····················································································································································································

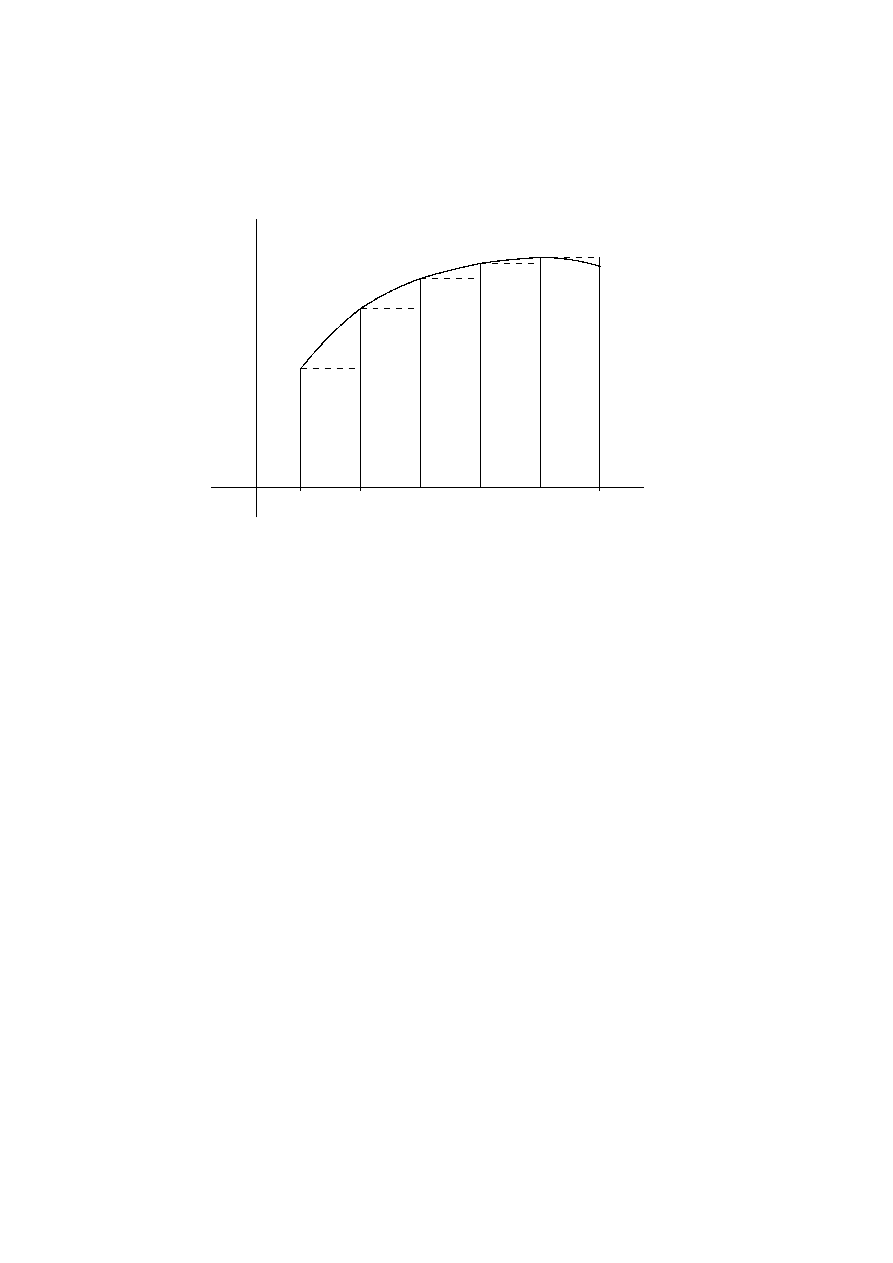

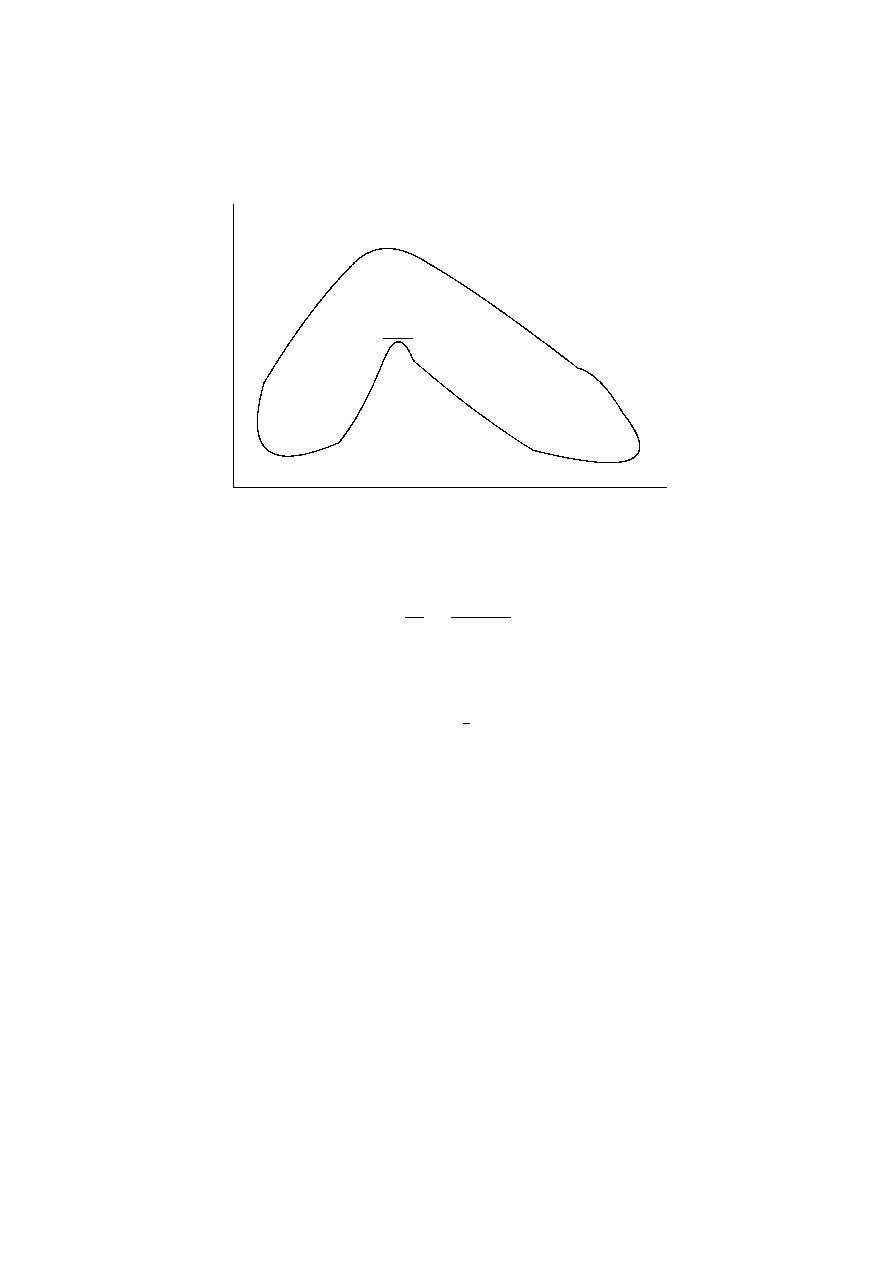

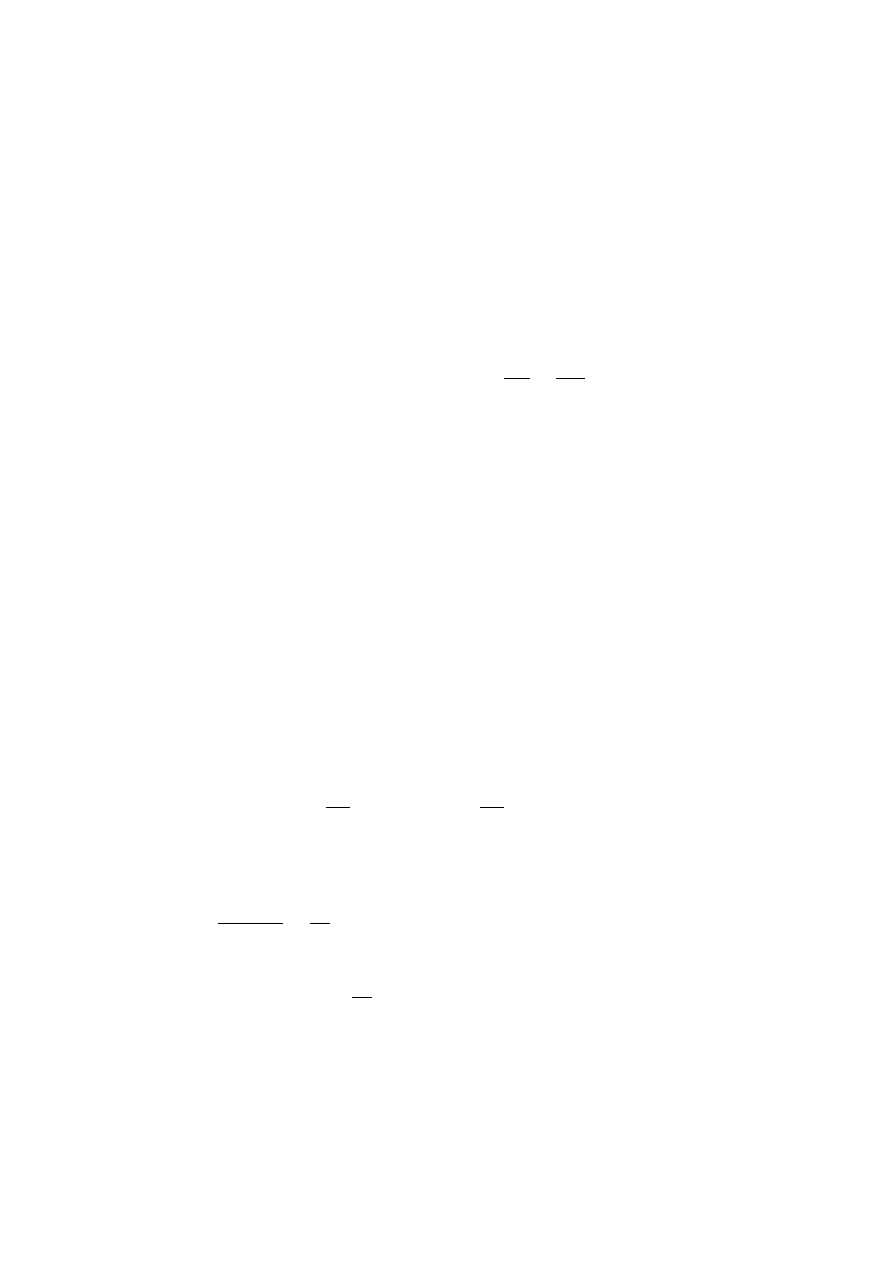

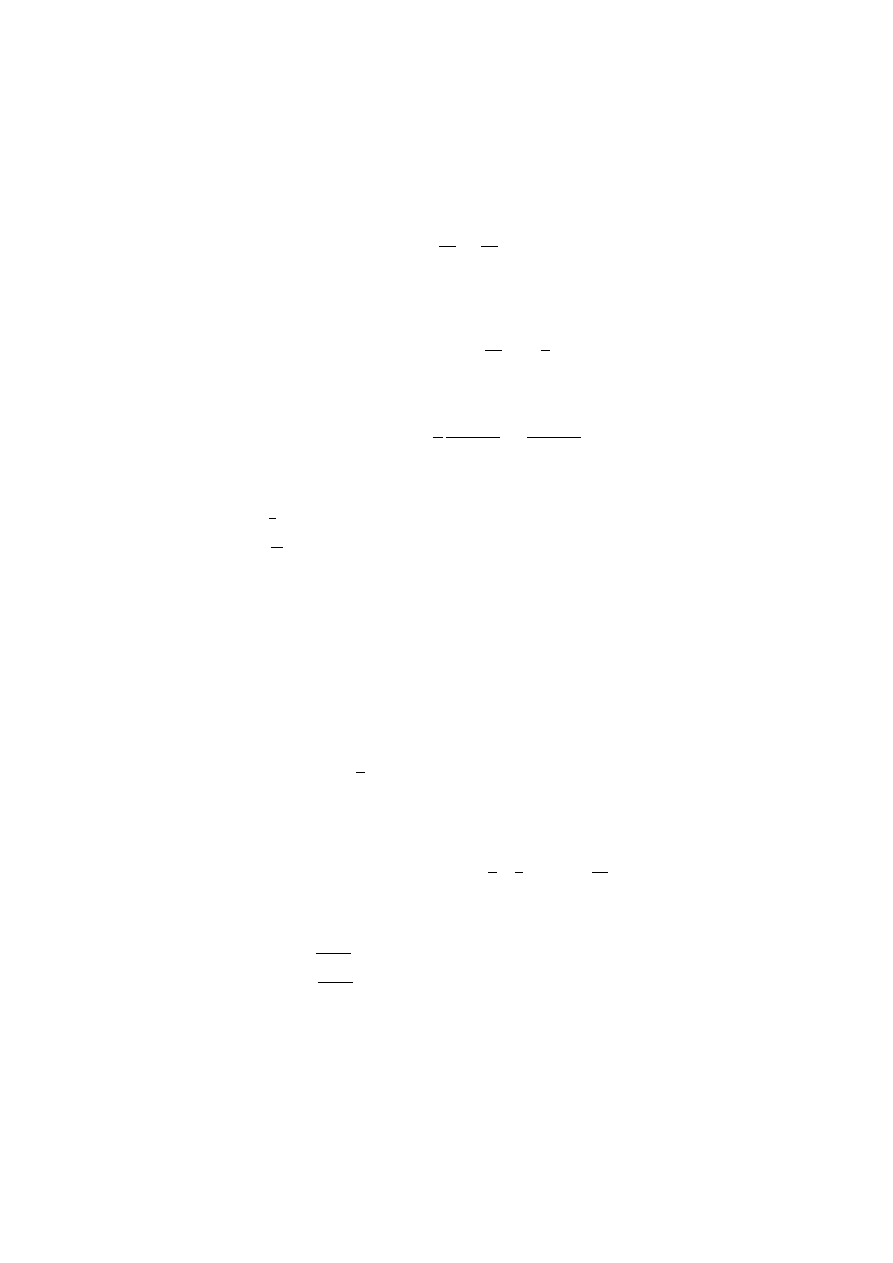

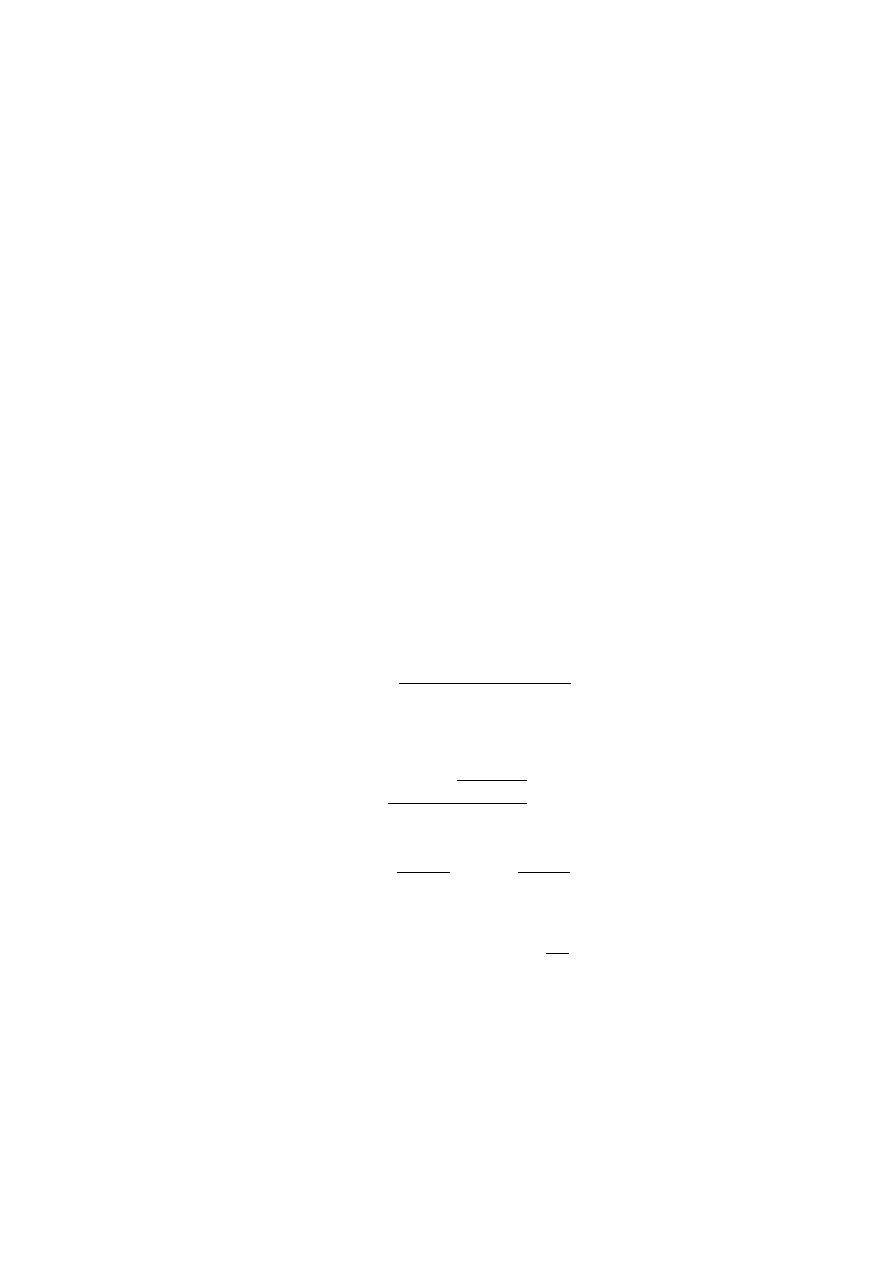

Figure 1.1:

The Curve y = 1 − e

−x

Definition 1 A differential equation is an equation which involves derivatives of a dependent variable with respect to one or more independent variables.

As an example of a differential equation, if $y = 1 - e^{-x}$, then

$$y' = e^{-x} = 1 - y; \tag{1.4}$$

thus for this function the relation (1.1) is satisfied. We say that $y = 1 - e^{-x}$ is a solution of Equation (1.1). However, this is not the only solution, for $y = 1 - 2e^{-x}$ also satisfies Equation (1.1):

$$y' = 2e^{-x} = 1 - y.$$

We note that the function $y = 1 - e^{-x}$ is sometimes called the “inverted exponential.” Curves of this type are seen in biology where a population is limited by some factor, e.g., the food supply, and in reliability, where one is concerned with component failures.
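The claim that both functions satisfy Equation (1.1) can also be checked numerically. The following Python sketch is only an illustration: the helper name `residual`, the step size `h`, and the sample points are assumptions of this example, and the derivative is estimated by a central difference rather than computed exactly.

```python
import math

def residual(y, x, h=1e-5):
    """Return y'(x) - (1 - y(x)), with y' estimated by a central difference."""
    dy = (y(x + h) - y(x - h)) / (2 * h)
    return dy - (1 - y(x))

def inverted_exponential(x):
    # y = 1 - e^(-x), the solution shown in Figure 1.1
    return 1 - math.exp(-x)

def second_solution(x):
    # y = 1 - 2e^(-x), the other solution mentioned in the text
    return 1 - 2 * math.exp(-x)

# Both residuals should be close to zero at every sample point.
for x in (0.0, 0.5, 1.0, 2.0):
    print(x, residual(inverted_exponential, x), residual(second_solution, x))
```

The residuals are not exactly zero because the difference quotient only approximates the derivative; they should shrink toward round-off level for a reasonable choice of `h`.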

The first fundamental problem is how to determine all possible solutions of a given differential equation. Even for the simplest example of a differential equation

$$\frac{dy}{dx} = F(x)$$

or, equivalently,

$$y' = F(x),$$

where $F$ is an explicit function of $x$ alone, this may not be easy. In fact, even extensive tables of integrals and symbolic mathematical software cannot determine every such solution in closed form. To solve this equation, one must find a function $y = f(x)$ whose derivative is the given function $F(x)$. The solution, $y$, is the so-called antiderivative or indefinite integral of $F(x)$.

There is a second fundamental problem.

For many differential equations it is

very difficult to obtain explicit formulas for all the

solutions. However, a general existence theorem guarantees

that there are solutions; in fact, infinitely many. The problem

is to determine properties of the solutions,

or of some of the solutions, from the differential equation

itself. Many properties can be found

without explicit formulas for the solutions;

we can, in fact, obtain numerical values for solutions to any

accuracy desired. Accordingly, we are led to regard a

differential equation itself as a sort of explicit formula

describing a certain collection of functions. For example,

we can show that all solutions of Equation (1.2) are given by

$$y = \sin x + c_1 \cos 2x + c_2 \sin 2x,$$

where $c_1$ and $c_2$ are arbitrary constants. Equation (1.2) itself, $y'' + 4y = 3\sin x$, is another way of describing all these functions.
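As a quick sanity check, the family $y = \sin x + c_1 \cos 2x + c_2 \sin 2x$ can be substituted into Equation (1.2) in a few lines of Python. This is a sketch only: the function names and the sample values of $c_1$, $c_2$, and $x$ are illustrative assumptions, and the second derivative is written out by hand rather than derived by the program.

```python
import math

def y(x, c1, c2):
    return math.sin(x) + c1 * math.cos(2 * x) + c2 * math.sin(2 * x)

def y_second(x, c1, c2):
    # Second derivative of y, differentiated by hand term by term.
    return -math.sin(x) - 4 * c1 * math.cos(2 * x) - 4 * c2 * math.sin(2 * x)

def residual(x, c1, c2):
    """Left side minus right side of Equation (1.2): y'' + 4y - 3 sin x."""
    return y_second(x, c1, c2) + 4 * y(x, c1, c2) - 3 * math.sin(x)

# Arbitrarily chosen constants; the residual should vanish for all of them.
for c1, c2 in ((0.0, 0.0), (1.0, -2.0), (3.0, 0.5)):
    print(c1, c2, [residual(x, c1, c2) for x in (0.0, 1.0, 2.5)])
```

This shows that every member of the family satisfies the equation; it does not by itself show that there are no other solutions.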

Historically, differential equations came into existence

at the same time as differential and integral calculus.

It is an artifact of the education system that they are studied


in colleges and universities after calculus.

A single differential equation may describe many

of the laws of nature in a unified and concise form,

due to the fact that several different functions can

each be a solution of one differential equation.

Thus, it is not surprising that most laws of physics

are in the form of differential equations.

One noteworthy example is the formulation of

Newton’s second law:

force = mass × acceleration.

Let $m$ denote the mass of a particle constrained to move along a straight line. Its motion is governed by the differential equation

$$m \frac{d^2 x}{dt^2} = F\left(\frac{dx}{dt}, x, t\right),$$

where $x$ is the distance from an origin, $t$ is time, and $F$ is the force, which depends on the velocity $dx/dt$, the position $x$, and the time $t$.

We will be studying the problem of obtaining a solution

as well as the theoretical problems associated with

existence, uniqueness, and the like.

The goal is to obtain explicit

expressions for the solutions wherever possible.

Such solutions are also referred to as

solutions in closed form.

In the course of study, we will observe that solutions

have different appearances, including, but not

limited to, infinite series. We will also try to

make qualitative statements about the

solutions, such as trajectories, graphs, asymptotes,

etc., directly from the differential equations.

It has been observed that, except for a few

special cases, there is no simple way of

solving ordinary differential equations.

If the unknown function and its derivatives

appear linearly in the differential equation,


then it is called a linear

differential equation; otherwise, it is said

to be nonlinear. Among the cases for which

simple methods of solution are possible are

the linear equations.

This is fortunate because of the frequency

with which they occur in scientific phenomena.

In fact, many of the fundamental laws of science

are formulated in terms of linear ordinary

differential equations. Consequently, we will

devote a majority of this book to such equations.

1.2 Basic Terminology

An ordinary differential equation of order $n$ is an equation of the form

$$F\left(x, y, \frac{dy}{dx}, \ldots, \frac{d^n y}{dx^n}\right) = 0. \tag{1.5}$$

The above equation involves an unknown function, $y$, and one or more of its derivatives, $y^{(k)} = d^k y/dx^k$, where $k = 1, 2, \ldots, n$. Of course, if $k = 0$, we have $y^{(0)} \equiv y$, and Equation (1.5) with no derivatives present reduces to an algebraic equation in $x$ and $y$. Moreover, $y^{(1)} \equiv y'$. (Sometimes we write $y^{(iv)}$ instead of $y''''$ or $y^{(4)}$.)

For example, observe that

$$x y'' + 3y' - 2y + x e^x = 0, \tag{1.6}$$

$$(y''')^2 - 4 y' y''' + (y'')^3 = 0 \tag{1.7}$$

are ordinary differential equations of orders 2 and 3, respectively.

If a differential equation has the form of an algebraic equation of degree $m$ in the highest derivative, then we say that the differential equation is of degree $m$. For example, Equation (1.7) is of degree 2 in its highest derivative, $y'''$, whereas Equation (1.6) is of degree 1.

In an introductory course, one generally is restricted to equations of the first degree. Moreover, the leading coefficient of the highest order derivative $y^{(n)}$ is generally 1, so that we have an expression of the form

$$y^{(n)} = \tilde{F}\left(x, y, \ldots, y^{(n-1)}\right).$$

A linear ordinary differential equation is a restriction of Equation (1.5) to the form

$$b_0(x) y^{(n)} + b_1(x) y^{(n-1)} + \cdots + b_{n-1}(x) y' + b_n(x) y = Q(x). \tag{1.8}$$

This corresponds to an algebraic equation where the coefficients are replaced by functions and the powers are replaced by derivatives, for example:

$$a_0 t^n + a_1 t^{n-1} + \cdots + a_{n-1} t + a_n = 0,$$

where $t^0 \equiv 1$ and the “driving function,” $Q(x)$, is absorbed into the coefficient $a_n$.

This phenomenon will be a recurring theme throughout

all of differential equations, operator theory,

transform calculus, and tensor analysis. Familiar,

common notions will be extended by more general,

complicated concepts. In turn, the more general

concepts will reduce to basic ideas and many of the

theorems and facts can be generalized.

Each of the Equations (1.1), (1.2), and (1.6) above is linear. In particular, we can write the coefficients for Equation (1.6) as follows:

$$b_0(x) := x, \qquad b_1(x) := 3, \qquad b_2(x) := -2, \qquad Q(x) := -x e^x.$$

Every linear differential equation is of degree 1. The converse does not hold, as one can see from Equation (1.3), which is of first degree (in its highest derivative, $y''$) and nonlinear (in its first derivative, $y'$).

The word “ordinary” implies that

there is just one independent

variable. If we have a function of two

or more independent variables, say U (x, y, z),

it is possible to have partial derivatives.

An equation such as

$$\frac{\partial^2 U}{\partial x^2} + \frac{\partial^2 U}{\partial y^2} + \frac{\partial^2 U}{\partial z^2} = 0$$

is called a partial differential equation.

In this book, with a few notable exceptions, we will be

concerned only with ordinary

differential equations. This being the case,

the word “ordinary” will generally be omitted.

1.3 Solutions

Definition 2 A function $y = f(x)$, defined on some interval $a < x < b$ (possibly infinite), is said to be a solution of the differential equation

$$F\left(x, y, y', \ldots, y^{(n)}\right) = 0 \tag{1.9}$$

if Equation (1.9) is identically satisfied whenever $y$ and its derivatives are replaced by $f(x)$ and its derivatives.

Of course, it is implied in the definition that if the differential equation defined by Equation (1.9) is of order $n$ then $f(x)$ has at least $n$ derivatives throughout the interval $(a, b)$. Moreover, whatever is valid for a general solution also holds for a particular solution.

One may observe that the function y = f (x)

is defined on an open interval,

a < x < b, and not on a closed

interval, a ≤ x ≤ b. It is generally true

that derivatives are studied on open sets whereas

continuous functions (and integrals) are studied on

closed sets.

As an example of a solution, suppose that each of $c_1$ and $c_2$ is a constant. The function

$$y = c_1 \cos 2x + c_2 \sin 2x,$$

which is related to Equation (1.2), is a solution of the differential equation

$$y'' + 4y = 0 \tag{1.10}$$

since $y'' = -4c_1 \cos 2x - 4c_2 \sin 2x$, so that

$$y'' + 4y = (-4c_1 \cos 2x - 4c_2 \sin 2x) + 4\,(c_1 \cos 2x + c_2 \sin 2x) \equiv 0.$$

Many differential equations have solutions that can be concisely written as

$$y = f(x; c_1, \ldots, c_n), \tag{1.11}$$

where each of $c_1, \ldots, c_n$ is an arbitrary constant. (Two or more of the $c$'s can assume the same value.) It is not always possible to let the $c$'s assume any real value for unrestricted values of $x$; however, for given values of the $c$'s and an admissible range of values of the independent variable, $x$, Equation (1.11) gives all of the solutions of Equation (1.9).

For example, all of the solutions of Equation (1.10) are given by

$$y = c_1 \cos 2x + c_2 \sin 2x; \tag{1.12}$$

the solution $y = \cos(2x)$ is obtained when $c_1 = 1$, $c_2 = 0$. When a function (1.11) is obtained, providing all solutions, it is called the general solution. In general, the number of arbitrary constants will equal the order $n$, as will be explained in Section 1.7. However, there may be exceptions. For example, the equation

$$(y')^2 + y^2 = 0$$

has exactly one solution, namely $y \equiv 0$.

In order to gain some experience with the preceding material, we will preview some of the material from Section 1.6. The differential equation in that section is just

$$y' = F(x), \tag{1.13}$$

where $F(x)$ is defined and continuous for $a \le x \le b$. All possible solutions of Equation (1.13) come from the integral equation

$$y = \int F(x)\,dx + c_1, \qquad a < x < b. \tag{1.14}$$

We observe that the arbitrary constant of the differential equation is simply the so-called constant of integration from the indefinite integral. This generalizes to higher order differential equations. For example, suppose that

$$y'' = 30x. \tag{1.15}$$

Integrate $y''$ twice to obtain

$$y' = 15x^2 + c_1, \qquad y = 5x^3 + c_1 x + c_2. \tag{1.16}$$

Successive integration of Equation (1.15), which is of order two, has produced exactly two arbitrary constants, $c_1$, $c_2$.
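To see how the two constants of integration in the solution $y = 5x^3 + c_1 x + c_2$ of $y'' = 30x$ might be pinned down in practice, here is a small Python sketch. It assumes, purely for illustration, that values of $y(0)$ and $y'(0)$ are prescribed; the function names and the sample values are hypothetical and do not come from the text.

```python
def general_solution(x, c1, c2):
    """General solution of y'' = 30x: y = 5x^3 + c1*x + c2."""
    return 5 * x**3 + c1 * x + c2

def constants_from_initial_values(y0, dy0):
    """Pick c1, c2 so that y(0) = y0 and y'(0) = dy0.

    Since y' = 15x^2 + c1, we have y'(0) = c1 and y(0) = c2.
    """
    return dy0, y0

def second_difference(f, x, h=1e-3):
    """Central second-difference estimate of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

# Hypothetical initial values chosen only for this example.
c1, c2 = constants_from_initial_values(y0=2.0, dy0=-1.0)

def particular(x):
    return general_solution(x, c1, c2)

print(particular(0.0))                      # reproduces the prescribed y(0)
print(second_difference(particular, 1.5))   # should be close to 30 * 1.5
```

Two conditions were needed to fix two constants, which matches the observation that an equation of order two produces exactly two arbitrary constants.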

Problems

1. Classify each of the following differential equations as to order, degree, and linearity:

(a) $y'' + 3y' + 6y = 0$,

(b) $y' + P(x)y = Q(x)$,

(c) $(y')^2 = x^3 - y$,

(d) $y'' - 2(y')^2 + xy = 0$,

(e) $(y')^2 + 9xy' - y^2 = 0$,

(f) $x^3 y'' - xy' + 5y = 2x$,

(g) $y^{(vi)} - y'' = 0$,

(h) $\sin(y'') + e^{y'} = 1$.

2. Integrate each of the following differential equations and include constants of integration as arbitrary constants to give the general solution:

(a) $y' = xe^x$,

(b) $y''' = 0$,

(c) $y'' = x$,

(d) $y^{(n)} = x$,

(e) $y' = \log x$,

(f) $y' = 1/x$.

3. Show that the function $y = f(x)$ is a solution of the given differential equation:

(a) $y = e^x$, for $y'' - y = 0$,

(b) $y = \cos 2x$, for $y^{(iv)} + 4y'' = 0$,

(c) $y = c_1 \cos 2x + c_2 \sin 2x$ (each of $c_1$ and $c_2$ is a constant), for $y'' + 4y = 0$,

(d) $y = \sin(e^x)$, for $y'' - y' + e^{2x} y = 0$.

4. Consider the differential equation $y' = 3x^2$.

(a) Verify that $y = x^3 + c_1$ is the general solution;

(b) Determine $c_1$ such that the solution curve passes through $(1, 3)$;

(c) Determine $c_1$ so that the solution satisfies the integral equation $\int_0^1 y(x)\,dx = \frac{1}{2}$.

5. Discuss how a differential equation is a generalization of an algebraic equation.

6. Write a computer program to generate the data points for Figure 1.1.

Solutions

1. Classify each of the following differential equations as to order, degree, and linearity:

(a) $y'' + 3y' + 6y = 0$: 2nd order, 1st degree, linear;

(b) $y' + P(x)y = Q(x)$: 1st order, 1st degree, linear;

(c) $(y')^2 = x^3 - y$: 1st order, 2nd degree, nonlinear;

(d) $y'' - 2(y')^2 + xy = 0$: 2nd order, 1st degree, nonlinear;

(e) $(y')^2 + 9xy' - y^2 = 0$: 1st order, 2nd degree, nonlinear;

(f) $x^3 y'' - xy' + 5y = 2x$: 2nd order, 1st degree, linear;

(g) $y^{(vi)} - y'' = 0$: 6th order, 1st degree, linear;

(h) $\sin(y'') + e^{y'} = 1$: 2nd order, unknown degree, nonlinear.

2. Integrate each of the following differential equations and include constants of integration as arbitrary constants to give the general solution:

(a) $y' = xe^x$: for all real $x$, $y = xe^x - e^x + c_1$;

(b) $y''' = 0$: for all real $x$, $y = c_1 x^2 + c_2 x + c_3$;

(c) $y'' = x$: for all real $x$, $y = \frac{1}{6} x^3 + c_1 x + c_2$;

(d) $y^{(n)} = x$: for all real $x$, $y = \frac{1}{(n+1)!} x^{n+1} + c_1 x^{n-1} + c_2 x^{n-2} + \cdots + c_n$;

(e) $y' = \log x$: for all $x > 0$, $y = x \log(x) - x + c_1$;

(f) $y' = 1/x$: for all $x \neq 0$ and $c_1 > 0$, $y = \log(c_1 |x|)$.

3. Show that the function $y = f(x)$ is a solution of the given differential equation:

(a) $y = e^x$, for $y'' - y = 0$:

$$y' = e^x, \qquad y'' = e^x, \qquad y'' - y = e^x - e^x = 0,$$

by substitution.

(b) $y = \cos 2x$, for $y^{(iv)} + 4y'' = 0$:

$$y = \cos 2x, \quad y' = -2 \sin 2x, \quad y'' = -4 \cos 2x, \quad y''' = 8 \sin 2x, \quad y^{(iv)} = 16 \cos 2x,$$

$$y^{(iv)} + 4y'' = 16 \cos 2x - 16 \cos 2x = 0,$$

by successive differentiation and substitution.

(c) $y = c_1 \cos 2x + c_2 \sin 2x$ (each of $c_1$ and $c_2$ is a constant), for $y'' + 4y = 0$:

$$y' = -2c_1 \sin 2x + 2c_2 \cos 2x, \qquad y'' = -4c_1 \cos 2x - 4c_2 \sin 2x,$$

$$y'' + 4y = -4c_1 \cos 2x - 4c_2 \sin 2x + 4\,(c_1 \cos 2x + c_2 \sin 2x) = 0$$

by differentiation and substitution.

(d) $y = \sin(e^x)$, for $y'' - y' + e^{2x} y = 0$:

$$y' = e^x \cos(e^x), \qquad y'' = e^x \cos(e^x) - e^{2x} \sin(e^x),$$

$$y'' - y' + e^{2x} y = e^x \cos(e^x) - e^{2x} \sin(e^x) - \left[e^x \cos(e^x)\right] + e^{2x} \sin(e^x) = 0$$

by direct substitution.

4. Consider the differential equation $y' = 3x^2$.

(a) Verify that $y = x^3 + c_1$ is the general solution.

Differentiate: $dy/dx = y' = 3x^2$.

(b) Determine $c_1$ such that the solution curve passes through $(1, 3)$.

Solve the algebraic equation $3 = 1^3 + c_1$ for $c_1$. By inspection, $c_1 = 2$.

(c) Determine $c_1$ so that the solution satisfies the integral equation $\int_0^1 y(x)\,dx = \frac{1}{2}$.

Integrate $y(x) = x^3 + c_1$ from 0 to 1 and require the definite integral to be equal to $\frac{1}{2}$:

$$\int_0^1 \left(x^3 + c_1\right) dx = \left.\frac{x^4}{4}\right|_0^1 + \left. c_1 x \right|_0^1 = \frac{1}{2},$$

$$\frac{1}{4} + c_1 = \frac{1}{2}, \quad \text{thus} \quad c_1 = \frac{1}{4}.$$

5. Discuss how a differential equation is a generalization of an algebraic equation.

Starting with an equation

$$a_0 t^n + a_1 t^{n-1} + \cdots + a_{n-1} t + a_n = 0,$$

observe that $t^1 = t$ and $t^0 = 1$. This gives

$$a_0 t^n + a_1 t^{n-1} + \cdots + a_{n-1} t^1 + a_n t^0 = 0.$$

Substitute $b_k(x)$ for $a_k$ for all $k = 0, 1, \ldots, n$ and substitute $y^{(k)}$ for $t^k$, with the understanding that $y^{(0)}$ is the function $y$ itself. (Also, $y' := y^{(1)}$.) This gives

$$b_0(x) y^{(n)} + b_1(x) y^{(n-1)} + \cdots + b_{n-1}(x) y' + b_n(x) y = 0.$$

The solution set of an algebraic equation is a set of numbers (either real or complex). On the other hand, the solution set of a differential equation is made up of functions. The last step is to add a “driving function” $Q(x)$ and the generalization is complete.

6. Write a computer program to generate the data points for Figure 1.1.

The plotting area in the figure is approximately 300 points by 200 points, with 72 points per inch. With scaling by points, we can write a BASIC (Beginners’ All-purpose Symbolic Instruction Code) program.

    90 REM Data points for Figure 1.1; k and y are both measured in points
    100 FOR k = 0 TO 10 STEP .1
    110 y = 200 * (1 - EXP(-k / 20))
    120 PRINT k, y
    130 NEXT k
    135 REM Coarser steps as the curve flattens out
    140 FOR k = 10 TO 20 STEP .2
    150 y = 200 * (1 - EXP(-k / 20))
    160 PRINT k, y
    170 NEXT k
    180 FOR k = 20 TO 300
    190 y = 200 * (1 - EXP(-k / 20))
    200 PRINT k, y
    210 NEXT k
    220 END
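For readers without a BASIC interpreter, the same data points can be generated in Python. This is a sketch rather than a line-by-line translation: the function name `figure_points` is an assumption of this example, integer loop counters replace the fractional `STEP` values, and the endpoints shared by consecutive loops (k = 10 and k = 20) are emitted only once.

```python
import math

def figure_points():
    """Return (k, y) pairs with y = 200 * (1 - exp(-k / 20)) for 0 <= k <= 300."""
    # Integer counters avoid the round-off that repeated float steps accumulate.
    ks = [i / 10 for i in range(0, 101)]        # 0.0 .. 10.0 in steps of 0.1
    ks += [i / 5 for i in range(51, 101)]       # 10.2 .. 20.0 in steps of 0.2
    ks += [float(i) for i in range(21, 301)]    # 21 .. 300 in steps of 1
    return [(k, 200 * (1 - math.exp(-k / 20))) for k in ks]

points = figure_points()
for k, y in points[:3]:
    print(k, y)
```

The scaling is the same as in the listing above: the horizontal coordinate runs over 300 points and the curve approaches, but never reaches, a height of 200 points.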

1.4 Computers and Differential Equations

The success that digital computers enjoyed

in solving algebraic equations, both numerically

and symbolically, quickly extended itself to

differential equations. This should not be

surprising because differential equations can

be considered as a generalization, in some

sense, of algebraic equations. For example,

each solution of the algebraic equation

x

2

+ 3x + 1 = 0 is simply a number; each solution

of a differential equation is a function.

Computers have also added plotting and

interactive graphics capabilities to the

19

-

6

x

y

1

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

•

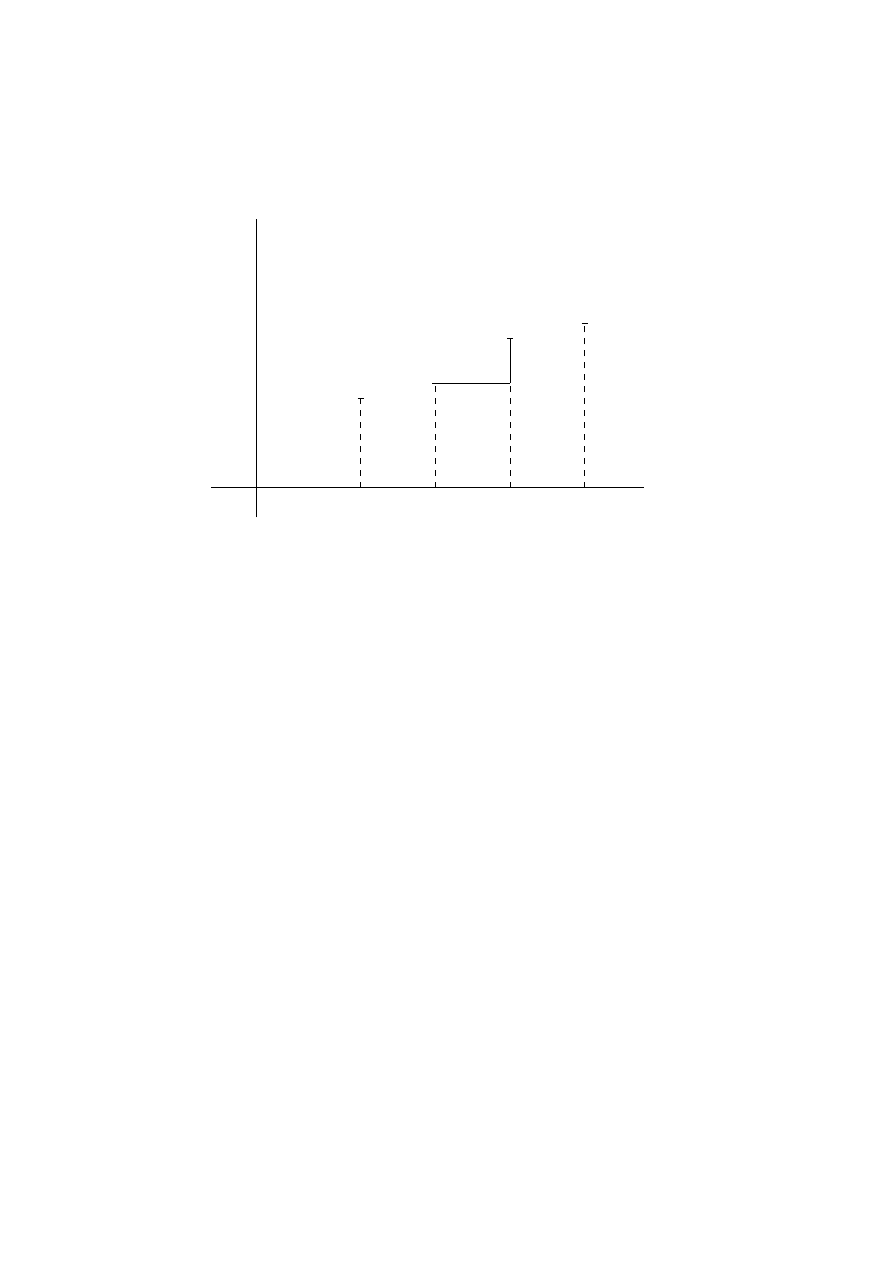

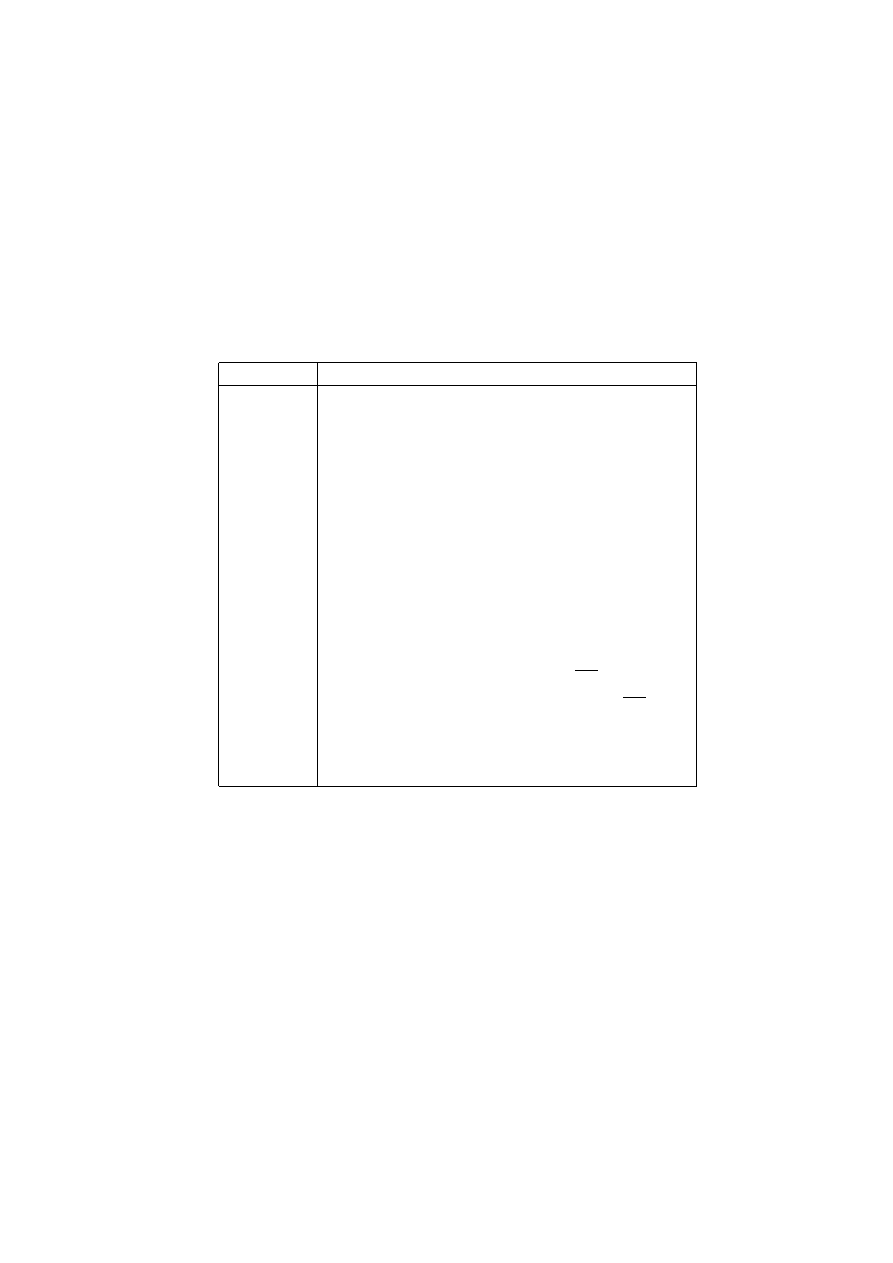

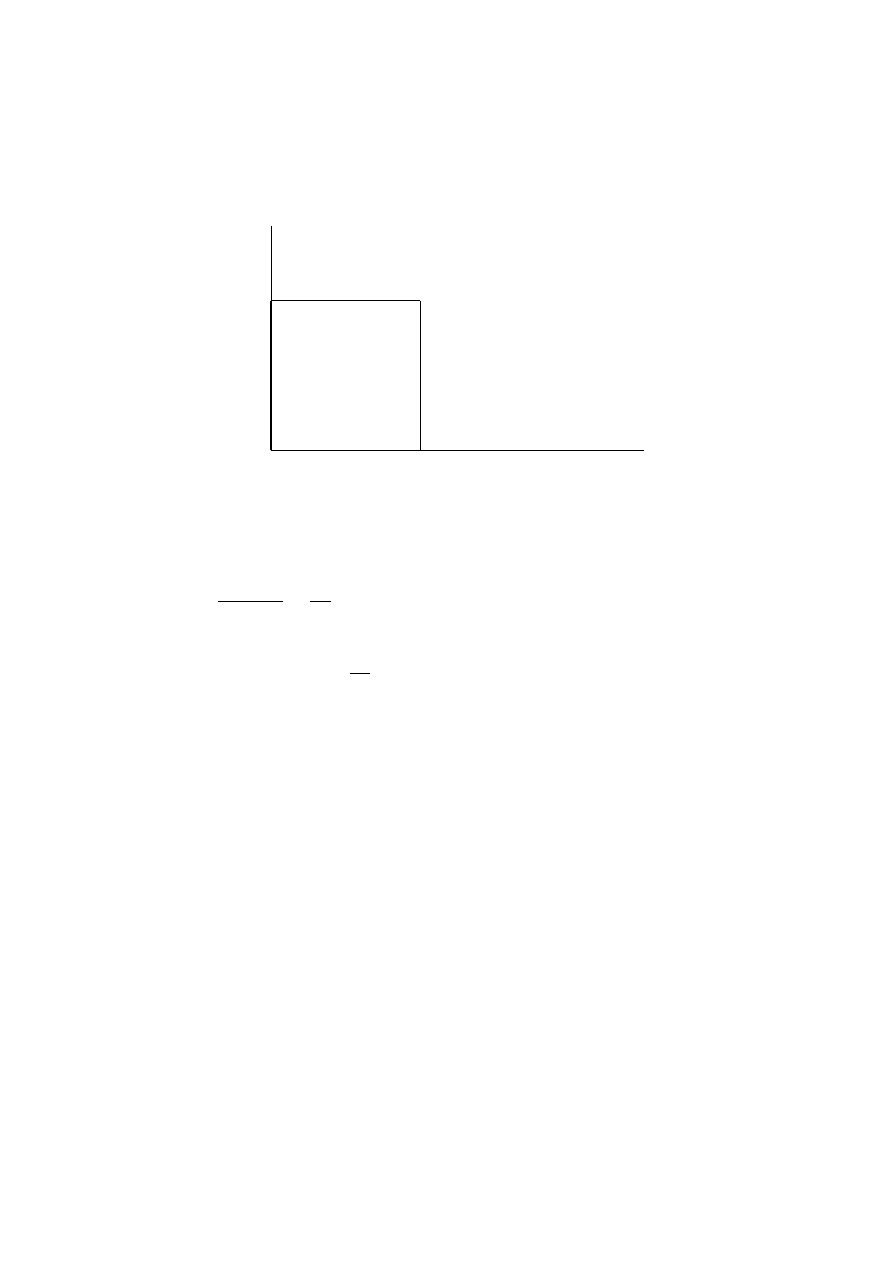

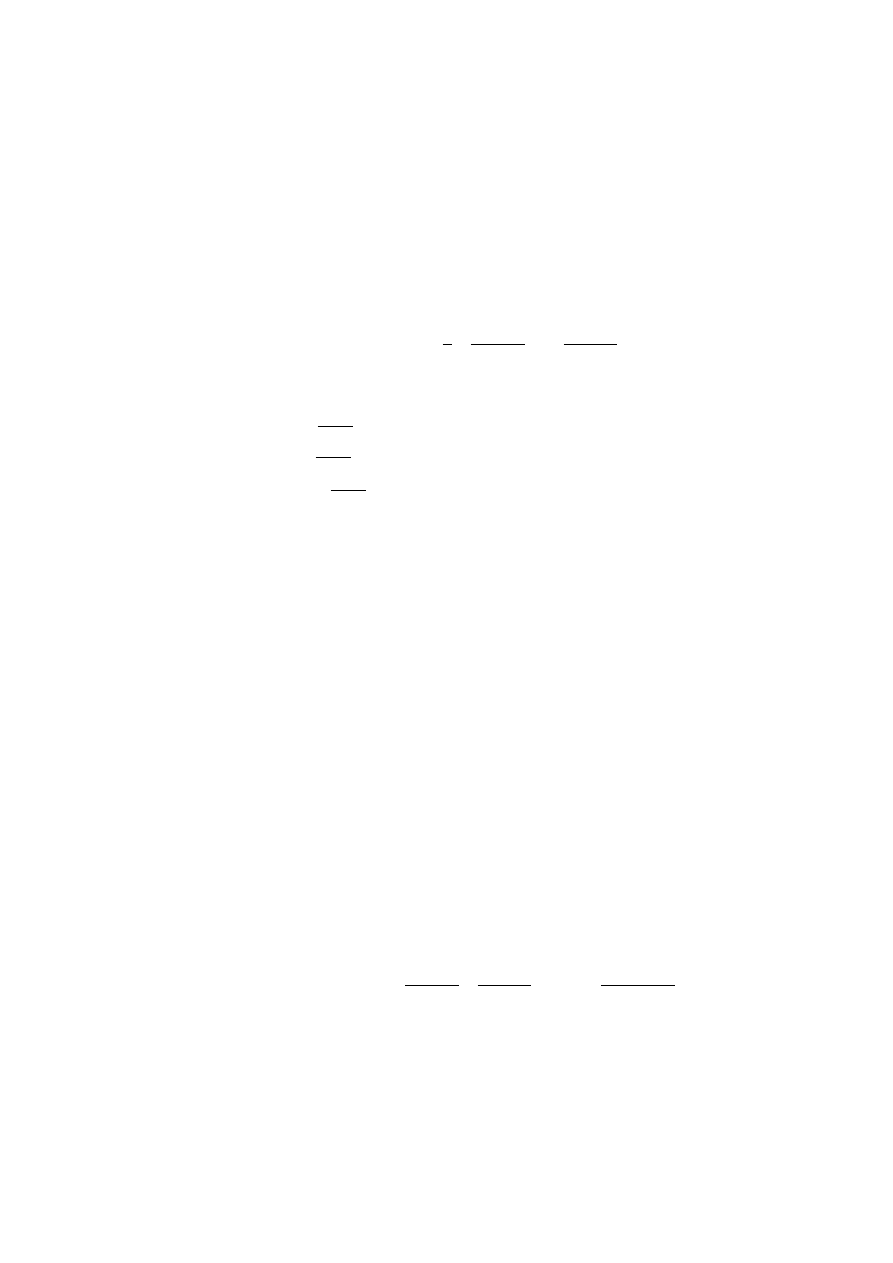

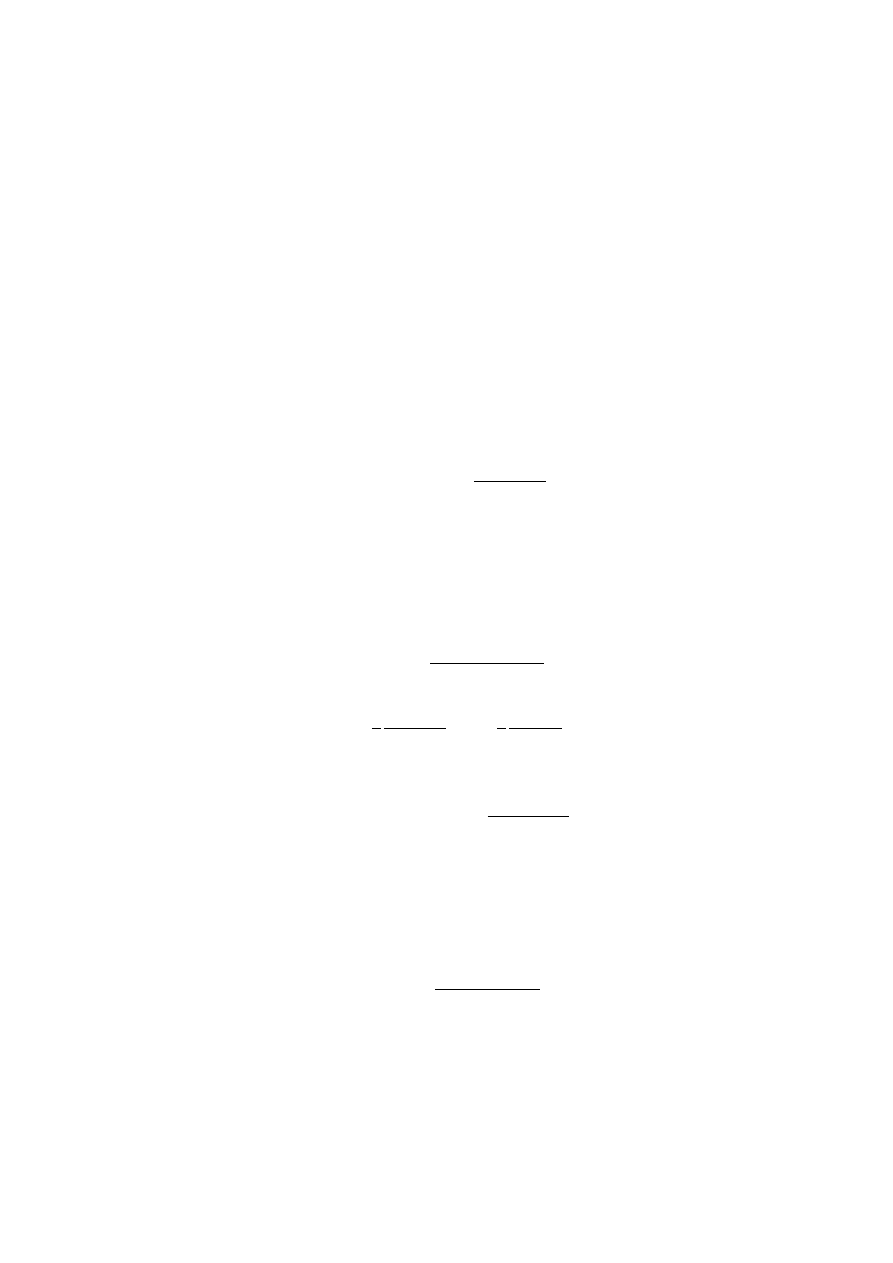

Figure 1.2:

Isoclines of y

0

= x

2

+ y

2

traditional quantitative numerical tables.

Modern computer software delivers an

approximating function to any differential

equation which cannot be solved explicitly.

This approximating function enables the user

to retrieve output in any desired format:

tables, plots, graphs, etc. The geometric

interpretation of first order differential

equations has been rendered obsolete by this

technology. The process of determining

solutions by isoclines,

once the darling of the numerical analyst,

is now viewed as a fond and vain thing no

longer worth the time to study.

For purely historical reasons, however, we will touch on the topic of isoclines. The equation

$$y' = F(x, y) \tag{1.17}$$

has a very simple geometric interpretation. One can construct from the function $F(x, y)$ above a very useful graphical method to qualitatively describe the solutions of Equation (1.17) without actually obtaining a solution of the form $y = f(x)$. This is done by setting the function $F(x, y)$ equal to a constant and plotting the resulting curves in the $xy$-plane, called isoclines, or curves of constant slope. Each isocline is determined by the equation

$$F(x, y) = m \tag{1.18}$$

where $m$ is a fixed constant. For example, consider the first order differential equation

$$\frac{dy}{dx} = x^2 + y^2. \tag{1.19}$$

The isoclines are concentric circles centered at the origin: the isocline of slope $m > 0$ is the circle of radius $\sqrt{m}$. (See Figure 1.2.) We use some modern computer software to obtain a solution through the point $(1, 2)$. The software indicates that a closed form, or explicit solution, cannot be obtained. We settle for a numerical solution and get a table of values for $y$ in terms of $x$. For this example we let $x$ march from $-1.1$ to $1.1$ with a step size of $0.1$:

| $x$ | $y$ |
|---|---|
| −1.1 | −0.0355234 |
| −1.0 | 0.0749577 |
| −0.9 | 0.166858 |
| −0.8 | 0.243498 |
| −0.7 | 0.30751 |
| −0.6 | 0.361096 |
| −0.5 | 0.406214 |
| −0.4 | 0.444702 |
| −0.3 | 0.478376 |
| −0.2 | 0.509112 |
| −0.1 | 0.53891 |
| 0.0 | 0.569969 |
| 0.1 | 0.604761 |
| 0.2 | 0.646141 |
| 0.3 | 0.697497 |
| 0.4 | 0.76299 |
| 0.5 | 0.847958 |
| 0.6 | 0.95961 |
| 0.7 | 1.10828 |
| 0.8 | 1.30986 |
| 0.9 | 1.59084 |
| 1.0 | 2. |
| 1.1 | 2.63986 |

The software also produces an outstanding graph, which is available as a computer graphics file.

This method can be helpful in getting a qualitative

knowledge of a solution curve in engineering problems

involving first order differential equations whose

solution set cannot be expressed in terms of known

functions or where finding a solution is mathematically

intractable.
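A numerical solution of $y' = x^2 + y^2$ through the point $(1, 2)$ can be sketched in a few lines of Python. The text does not say which software or algorithm produced its values, so the classical fourth-order Runge-Kutta scheme used here is a substitute chosen for this illustration; the function names `slope` and `rk4` and the step count are likewise assumptions.

```python
def slope(x, y):
    """Right-hand side of the equation: F(x, y) = x^2 + y^2."""
    return x * x + y * y

def rk4(f, x0, y0, x_end, steps):
    """Integrate y' = f(x, y) from x0 to x_end with classical RK4 steps."""
    h = (x_end - x0) / steps      # h is negative when marching backward
    x, y = x0, y0
    for i in range(steps):
        k1 = f(x, y)
        k2 = f(x + h / 2, y + h * k1 / 2)
        k3 = f(x + h / 2, y + h * k2 / 2)
        k4 = f(x + h, y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        x = x0 + (i + 1) * h      # recompute x from i to limit round-off
    return y

# March away from the initial point (1, 2) in both directions.
for target in (-1.1, 0.0, 0.9, 1.1):
    print(target, rk4(slope, 1.0, 2.0, target, steps=2000))
```

Starting from the known point and integrating both backward and forward is one simple way to cover an interval that contains the initial point in its interior.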

1.5 Euler’s Method

To obtain a numerical solution to a differential equation, each of the arbitrary constants must have a definite value. One might view the numerical techniques as a generalization of the concept of the definite integral $\int_a^b F(x)\,dx$. On the other hand, closed form, symbolic solutions may be considered as a generalization of the so-called indefinite integral $\int F(x)\,dx + c_1$. From an indefinite integral and a particular point $(x_0, y_0)$, with $a < x_0 < b$, one may compute the particular solution, $f(x)$. The condition

$$f(x_0) := y_0 = \int_{x_0}^{x_0} F(t)\,dt + c_1$$

implies that $c_1 = y_0$ so that

$$f(x) = y_0 + \int_{x_0}^{x} F(t)\,dt.$$

Using this construction, the solution curve $\{(x, y) \mid a < x < b,\ y = f(x)\}$ passes through the point $(x_0, y_0)$ and there are no arbitrary constants. This is referred to as an initial value problem, and the subsidiary conditions that determine the values of the arbitrary constants are known as initial values. By placing some restrictions on the function $F$ one may ensure that the initial value problem has a unique solution. The etymology of the expression “initial value” lies in the problem of determining the behavior of the motion of a particle moving along a constrained path and subject to known forces. Knowing the initial position and the initial velocity is sufficient to uniquely determine all future behavior of the particle.
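The formula $f(x) = y_0 + \int_{x_0}^{x} F(t)\,dt$ translates directly into a small numerical routine. The Python sketch below uses composite Simpson quadrature, which is a choice made for this illustration and not a method taken from the text; the names `simpson` and `particular_solution` are hypothetical. The sample data $F(x) = 3x^2$, $(x_0, y_0) = (1, 3)$ are borrowed from Problem 4(b) of Section 1.3, whose solution is $y = x^3 + 2$.

```python
def simpson(F, a, b, n=100):
    """Composite Simpson approximation of the integral of F from a to b."""
    if n % 2:
        n += 1                    # Simpson's rule needs an even panel count
    h = (b - a) / n
    total = F(a) + F(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * F(a + i * h)
    return total * h / 3

def particular_solution(F, x0, y0):
    """Return f with f(x) = y0 + integral of F from x0 to x."""
    return lambda x: y0 + simpson(F, x0, x)

f = particular_solution(lambda x: 3 * x**2, x0=1.0, y0=3.0)
print(f(1.0), f(2.0), f(0.0))     # compare with x**3 + 2 at x = 1, 2, 0
```

No arbitrary constant appears in the result: the initial value $y_0$ has taken the place of $c_1$.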

The simplest, non-trivial initial value problem is that of determining a particular solution to

$$y' \equiv \frac{dy}{dx} = F(x, y), \tag{1.20}$$

where each of $F$ and $F_y \equiv \partial F/\partial y$ is a continuous function in some rectangular region $R$ having $(x_0, y_0)$ in its interior. For a given set of discrete points $x_0 < x_1 < \cdots < x_{n-1} < x_n$ we wish to compute a table of approximate numerical values $(x_k, y_k)$ to points on the solution curve $(x_k, f(x_k))$. There are several ways of doing this, the most elementary being a simple difference method known as Euler’s method.

The idea is straightforward enough. Starting with

(x

0

, y

0

) and a step of size ∆x := x

1

− x

0

compute

∆y := F (x

0

, y

0

) ∆x

(1.21)

then set x

1

= x

0

+ ∆x and y

1

:= y

0

+ ∆y.

Repeat this procedure, redefining the increment

∆x so that ∆x := x

2

− x

1

and

computing

∆y := F (x

1

, y

1

) ∆x

to obtain

y

2

:= y

1

+ ∆y = y

1

+ F (x

1

, y

1

) · [x

2

− x

1

] .

23

-

6

x

0

x

1

x

2

x

3

y

x

•

•

•

•

∆x

∆y

"

"

"

"

"

a

a

a

a

a

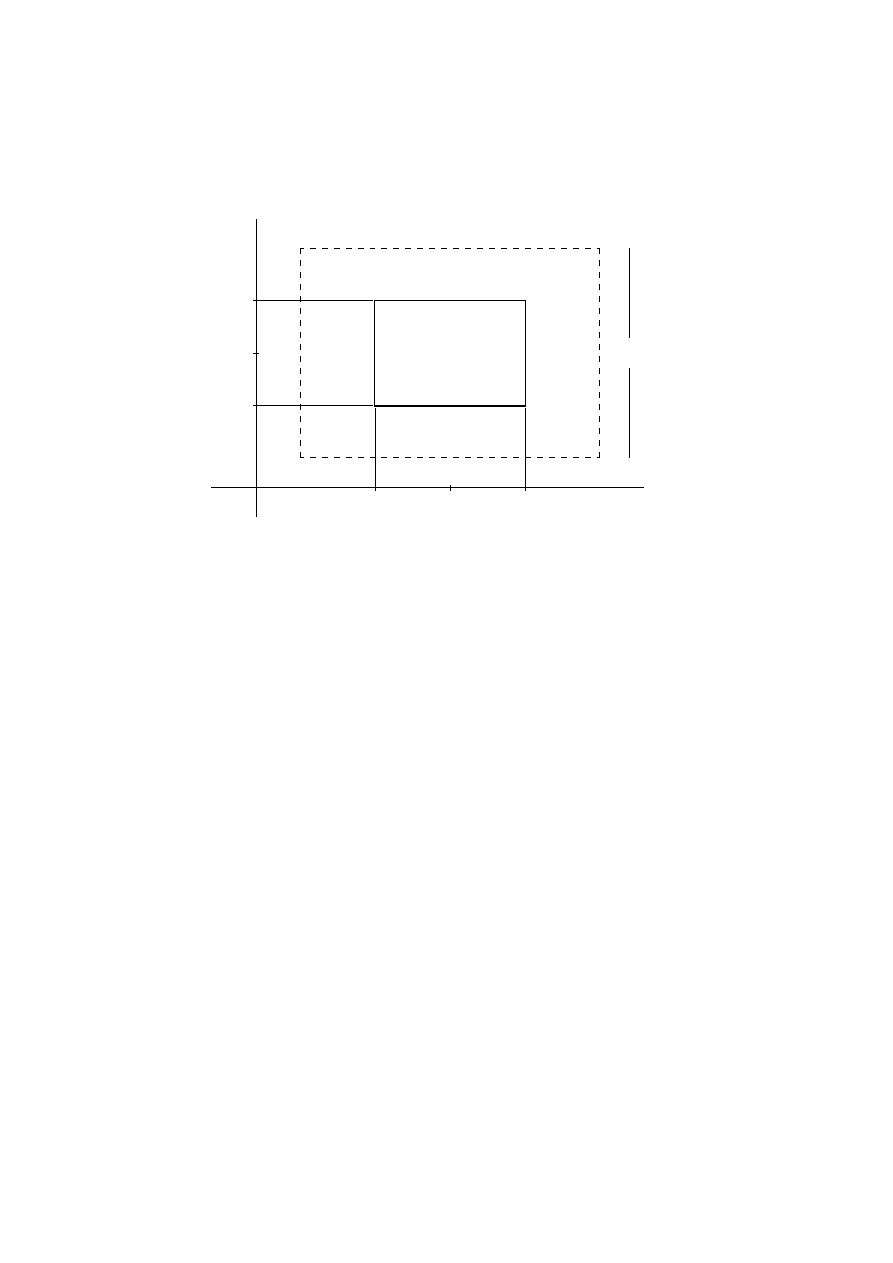

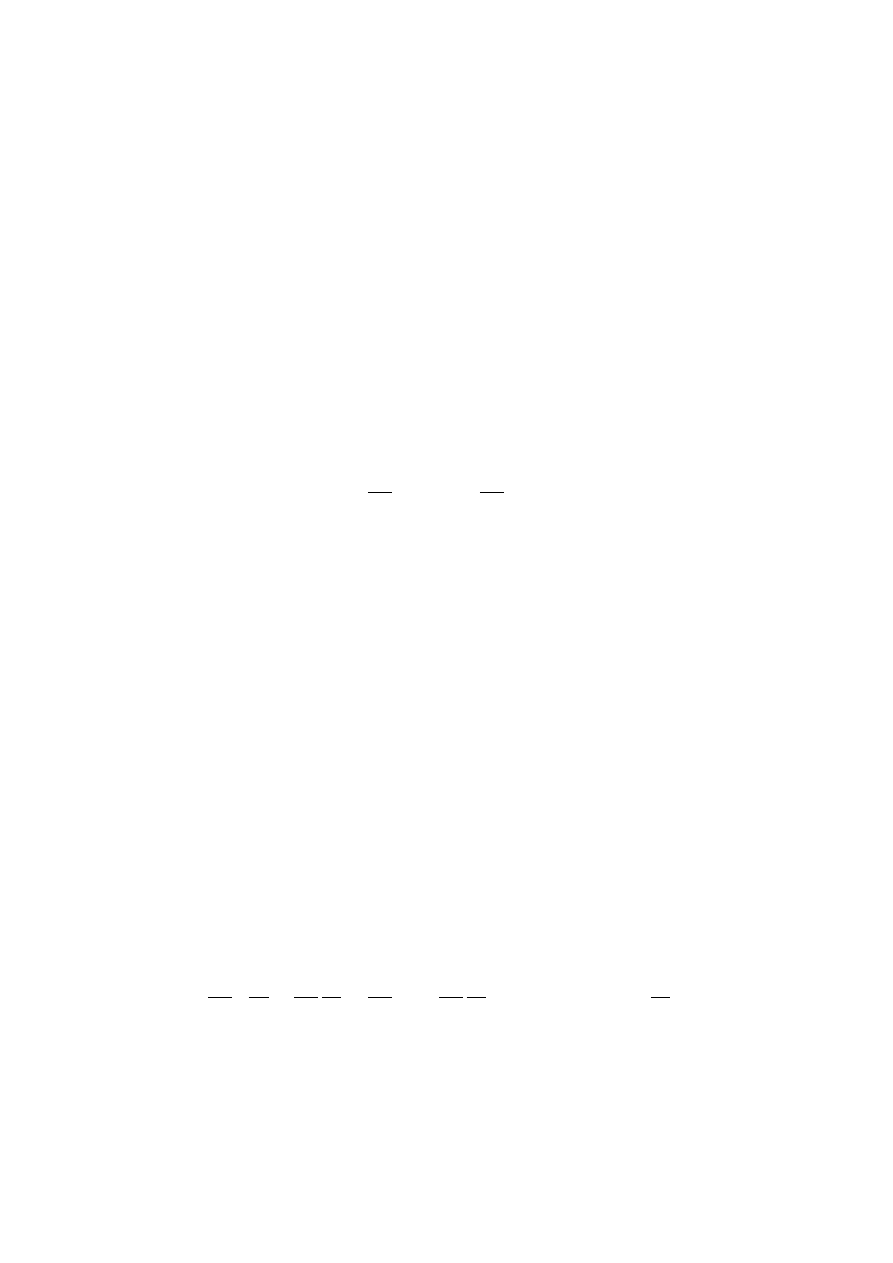

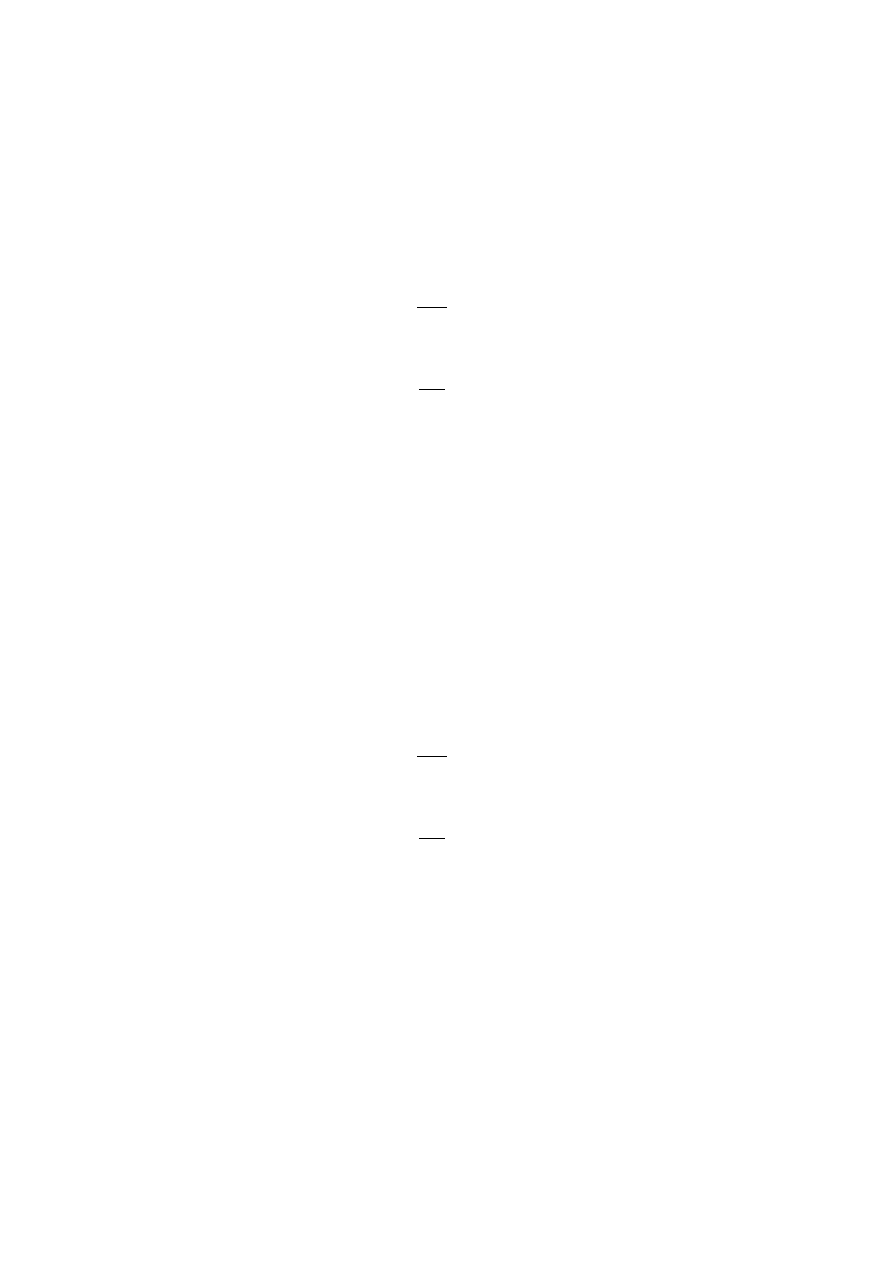

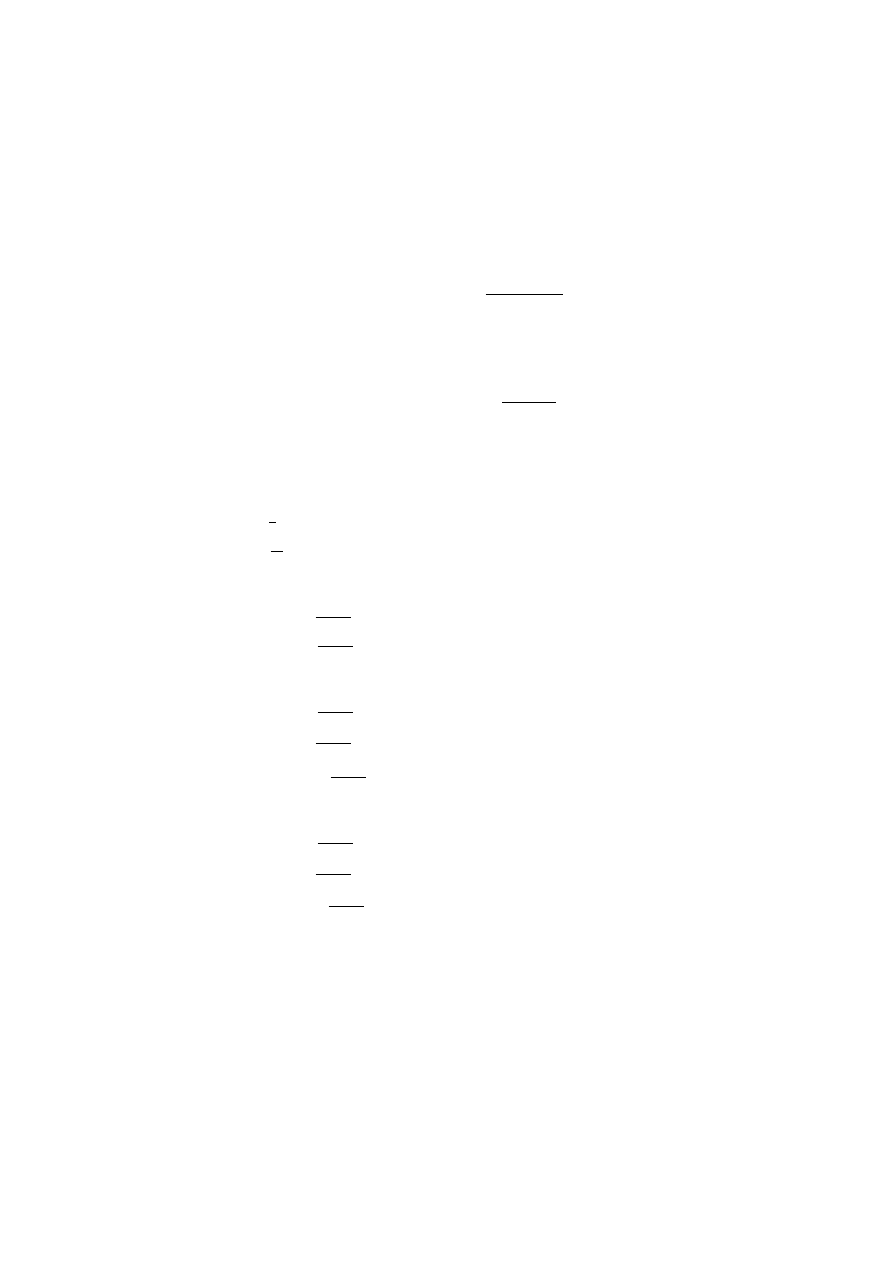

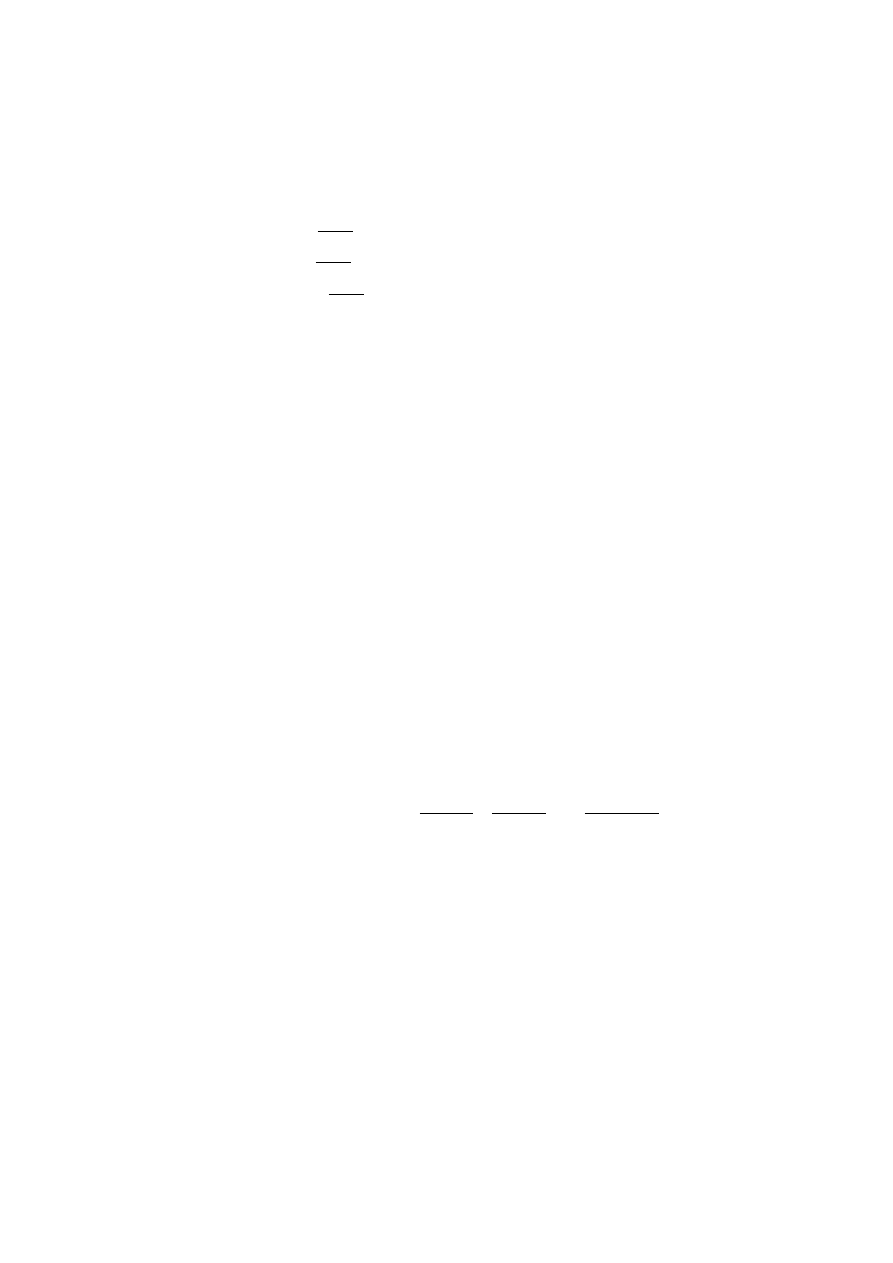

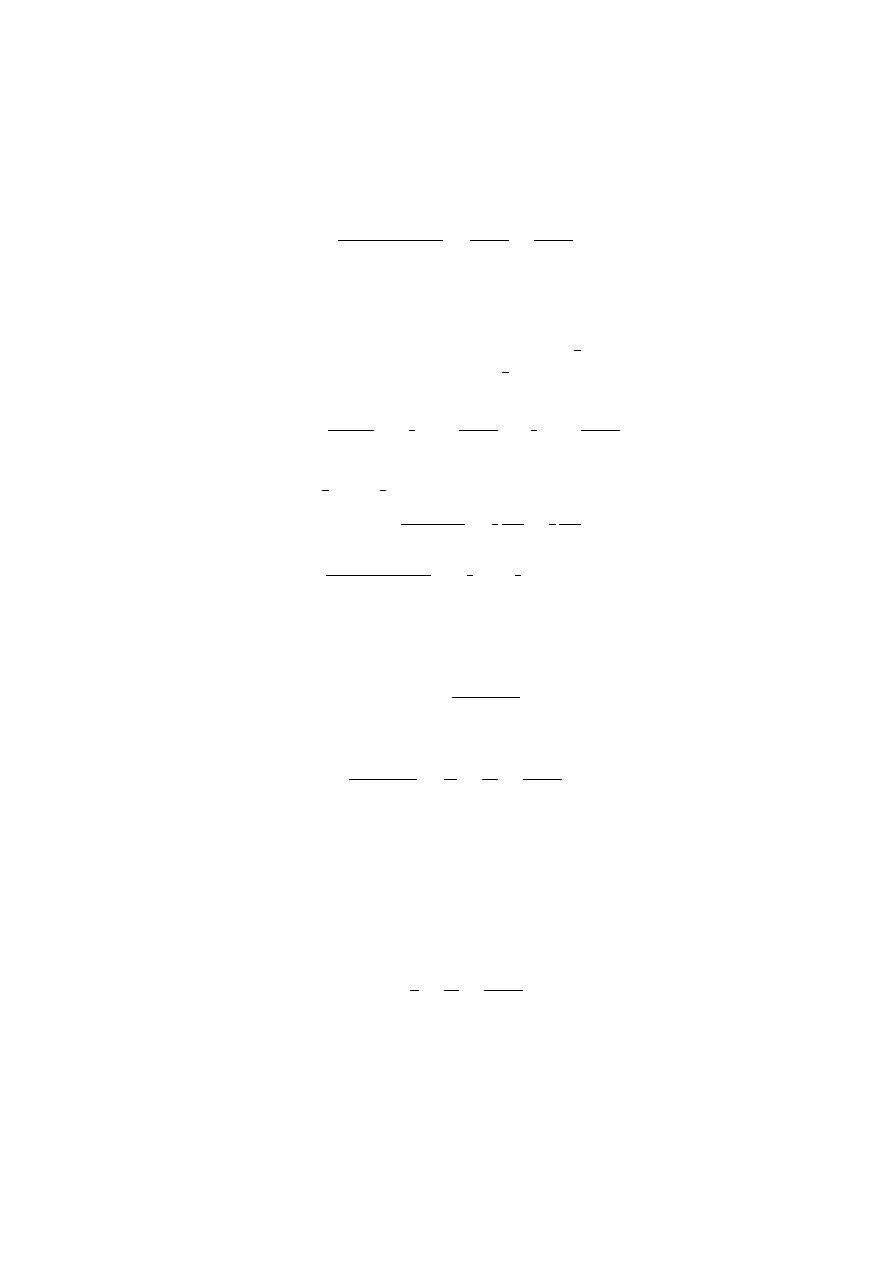

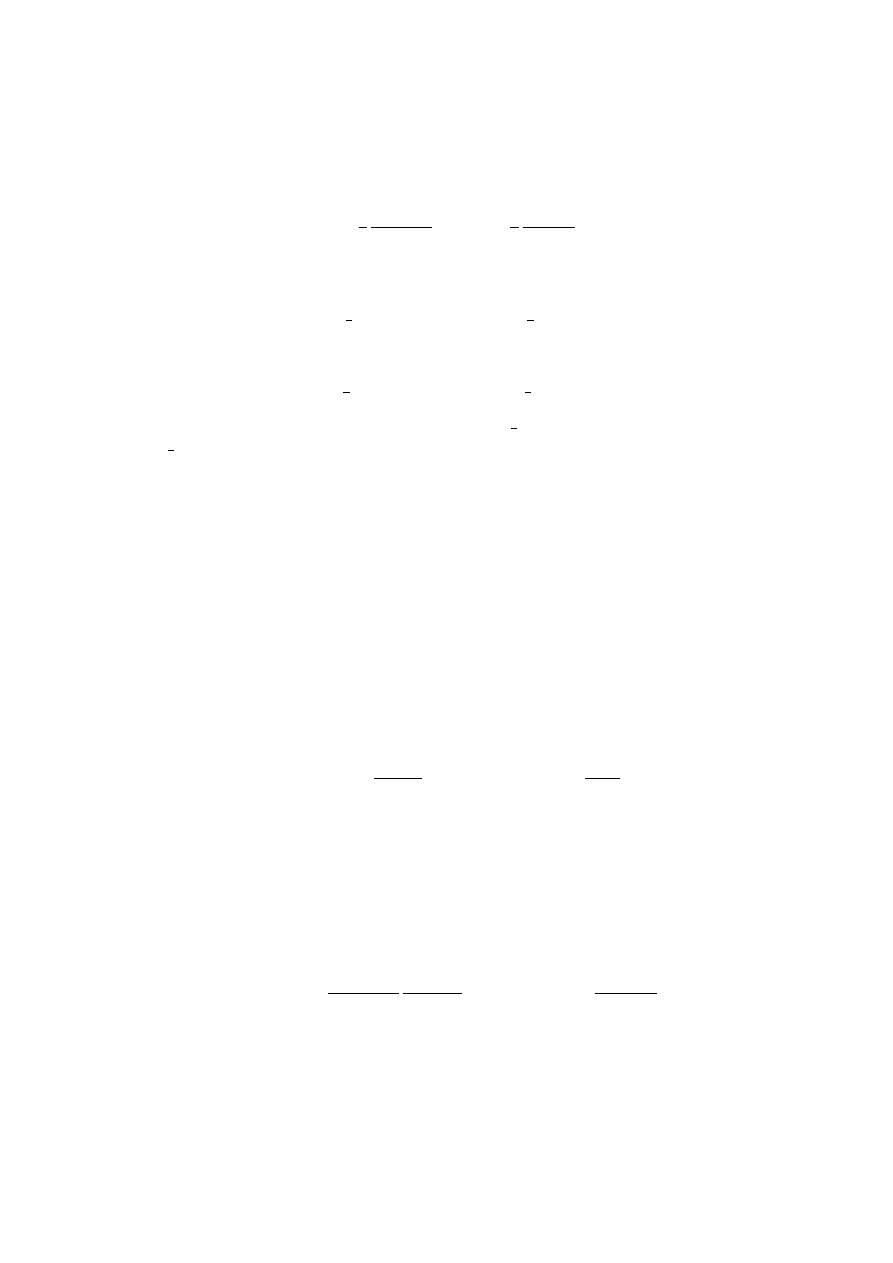

Figure 1.3:

Solution Obtained by Euler’s Method

If we define $y'_k = F(x_k, y_k)$ and $h_k = x_{k+1} - x_k$, for all appropriate values of the integer $k$, then
$$ y_{k+1} = y_k + y'_k \cdot h_k. \tag{1.22} $$
This is illustrated in Figure 1.3. If the points are equally spaced, so that
$$ \Delta x = x_1 - x_0 = x_2 - x_1 = \cdots = x_n - x_{n-1}, $$
then we simply write $h$ for $h_k$, $k = 0, 1, \ldots, n-1$, and Equation (1.22) becomes
$$ y_{k+1} = y_k + h\, y'_k, \qquad k = 0, 1, \ldots, n-1. $$
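The recurrence (1.22) can be sketched in a few lines of Python. This is only an illustration, not part of the original text: the function name `euler`, the list-of-pairs return value, and the sample problem $y' = y$ are assumptions made for the sketch. The step is taken from $x_k$ to $x_{k+1}$, so unequally spaced points are allowed.

```python
from typing import Callable, List, Tuple


def euler(F: Callable[[float, float], float],
          xs: List[float],
          y0: float) -> List[Tuple[float, float]]:
    """Tabulate (x_k, y_k) by Euler's method on the grid xs[0] < xs[1] < ..."""
    table = [(xs[0], y0)]
    y = y0
    for k in range(len(xs) - 1):
        h_k = xs[k + 1] - xs[k]      # step from x_k to x_{k+1}
        y = y + F(xs[k], y) * h_k    # y_{k+1} = y_k + y'_k * h_k
        table.append((xs[k + 1], y))
    return table


if __name__ == "__main__":
    # Illustrative problem (an assumption for this sketch): y' = y, y(0) = 1.
    grid = [0.1 * k for k in range(6)]
    for x, y in euler(lambda x, y: y, grid, 1.0):
        print(f"{x:.1f}  {y:.6f}")
```

Passing the grid explicitly, rather than a single step size, keeps the sketch faithful to the general form of (1.22); the equally spaced case is recovered by supplying an evenly spaced list.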

One may observe that ∆x is actually an

approximation to the differential dx and

that ∆y := F (x

k

, y

k

) ∆x is

an approximation to dy evaluated at the

point (x

k

, y

k

). From henceforth we will only

24

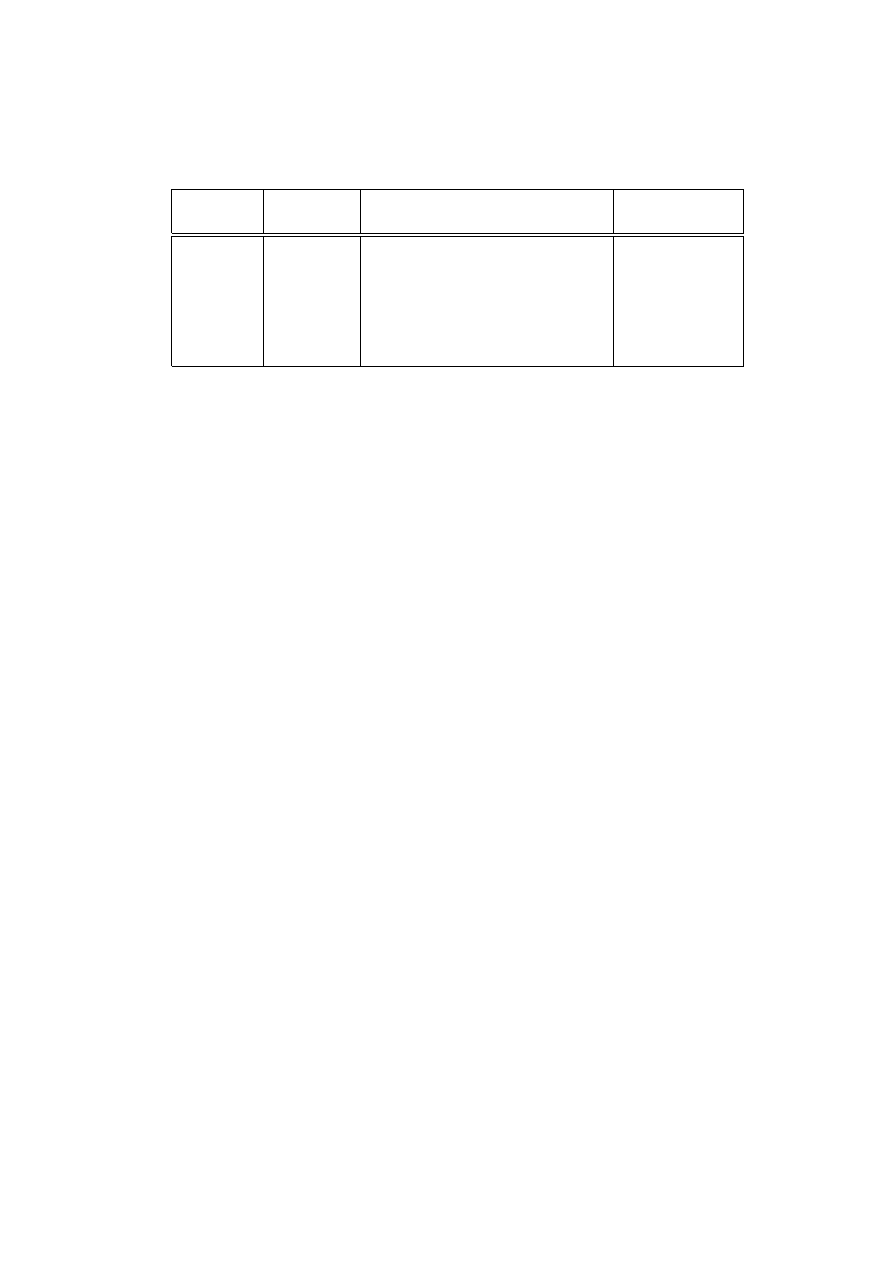

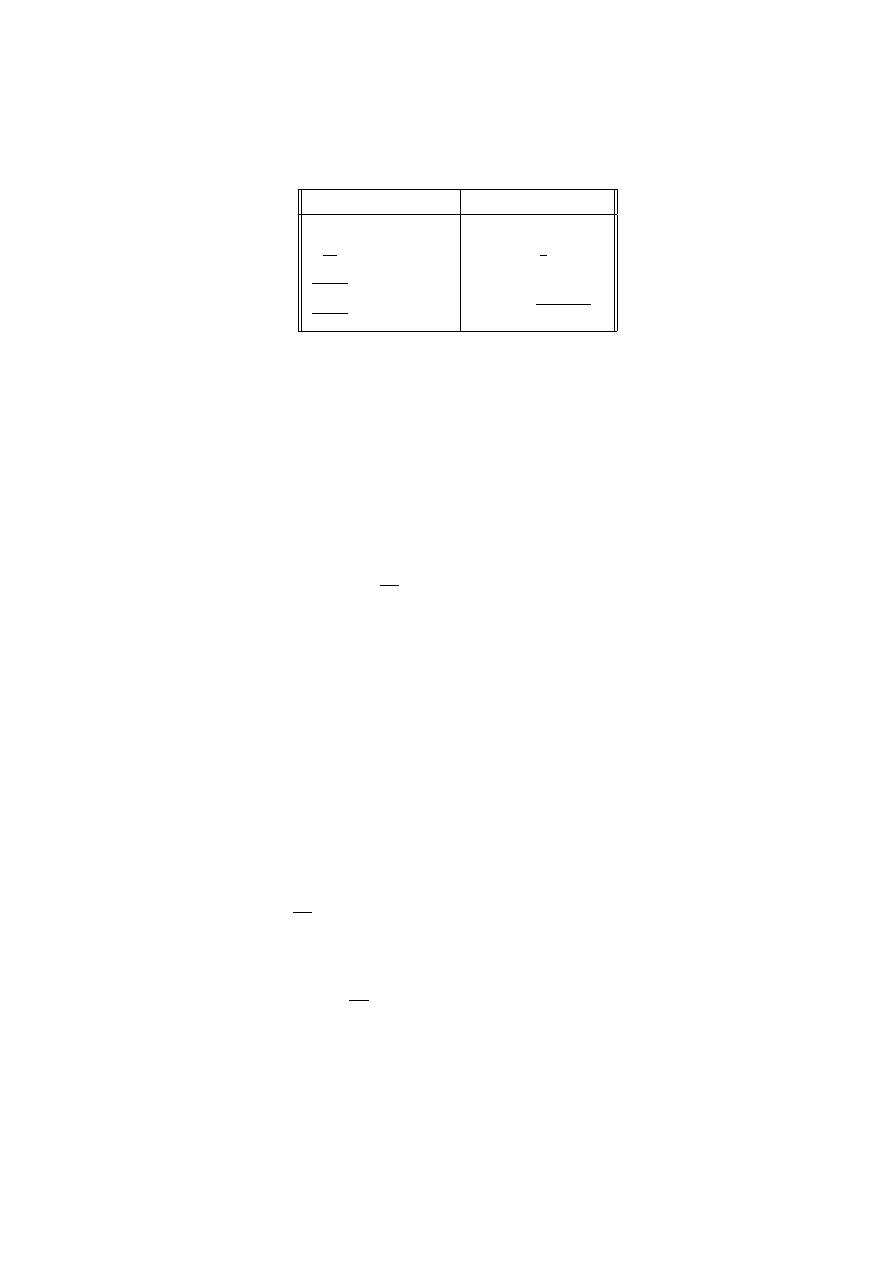

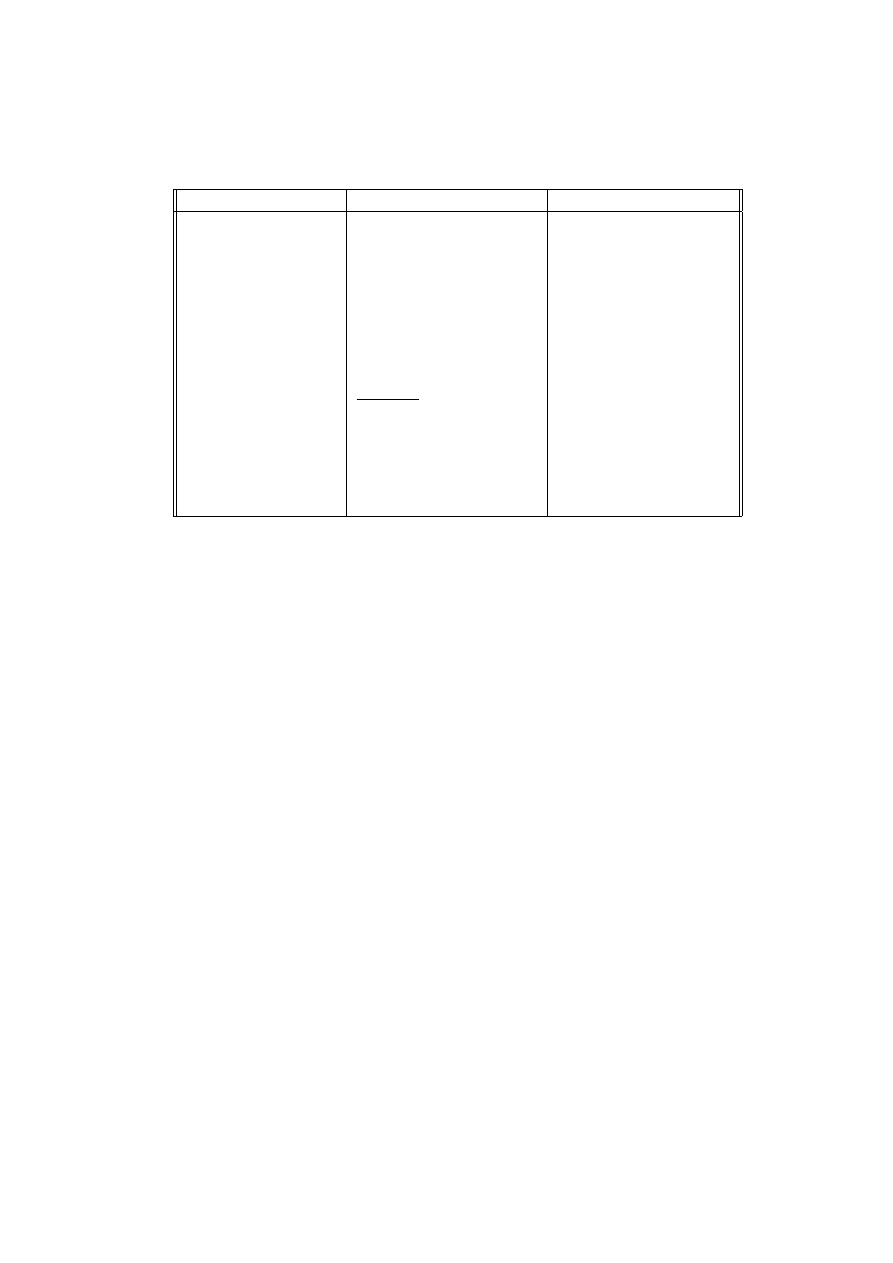

Total

Slope at (x, y)

Increment of y

x

y

F (x, y)

∆y

x

0

y

0

y

0

0

:= F (x

0

, y

0

)

F (x

0

, y

0

) ∆x

x

0

+ ∆x

y

0

+ ∆y

y

0

1

:= F (x

0

+ ∆x, y

0

+ ∆y)

F (x

1

, y

1

) ∆x

x

0

+ 2∆x

y

1

+ ∆y

y

0

2

:= F (x

1

+ ∆x, y

1

+ ∆y)

F (x

2

, y

2

) ∆x

x

0

+ 3∆x

y

2

+ ∆y

y

0

3

:= F (x

2

+ ∆x, y

2

+ ∆y)

· · ·

· · ·

· · ·

· · ·

· · ·

x

0

+ n∆x

y

n−1

+ ∆y

y

0

n

= F (x

n−1

+ ∆x, y

n−1

+ ∆y)

F (x

n

, y

n

) ∆x

Table 1.1:

Euler’s Method Calculations

consider equally spaced points x

k

. Now

look at the table 1.1,

with ∆x kept constant,

for Euler’s method.

The example $y' = y$ with $x_0 = 0$, $y_0 = 1$, $\Delta x = 0.1$, is worked out in Table 1.2. Of course, the solution to $y' = y$ with $y(0) = 1$ is simply the exponential function $y = e^x$. This function is well behaved and is defined for all real numbers. We will compute the values for $k = 0, \ldots, 5$ using a BASIC (Beginners’ All-purpose Symbolic Instruction Code) program.

25

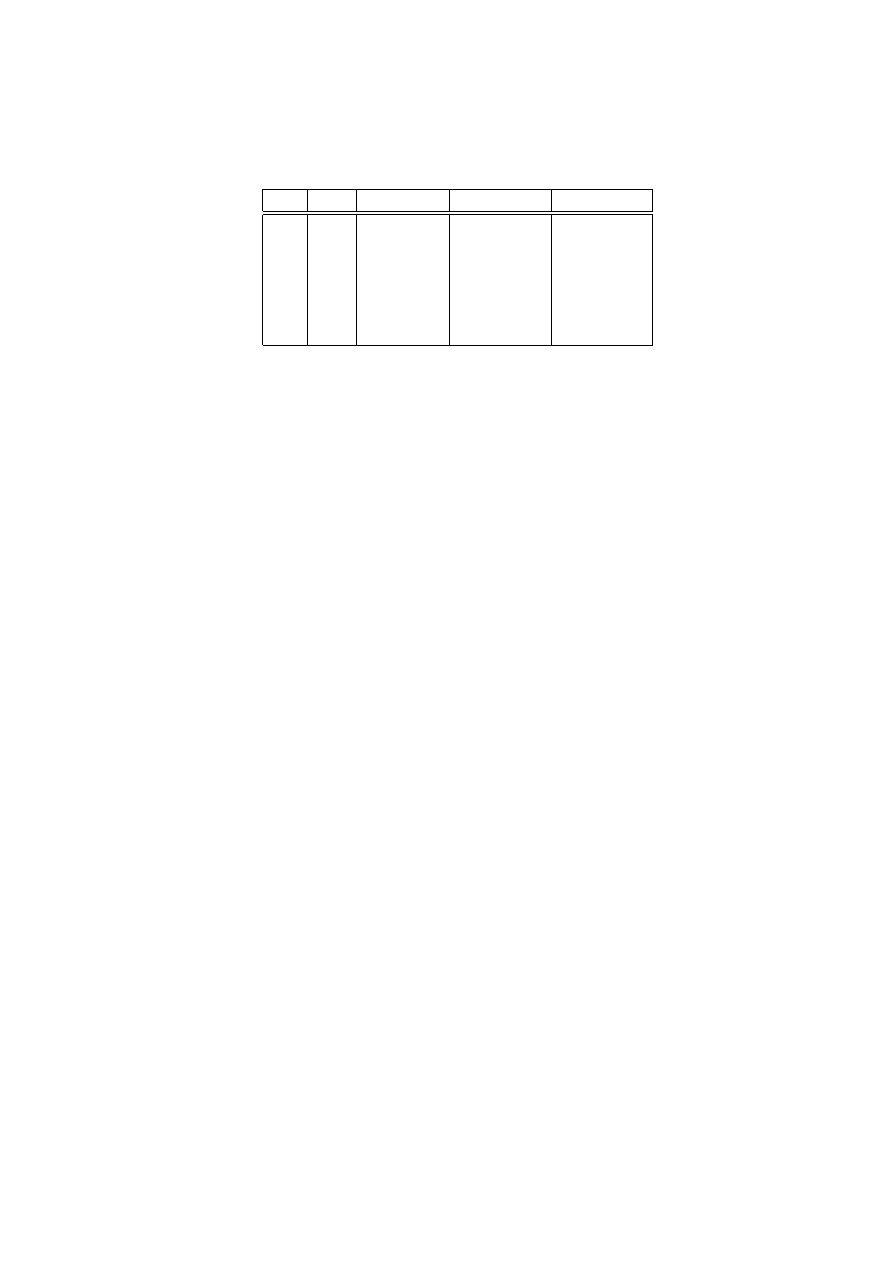

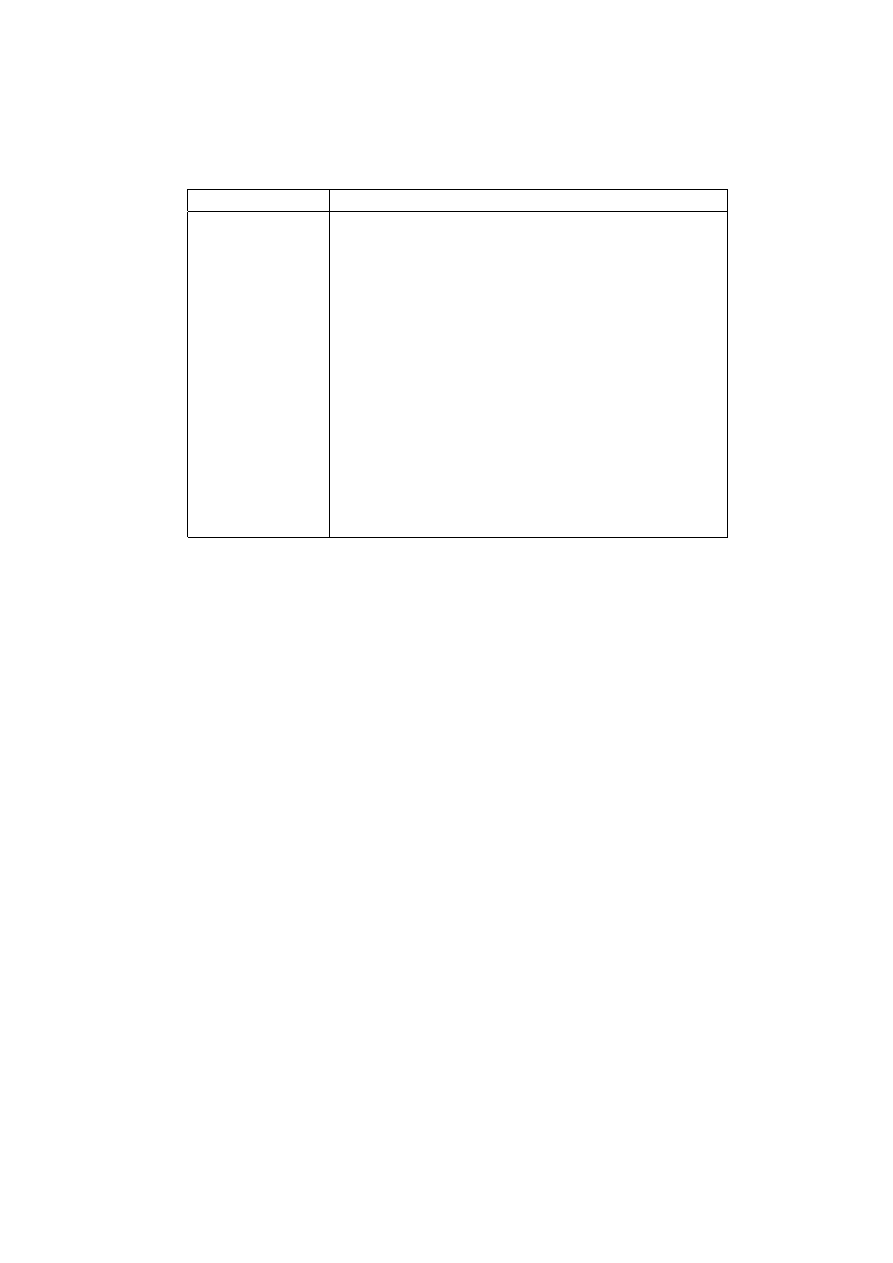

k

x

k

y

k

∆y

e

x

k

0

0

1

0.1

1

1

.1

1.1

0.21

1.105171

2

.2

1.21

0.331

1.221403

3

.3

1.331

0.4641

1.349859

4

.4

1.4641

0.61051

1.491825

5

.5

1.61051

0.771561

1.648721

Table 1.2:

An Approximation to e

x

100 REM Euler's method for y' = y with y(0) = 1
110 x0 = 0 : y0 = 1
120 h = .1 : n = 5
130 x = x0 : y = y0
140 PRINT x, y, EXP(x)
150 FOR k = 1 TO n
160 yprime = y : REM slope F(x, y) = y
170 x = x + h
180 y = y + yprime * h
190 PRINT x, y, EXP(x)
200 NEXT k
210 END

One might observe that the smaller the step

size, that is, the smaller the value of ∆x,

the closer the approximation of Euler’s method is

to the exact solution. We went from $x_0$ to $x_n$ by positive increments $h := \Delta x$; proceeding by negative increments would have yielded a solution to the left. With modern computer software, there is little need to apply such techniques as Euler’s method;

however, there is much to be learned about

which technique is best employed for the

computation of a solution to a given differential

equation and which should be avoided. This topic,

the numerical approximation of

solution curves to differential

equations, is part of numerical analysis. The

computer algorithms and techniques found in

commercial software and applied to

various problems require special skills and

knowledge beyond the scope of this introductory

course; however, certain topics in the estimation

of the error in computed versus exact solutions

will be covered in a later chapter. The reader

is encouraged to experiment with computer

software which calculates and plots solution

curves to various ordinary differential equations

and systems of ordinary differential equations.

Some of the graphics are truly rad and awesome!
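The remark that a smaller step size brings Euler’s method closer to the exact solution can be checked with a short experiment. The following Python sketch is an illustration only: the function name `euler_final` and the choice of step counts are assumptions, and the test problem is the example $y' = y$, $y(0) = 1$ used above, evaluated at $x = 0.5$.

```python
import math


def euler_final(h: float, n: int) -> float:
    """Euler's method for y' = y, y(0) = 1; returns y_n, the estimate at x = n*h."""
    y = 1.0
    for _ in range(n):
        y = y + y * h
    return y


if __name__ == "__main__":
    for n in (5, 50, 500, 5000):
        h = 0.5 / n
        approx = euler_final(h, n)
        error = math.exp(0.5) - approx
        print(f"h = {h:<8g} y_n = {approx:.6f}  error = {error:.6f}")
```

Each row uses ten times as many steps as the one before it, so the printed errors show directly how the approximation improves as $\Delta x$ shrinks.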

1.6

The equation $y' = F(x)$

The most familiar of all differential equations are those of the form
$$ y' = F(x), \tag{1.23} $$
where $F(x)$ is continuous for all $x$, $a \le x \le b$. Solutions of (1.23) are obtained via the Fundamental Theorem of Calculus.

Theorem 1 Fundamental Theorem of Calculus. Let $F(x)$ be continuous in the interval $a \le x \le b$. For each real number $c_1$, there exists a unique solution $f(x)$ of (1.23) in the interval $[a, b]$ such that $f(a) := c_1$. The solution is given by the definite integral
$$ f(x) = \int_a^x F(t)\,dt + c_1. \tag{1.24} $$

Letting $\int F(t)\,dt$ denote the indefinite integral of $F$, we can denote Equation (1.24) as
$$ y = \int F(x)\,dx + c_1, \qquad a \le x \le b. $$
Another form of the above is
$$ y = \int_{x_0}^{x} F(t)\,dt + c_1, \qquad a \le x \le b, \tag{1.25} $$
where $a \le x_0 \le b$. In the above equation the indefinite integral has been replaced by the definite integral with limits of integration $x_0$, $x$, and with $t$ as a “dummy” variable of integration. Again, it is the Fundamental Theorem of Calculus that says
$$ \frac{d}{dx} \int_{x_0}^{x} F(t)\,dt = F(x), \tag{1.26} $$
so that the integral on the right of Equation (1.25) is indeed an indefinite integral of $F(x)$.

In applications, it is frequently required that $y := y_0$ when $x = x_0$. The constant $c_1$ must be chosen in Equation (1.25) such that
$$ y_0 = \int_{x_0}^{x_0} F(u)\,du + c_1 = 0 + c_1. $$
Therefore $c_1 := y_0$ and the solution of the differential equation becomes
$$ y = y_0 + \int_{x_0}^{x} F(t)\,dt, \qquad a \le x \le b. \tag{1.27} $$

Just because the indefinite integral

R

F (t) dt

cannot be evaluated in closed form does not mean that

28

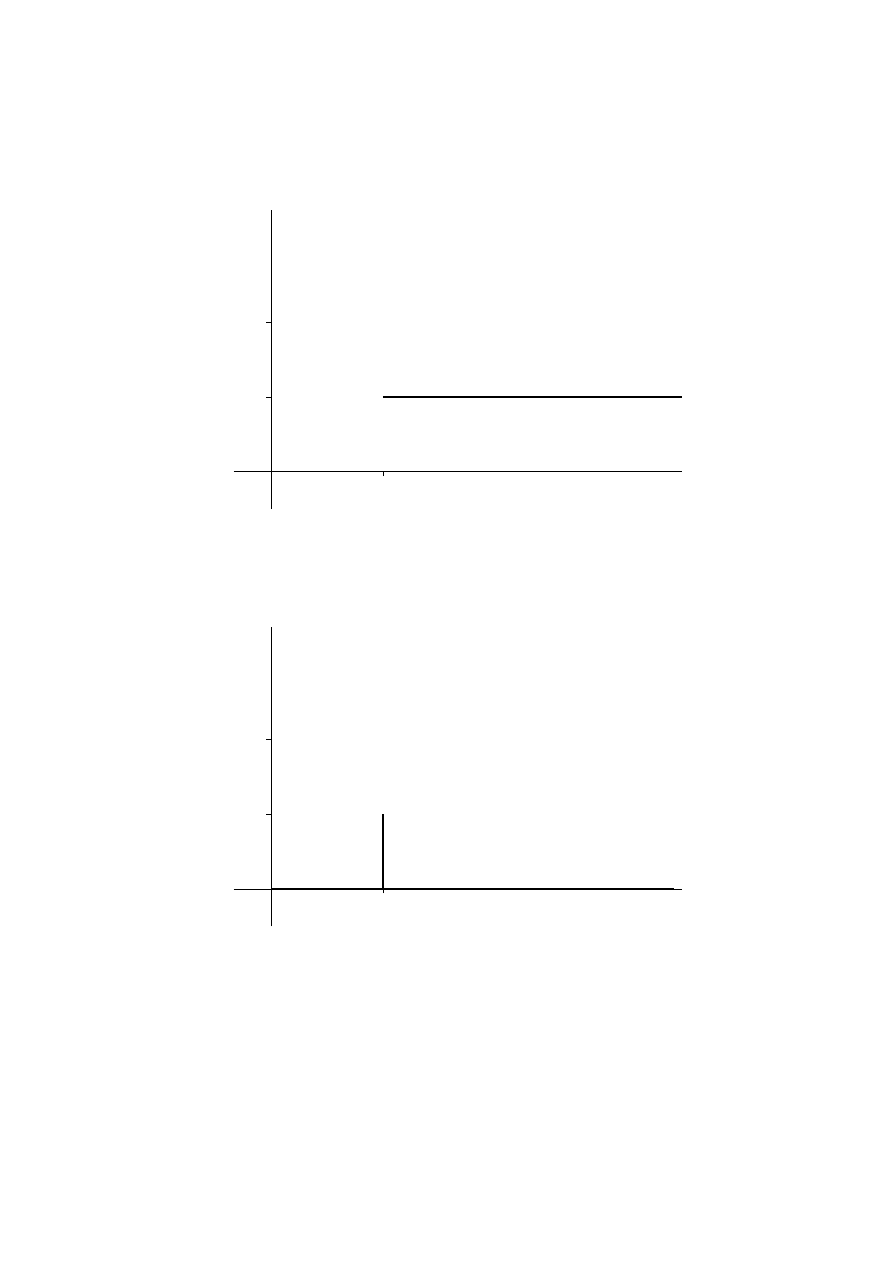

-

6

x

0

x

0

+∆x

x

y

t

∆x

F (x

0

)

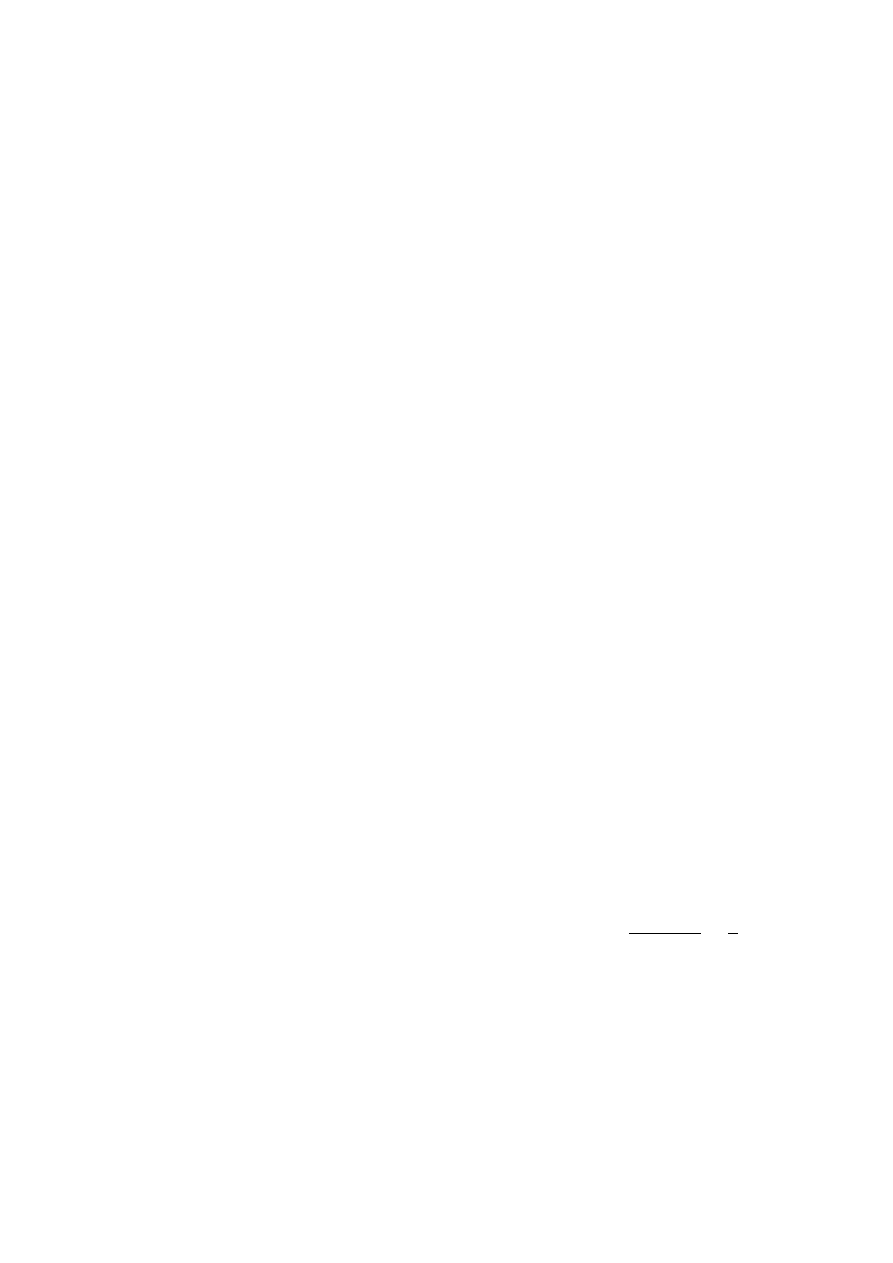

Figure 1.4:

Integration by Simple Differences

it does not have a meaning. In fact, several important

functions in statistics (e.g., the normal distribution)

and physics (e.g., the Fresnel integrals) are expressed in

terms of definite integrals. Equation (1.27)

itself can be used to compute the value

of y for each x. Fix x and evaluate the

definite integral by any of the standard

approximation techniques: the

trapezoidal rule, Simpson’s rule, etc.

The most elementary approximation is given by

taking the sums of

“circumscribed rectangles” (also called an

inner sum) as follows.

Divide the closed interval

[x

0

, x] = { t | x

0

≤ t ≤ x } into n equal parts, each

of length ∆x,

so that x = x

0

+ n∆x.

$$ y = y_0 + \int_{x_0}^{x} F(u)\,du \approx y_0 + F(x_0)\,\Delta x + F(x_0 + \Delta x)\,\Delta x + \cdots + F(x_0 + (n-1)\Delta x)\,\Delta x, \tag{1.28} $$
as suggested in Figure 1.4. Notice that when $F(x, y) \equiv F(x)$, Equation (1.28) and Euler’s method are precisely the same. We tabulate values for $(x_k, y_k)$, $k = 0, 1, \ldots, n$, as follows.

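$$
\begin{array}{lll}
x_0 & y_0 & y_0 \\
x_1 := x_0 + \Delta x & y_1 & y_0 + \Delta y = y_0 + F(x_0)\,\Delta x \\
x_2 := x_0 + 2\Delta x & y_2 & y_0 + F(x_0)\,\Delta x + F(x_0 + \Delta x)\,\Delta x \\
\vdots & \vdots & \vdots \\
x_n := x_0 + n\Delta x & y_n & y_0 + F(x_0)\,\Delta x + \cdots + F(x_0 + (n-1)\Delta x)\,\Delta x
\end{array}
$$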
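The inner sum (1.28) is easy to try numerically. The Python sketch below is an illustration under stated assumptions: the function name `left_sum_solution` and the sample integrand $F(x) = \cos x$ (for which $y = \sin x$ when $y(0) = 0$) are choices made here, not taken from the text.

```python
import math
from typing import Callable


def left_sum_solution(F: Callable[[float], float],
                      x0: float, y0: float, x: float, n: int) -> float:
    """Approximate y(x) = y0 + integral of F from x0 to x, as in (1.28)."""
    dx = (x - x0) / n
    total = y0
    for k in range(n):
        total += F(x0 + k * dx) * dx   # rectangle of height F(x_k), width dx
    return total


if __name__ == "__main__":
    # Illustrative choice (an assumption): F(x) = cos x with y(0) = 0.
    for n in (10, 100, 1000):
        print(n, left_sum_solution(math.cos, 0.0, 0.0, 1.0, n), math.sin(1.0))
```

Because each term is $F(x_k)\,\Delta x$ with $x_k$ the left end of its subinterval, the loop performs exactly the steps Euler’s method would perform for $y' = F(x)$.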

Euler’s method is simply a generalization of a first approximation, the inner sum, to an integral. In fact, if one defines an outer sum in a similar manner, it is possible to characterize the integrable functions as those whose inner and outer sums converge to a common number in the limit as $\Delta x \to 0$. In some sense, numerical solution of a differential equation is a generalization of numerical integration. The earliest attempts at solving differential equations by machine were via hard-wired electrical circuits and mechanical devices. These “differential analyzers” were analog computers and have been made obsolete by modern digital computers.

The techniques used to solve single equations using Euler’s

method can also be used to solve systems of simultaneous

first order differential equations. Such problems as

reduction of order and solving simultaneous first order

differential equations are a staple in every

elementary book on ordinary differential equations.

Problems

1. Use Euler’s method, with $\Delta x = 0.01$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = -y^2 + x^2$ such that $y_0 = 1$ when $x_0 = 1$. Compare with a solution from a software package.

2. Use Euler’s method, with $\Delta x = 0.01$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = -y^2 + x$ such that $y_0 = 1$ when $x_0 = 1$. Compare with a solution from a software package.

3. Use Euler’s method, with $\Delta x = 0.1$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = x + y$ such that $y_0 = 1$ when $x_0 = 0$. Compare with a solution from a software package.

Solutions

1. Use Euler’s method, with $\Delta x = 0.01$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = -y^2 + x^2$ such that $y_0 = 1$ when $x_0 = 1$. Compare with a solution from a software package.

We start with a BASIC program

100 x0 = 1 : y0 = 1
110 h = .01 : n = 50
120 x = x0 : y = y0
130 PRINT x, y
140 FOR k = 1 TO n
150 yprime = x * x - y * y : REM slope F(x, y) = -y^2 + x^2
160 x = x + h
170 y = y + yprime * h
180 PRINT x, y
190 NEXT k
200 END

The BASIC program yields y(1.5) = 1.210649

The Mathematical Software Program yields y(1.5) = 1.2129

2. Use Euler’s method, with $\Delta x = 0.01$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = -y^2 + x$ such that $y_0 = 1$ when $x_0 = 1$. Compare with a solution from a mathematical software package.

We start with a BASIC program

100 x0 = 1 : y0 = 1
110 h = .01 : n = 50
120 x = x0 : y = y0
130 PRINT x, y
140 FOR k = 1 TO n
150 yprime = x - y * y : REM slope F(x, y) = -y^2 + x
160 x = x + h
170 y = y + yprime * h
180 PRINT x, y
190 NEXT k
200 END

The BASIC program yields y(1.5) = 1.09031

The Mathematical Software Program yields y(1.5) = 1.09119

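3. Use Euler’s method, with $\Delta x = 0.1$, to find the value of $y$ when $x = 1.5$ on the solution curve of $y' = x + y$ such that $y_0 = 1$ when $x_0 = 0$. Compare with a solution from a software package.

We start with a BASIC program

100 x0 = 0 : y0 = 1
110 h = .1 : n = 10
120 x = x0 : y = y0
130 PRINT x, y
140 FOR k = 1 TO n
150 yprime = x + y : REM slope F(x, y) = x + y
160 x = x + h
170 y = y + yprime * h
180 PRINT x, y
190 NEXT k
200 END

With $n = 10$ steps of size $0.1$ the program stops at $x = 1.0$, so the values reported here are for $x = 1.0$ rather than $x = 1.5$:

The BASIC program yields y(1.0) = 3.187485

The Mathematical Software Program yields y(1.0) = 3.43658

From the professional software, we have, for $x = 0, 0.1, \ldots, 1.0$,

{{1.}, {1.11034}, {1.24281}, {1.39972},
{1.58365}, {1.79745}, {2.04424}, {2.32751},
{2.65109}, {3.01922}, {3.43658}}

Setting $n = 15$ carries the computation to $x = 1.5$, where Euler’s method gives $y \approx 5.8545$ against the exact value $2e^{1.5} - 2.5 \approx 6.4634$ obtained from the solution $y = 2e^x - x - 1$.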
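The figures quoted for Problem 3 can be cross-checked independently. The Python sketch below is an illustration, not the author’s program: the function names are hypothetical, and it relies on the closed form $y = 2e^x - x - 1$, which can be verified by substituting it into $y' = x + y$ with $y(0) = 1$. It runs Euler’s method with $\Delta x = 0.1$ for both 10 and 15 steps, that is, up to $x = 1.0$ and up to $x = 1.5$.

```python
import math


def euler_x_plus_y(h: float, n: int) -> float:
    """Euler's method for y' = x + y, y(0) = 1; returns the estimate at x = n*h."""
    x, y = 0.0, 1.0
    for _ in range(n):
        yprime = x + y
        x = x + h
        y = y + yprime * h
    return y


def exact(x: float) -> float:
    """Closed-form solution y = 2 e^x - x - 1 of y' = x + y, y(0) = 1."""
    return 2.0 * math.exp(x) - x - 1.0


if __name__ == "__main__":
    for n in (10, 15):
        x_end = 0.1 * n
        print(f"x = {x_end:.1f}: Euler {euler_x_plus_y(0.1, n):.6f}, "
              f"exact {exact(x_end):.6f}")
```

Comparing the two rows shows which value of $x$ a given step count actually reaches, which is worth confirming whenever a program’s output is quoted for a particular point.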

1.7

Existence theorems

Not every differential equation has a solution.

Look no further than the differential equation
$$ (y')^2 + y^2 + 1 = 0. $$
If there exists a number $x$ for which $y(x)$ is defined and real, then $y'(x)$ cannot be a real number. However, from what was just done in Section 1.6 one might guess that the differential equation
$$ \frac{dy}{dx} = F(x, y) \tag{1.29} $$
has a unique solution $y = f(x)$ which passes through a given initial point $(x_0, y_0)$.

Under appropriate assumptions concerning the function

F (x, y), existence of such a solution can indeed be

guaranteed. For the equation of the $n$th order,
$$ y^{(n)} = F\left(x, y, y', \ldots, y^{(n-1)}\right), \tag{1.30} $$
we expect that there will be a unique solution satisfying initial conditions:
$$ y(x_0) := y_0, \quad y'(x_0) := y'_0, \quad \ldots, \quad y^{(n-1)}(x_0) := y^{(n-1)}_0, \tag{1.31} $$
where each of $x_0, y_0, \ldots, y^{(n-1)}_0$ is a real number.

The following fundamental theorem justifies these

expectations.

Theorem 2 Existence Theorem. Let $F\left(x, y, y', \ldots, y^{(n-1)}\right)$ be a function of the variables $x, y, y', \ldots, y^{(n-1)}$, defined and continuous when
$$ |x - x_0| < h, \quad |y - y_0| < h, \quad \ldots, \quad |y^{(n-1)} - y^{(n-1)}_0| < h, $$
and having continuous first partial derivatives with respect to $y, y', \ldots, y^{(n-1)}$. Then there exists a solution $y = f(x)$ of the differential equation (1.30), defined in some interval $|x - x_0| < h_1$, and satisfying the initial conditions (1.31). Furthermore, the solution is unique; that is, if $y = g(x)$ is a second solution satisfying (1.31), then $f(x) \equiv g(x)$ wherever both functions are defined.

A proof of this theorem is long and technical. It is

included here because several of the concepts that appear

in the proof are frequently referred to in physics,

engineering, and computer science applications. The student should at least read through the proof to become acquainted with the terminology and with the thread of the argument. In a course on advanced calculus, proofs such as this are commonplace and require memorization.

Proof: We will prove this theorem for first order equations, that is, for $n = 1$,
$$ y' = F(x, y). $$
Let $M$ denote the maximum of $|F(x, y)|$ for $|x - x_0| \le h/2$ and $|y - y_0| \le h/2$. We choose a smaller closed rectangle inside the original open rectangle to ensure that $|F(x, y)|$ attains its maximum there. The closed rectangle may be denoted as the set
$$ R = \{\, (x, y) \mid x_0 - h/2 \le x \le x_0 + h/2,\ y_0 - h/2 \le y \le y_0 + h/2 \,\}. $$
(We have to have a closed and bounded set. A function like $g(x) = 1/x$ is continuous at every point in the open interval $(0, 1)$ but it is unbounded!) Let
$$ h_1 = \frac{h}{2(M + 1)}. $$

The fact that F (x, y) has a continuous first

partial derivative with respect to y in R

says that it satisfies a Lipschitz condition

with respect to y in R. This condition is

a technical piece of mathematics; however, it is

found in every advanced textbook in science and

engineering and no course in

differential equations would be complete without

mentioning it. One should be encouraged by

the fact that there are several cases in the

study of shock waves and denotation where the

35

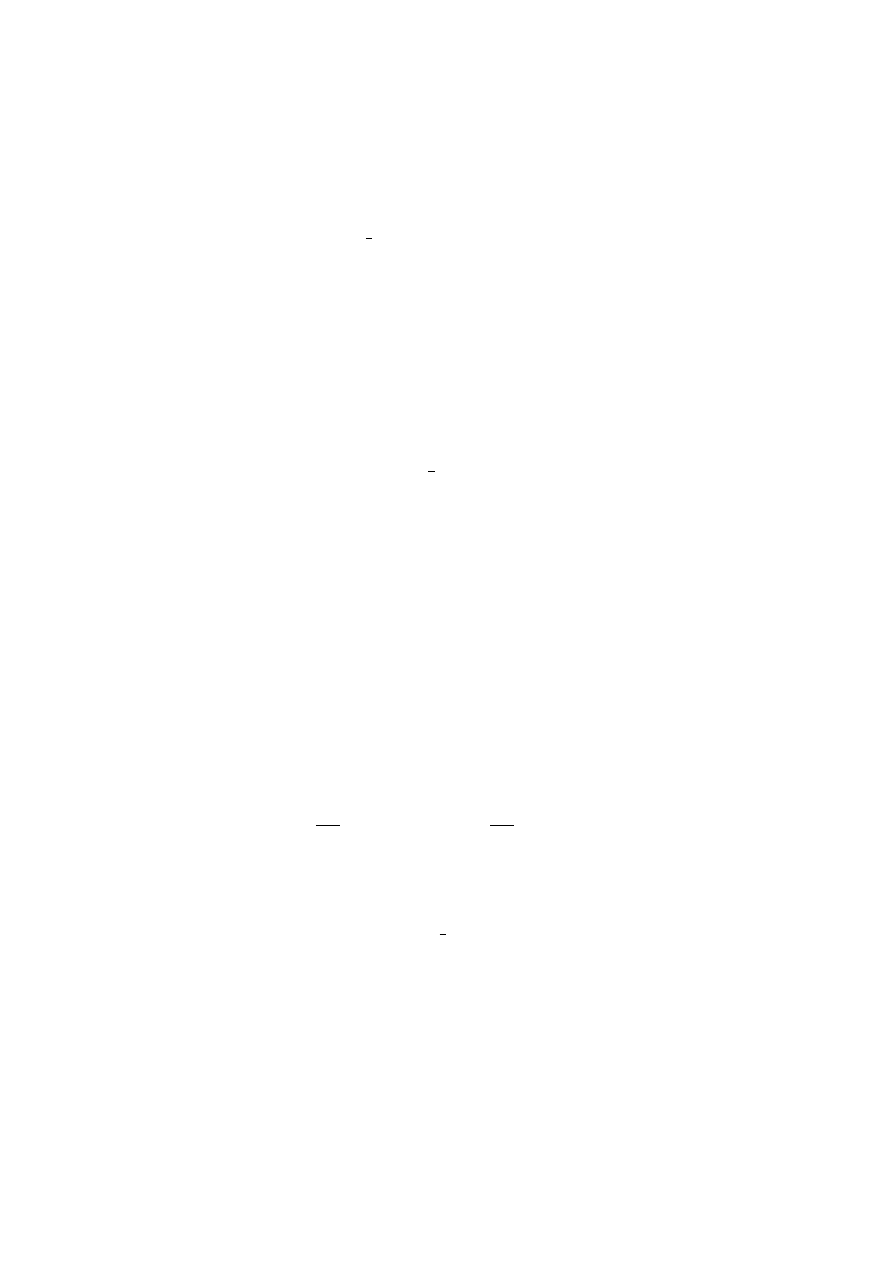

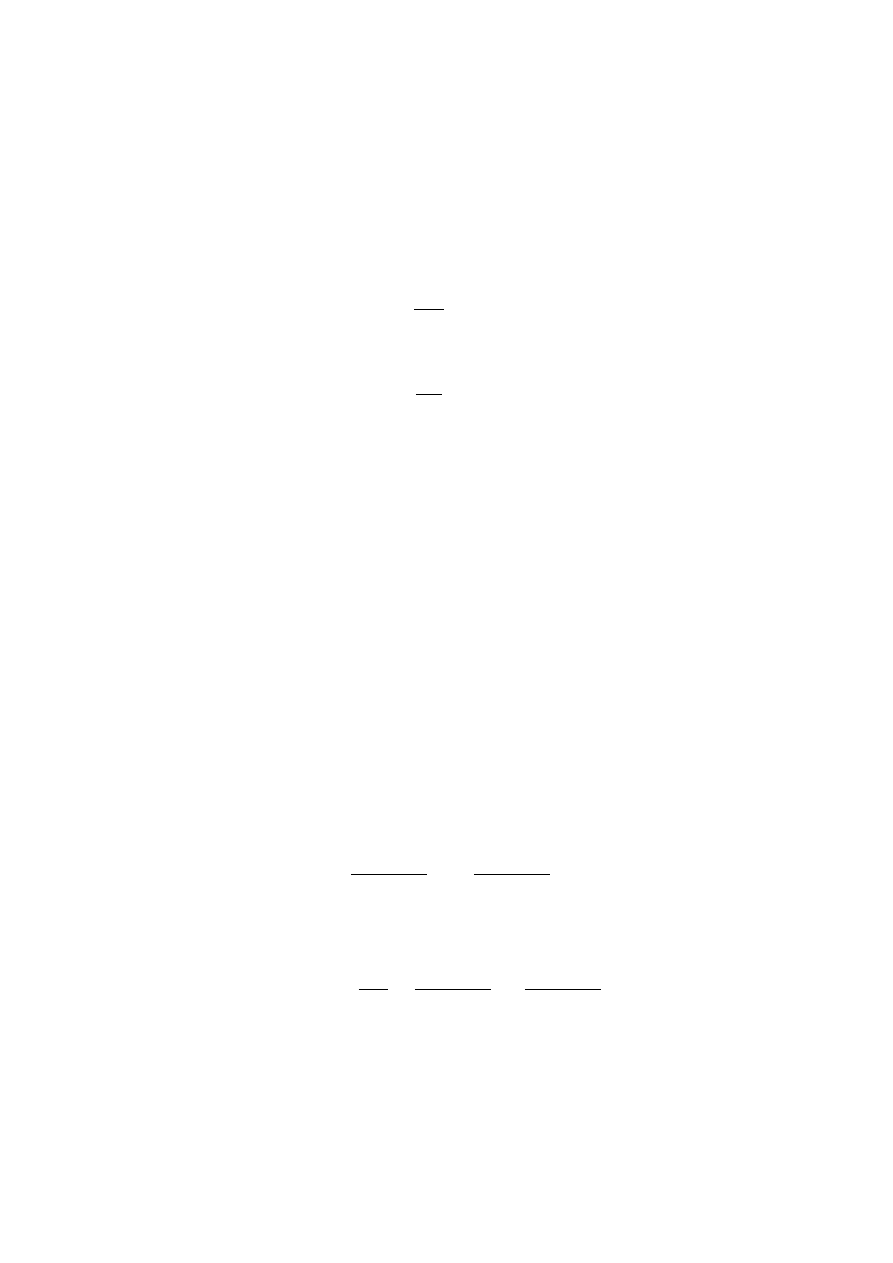

-

6

y

x

y

0

y

0

−h/2

y

0

+h/2

x

0

−h

1

x

0

+h

1

x

0

(x

0

, y

0

)

•

?

6

2h

Figure 1.5:

The Closed Region R

function F (x, y) does not have

a partial derivative with respect to y but

does satisfy the Lipschitz condition. By a Lipschitz

condition, we mean that

there exists a positive number K such that

|y(x) − y

0

| ≤ K |x − x

0

|

for each x ∈ [x

0

− h

1

, x

0

+ h

1

].

We will demonstrate in a solved problem that

if F

y

(x, y) ≡ ∂F (x, y)/∂y

is continuous in R, then F satisfies a Lipschitz

condition in F with respect to y.

Solving an initial value problem is the same as finding a continuous solution to the integral equation
$$ y(x) = y_0 + \int_{x_0}^{x} F(t, y(t))\,dt \qquad \text{for } |x - x_0| \le h_1. $$
The equivalence of the integral equation and the differential equation follows from the Fundamental Theorem of Calculus. For convenience, let $x_0 \le x \le x_0 + h_1$. A symmetric proof will hold for the case $x_0 - h_1 \le x \le x_0$.

We apply Picard’s method (of successive approximations).

This method is important for historical purposes and also

it is a must for any student who claims

to have completed a course in differential equations.

But it is not something that needs to be dwelt on. Just

remember that prior to the advent of computers, mathematicians

had to rely solely on such methods to solve differential

equations numerically.

The successive approximations are defined by
$$
\begin{aligned}
y_0(x) &:= y_0, \\
y_1(x) &:= y_0 + \int_{x_0}^{x} F(t, y_0(t))\,dt, \\
&\ \ \vdots \\
y_n(x) &:= y_0 + \int_{x_0}^{x} F(t, y_{n-1}(t))\,dt
\end{aligned}
$$
for $n = 1, 2, \ldots$. To prove existence, we have to show two things: (1) the sequence of functions $\{y_n\}_{n=0}^{\infty}$ converges pointwise to a limit function, $y(x)$; and (2) the pointwise limit, $y(x)$, is continuous on the interval $x_0 - h_1 \le x \le x_0 + h_1$.

If $|y_{n-1}(x) - y_0| \le h/2$ then
$$ |y_n(x) - y_0| \le \int_{x_0}^{x} |F(t, y_{n-1}(t))|\,dt \le (x - x_0) M \le h_1 M = \frac{hM}{2(M + 1)} \le \frac{h}{2}. $$
It follows by induction that $|y_n(x) - y_0| \le h/2$ for each positive integer $n$. From this, we observe that the Lipschitz condition applies to $F(x, y_n(x))$. Going back to the operational definition of $F_y$ and applying the mean value theorem for derivatives, we notice that
$$ |F(x, y_n(x)) - F(x, y_0(x))| \le M \cdot |y_n(x) - y_0(x)|, $$
where, enlarging $M$ if necessary, we take $M$ to bound $|F_y|$ as well as $|F|$ on $R$.

In order to show that the function sequence $\{y_n(x)\}_{n=0}^{\infty}$, $x_0 \le x \le x_0 + h_1$, converges uniformly to a continuous function $y(x)$ such that
$$ y(x) = y_0 + \int_{x_0}^{x} F(t, y(t))\,dt \qquad \text{for } |x - x_0| \le h_1, $$
we consider the infinite series
$$ y_0(x) + [y_1(x) - y_0(x)] + [y_2(x) - y_1(x)] + \cdots + [y_n(x) - y_{n-1}(x)] + \cdots. $$
Here $y_n(x)$ is the $n$th partial sum, since the finite series “telescopes.”

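$$ |y_1(x) - y_0(x)| \le M\, |x - x_0|. $$
$$ |y_2(x) - y_1(x)| \le \int_{x_0}^{x} |F(t, y_1(t)) - F(t, y_0(t))|\,dt; $$
applying the Lipschitz condition for $F$ (all the $y_n(x)$ are in the rectangle $R$), one gets
$$ |y_2(x) - y_1(x)| \le M \int_{x_0}^{x} |y_1(t) - y_0(t)|\,dt \le M \cdot M \int_{x_0}^{x} (t - x_0)\,dt = \frac{M^2 (x - x_0)^2}{2!} \le \frac{M^2 h_1^2}{2!}. $$
By induction, one obtains
$$ |y_n - y_{n-1}| \le \frac{M^n}{n!}\, h_1^n. $$
The infinite series
$$ \sum_{n=1}^{\infty} \frac{M^n}{n!}\, h_1^n $$
converges for all $h_1 \ge 0$. From the fact that $e^x = \sum_{n=0}^{\infty} x^n/n!$, we can even compute the limit: it is $e^{M h_1} - 1$. Since each term of the telescoping series is dominated by the corresponding term of this convergent series of constants, the telescoping series converges uniformly. We now define $y$ by passing to the limit of the sequence of approximations.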

$$
\begin{aligned}
y(x) &= \lim_{n \to \infty} y_n(x) \qquad \text{for all } x_0 - h_1 \le x \le x_0 + h_1 \\
&= y_0 + \lim_{n \to \infty} \int_{x_0}^{x} F(t, y_{n-1}(t))\,dt \\
&= y_0 + \int_{x_0}^{x} \lim_{n \to \infty} F(t, y_{n-1}(t))\,dt \\
&= y_0 + \int_{x_0}^{x} F(t, y(t))\,dt.
\end{aligned}
$$
The fact that $F(x, y)$ is continuous and that $\{y_n(x)\}$ is a uniformly convergent sequence justifies interchanging the limit and integral. This shows that there exists a solution. ($\exists\, y$ such that $y' = F(x, y)$.)

To prove uniqueness of the solution $y(x)$, we assume that $w(x)$ is a solution of $y' = F(x, y)$ with $w(x_0) = y_0$ for $x_0 \le x \le x_0 + h_1$. It must be true that
$$ w(x) = y_0 + \int_{x_0}^{x} F(t, w(t))\,dt $$
and
$$ |y_n(x) - w(x)| \le \int_{x_0}^{x} |F(t, y_{n-1}(t)) - F(t, w(t))|\,dt. $$
As before, we apply induction to show that
$$ |y_n(x) - w(x)| \le \frac{M^{n+1} h_1^{n+1}}{(n + 1)!}, \qquad x_0 \le x \le x_0 + h_1. $$
Passing to the limit as $n \to +\infty$,
$$ |y(x) - w(x)| \le 0. $$
Therefore, $y(x) \equiv w(x)$ for each $x \in [x_0, x_0 + h_1]$. This proves that the solution is unique.

♦
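Picard’s method, used in the proof above, can be carried out exactly for simple equations. The Python sketch below is an illustration only: the equation $y' = y$, $y(0) = 1$ and the helper name `picard_step` are assumptions chosen for the example. Each iterate is a polynomial, stored as a list of exact rational coefficients, and one step replaces $y_{n-1}$ by $1 + \int_0^x y_{n-1}(t)\,dt$.

```python
from fractions import Fraction
from typing import List


def picard_step(coeffs: List[Fraction]) -> List[Fraction]:
    """One Picard step for y' = y, y(0) = 1: returns 1 + integral from 0 to x.

    A polynomial is stored as [a_0, a_1, ...], meaning a_0 + a_1 x + ...
    """
    # Integrate term by term: a_k x^k becomes a_k x^(k+1) / (k+1).
    integral = [Fraction(0)] + [a / (k + 1) for k, a in enumerate(coeffs)]
    integral[0] += 1          # add the initial value y_0 = 1
    return integral


if __name__ == "__main__":
    y = [Fraction(1)]          # y_0(x) = 1
    for n in range(1, 5):
        y = picard_step(y)
        print(n, [str(c) for c in y])
```

The successive coefficient lists are the partial sums of the series $\sum x^n/n!$, so in this case the iterates converge to $e^x$, in agreement with the bound $M^n h_1^n / n!$ obtained in the proof.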

Example. Consider the differential equation
$$ y'' = e^{2x}. \tag{1.32} $$
Integrate twice to obtain
$$ y' = \tfrac{1}{2} e^{2x} + c_1, \qquad y = \tfrac{1}{4} e^{2x} + c_1 x + c_2. \tag{1.33} $$
Suppose that each of $y_0$, $y'_0$ is a real number, so that the initial values are
$$ x_0 := 0, \qquad y(0) := y_0, \qquad \text{and} \qquad y'(0) := y'_0. $$
From the above initial conditions, it is easy to compute the definite values for the arbitrary constants, $c_1$, $c_2$:
$$ c_1 = y'_0 - \tfrac{1}{2}, \qquad c_2 = y_0 - \tfrac{1}{4}. $$
Thus, the solution to the initial value problem is
$$ y = \tfrac{1}{4} e^{2x} + \left( y'_0 - \tfrac{1}{2} \right) x + \left( y_0 - \tfrac{1}{4} \right). \tag{1.34} $$

For the initial value problem of $F\left(x, y, y', \ldots, y^{(n)}\right) = 0$, we require that, at $x := x_0$, $y(x_0) := y_0$, $y'(x_0) := y'_0$, $\ldots$, $y^{(n-1)}(x_0) := y^{(n-1)}_0$, where each of $x_0$, $y_0$, $y'_0$, $\ldots$, $y^{(n-1)}_0$ is a real number. This will determine, in general, definite values for each of the $n$ arbitrary constants, $c_1, c_2, \ldots, c_n$. Recall from algebra that the equation of a straight line, $y = mx + b$ ($m$ is the slope and $b$ is the so-called $y$-intercept), can be determined in several ways: from two points, $(x_0, y_0)$, $(x_1, y_1)$ (where $x_0 \ne x_1$); from one point and the slope, $(x_0, y_0)$ and the real number $m$; by the slope-intercept method; etc. Likewise the $n$ arbitrary constants arising from the solution of an $n$th order ordinary differential equation can be determined by other means than by initial values. One method of determining the constants relies on data from more than one value of the independent variable, $x$. These are called boundary conditions as opposed to initial conditions. They are so called because they arose in physical applications of thermodynamics and vibrating strings where measurements could only be done on the edges, or boundaries, of the object being studied.

As an example of the use of boundary conditions, consider Equation (1.33) and require that
$$ y(0) = y_0 \qquad \text{and} \qquad y(1) = y_1. \tag{1.35} $$
Solving for $c_1$, $c_2$ yields
$$ c_2 = y_0 - \tfrac{1}{4}, \qquad c_1 = y_1 - y_0 + \tfrac{1}{4}\left(1 - e^2\right). $$
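The boundary conditions (1.35) amount to two linear equations in $c_1$ and $c_2$, which can be solved and checked numerically. In the Python sketch below the function names and the sample boundary values are assumptions made for illustration; the formula for $y$ is the general solution (1.33).

```python
import math
from typing import Tuple


def y(x: float, c1: float, c2: float) -> float:
    """General solution (1.33): y = e^{2x}/4 + c1*x + c2."""
    return 0.25 * math.exp(2.0 * x) + c1 * x + c2


def constants_from_boundary(y_at_0: float, y_at_1: float) -> Tuple[float, float]:
    """Solve y(0) = y_at_0 and y(1) = y_at_1 for (c1, c2)."""
    c2 = y_at_0 - 0.25                          # from y(0) = 1/4 + c2
    c1 = y_at_1 - 0.25 * math.exp(2.0) - c2     # from y(1) = e^2/4 + c1 + c2
    return c1, c2


if __name__ == "__main__":
    # Sample boundary values (an assumption for this sketch).
    c1, c2 = constants_from_boundary(2.0, -3.0)
    print(c1, c2, y(0.0, c1, c2), y(1.0, c1, c2))
```

Substituting the computed constants back into `y` at $x = 0$ and $x = 1$ reproduces the prescribed boundary values, which is a quick way to catch an index slip in formulas of this kind.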

From the above application of boundary values, one might be led to assume that for $n$ arbitrary constants one need only apply $n$ boundary values to solve the problem. This is not true. Take, for instance, the differential equation
$$ y'' = -y + 2\cos x, \tag{1.36} $$
whose general solution is
$$ y = c_1 \cos x + c_2 \sin x + x \sin x. $$
If we try to apply the boundary conditions
$$ y(0) = 0 \qquad \text{and} \qquad y(\pi) = 1 $$
to this general solution we have a problem. Since $y(0) = c_1$ and $y(\pi) = -c_1$, the arbitrary constant $c_1$ must be equal to $0$ and to $-1$. Clearly additional conditions must be applied for boundary value problems to make sense, that is, to be consistent. Indeed, this is an old problem.

The theorems for boundary value problems

are complicated. Some of the more elementary

boundary value problems will be dealt with in a later

chapter.

1.8

Systems of Equations

There are applications where two or more functions share a common independent variable. In a typical problem, one might encounter a system of equations as follows
$$
\begin{aligned}
y'(x) &= y(x) + z(x), \\
z'(x) &= 2y(x),
\end{aligned} \tag{1.37}
$$
where each of $y$ and $z$ is a function of $x$ alone. The solution to the above system of equations is
$$
\begin{aligned}
y(x) &= c_1 e^{2x} + c_2 e^{-x}, \\
z(x) &= c_1 e^{2x} - 2 c_2 e^{-x},
\end{aligned}
$$
as can be verified by differentiation (with respect to $x$) and substitution. In later chapters we will learn routine methods for solving such equations. This is similar to situations encountered in algebra, and it won’t be surprising that many of the techniques from algebra will carry over.

One particularly important feature of systems of differential equations is their application to a single ordinary differential equation of order two or higher. One can reduce a second order equation $y'' = \tilde{F}(x, y, y')$ to two simultaneous first order equations by the following substitution:
$$ w := \frac{dy}{dx}, \qquad \frac{dw}{dx} := \tilde{F}(x, y, w). $$
The above is a special case of a more general concept called the method of reduction of order. It will also be considered in more detail in a later chapter.
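Once a second order equation has been rewritten as a pair of first order equations, Euler’s method applies to the pair just as it does to a single equation. The Python sketch below is an illustration under stated assumptions: the function name `euler_second_order` and the test equation $y'' = -y$ with $y(0) = 0$, $y'(0) = 1$ (whose solution is $y = \sin x$) are choices made here, not taken from the text.

```python
import math
from typing import Callable, Tuple


def euler_second_order(F: Callable[[float, float, float], float],
                       x0: float, y0: float, w0: float,
                       h: float, n: int) -> Tuple[float, float, float]:
    """Euler's method for y'' = F(x, y, y') via the system y' = w, w' = F(x, y, w)."""
    x, y, w = x0, y0, w0
    for _ in range(n):
        dy = w * h               # increment of y uses the current slope w
        dw = F(x, y, w) * h      # increment of w uses the current F
        x, y, w = x + h, y + dy, w + dw
    return x, y, w


if __name__ == "__main__":
    # Illustrative equation (an assumption): y'' = -y, y(0) = 0, y'(0) = 1.
    x, y, w = euler_second_order(lambda x, y, w: -y, 0.0, 0.0, 1.0, 0.001, 1000)
    print(x, y, math.sin(1.0), w, math.cos(1.0))
```

Both increments are computed from the old values before either variable is updated, which is what makes this the direct two-variable analog of the single-equation recurrence.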

There is also one instance where an equation of higher degree can be solved by inspection. Consider the Clairaut equation
$$ y = x y' + G(y') $$
where $G(\cdot)$ is an arbitrary function. A solution is found immediately to be a one-parameter family of curves
$$ y = c_1 x + G(c_1). $$
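The family of straight lines can be checked by direct substitution. The short derivation below is a worked illustration; the particular choice $G(p) = p^2$ is an assumption made here for concreteness and does not come from the text.

```latex
% Substituting y = c_1 x + G(c_1) into the Clairaut equation:
\begin{align*}
  y  &= c_1 x + G(c_1) \quad\Longrightarrow\quad y' = c_1, \\
  x y' + G(y') &= c_1 x + G(c_1) = y .
\end{align*}
% Illustrative instance with G(p) = p^2:
\begin{align*}
  y = x y' + (y')^2 \quad\text{has the solutions}\quad y = c_1 x + c_1^2 .
\end{align*}
```

Because $y'$ is the constant $c_1$ along each line, the arbitrary function $G$ is only ever evaluated at $c_1$, which is why no assumption on $G$ is needed for this check.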

We will state an analog to the existence theorem in Section 1.7 for systems of $n$ first order equations. Let
$$
\begin{aligned}
\frac{dy_1}{dx} &= F_1(x, y_1, y_2, \ldots, y_n), \\
\frac{dy_2}{dx} &= F_2(x, y_1, y_2, \ldots, y_n), \\
&\ \ \vdots \\
\frac{dy_n}{dx} &= F_n(x, y_1, y_2, \ldots, y_n),
\end{aligned} \tag{1.38}
$$
be $n$ simultaneous equations in the $n$ unknown functions $y_1, y_2, \ldots, y_n$ of $x$. Stating the theorem for two unknowns, $y$, $z$, is sufficient. It is clear how the theorem will generalize for $n$ unknown functions.
$$ \frac{dy}{dx} = F(x, y, z), \qquad \frac{dz}{dx} = G(x, y, z). \tag{1.39} $$

Theorem 3 Suppose $R$ is the rectangular region $|x - x_0| < h$, $|y - y_0| < h$, $|z - z_0| < h$, where $h > 0$. If each of the functions $F$ and $G$ is continuous in $R$ and each has continuous first partial derivatives, $F_y$, $F_z$, $G_y$, $G_z$, with respect to $y$ and $z$ in $R$, then there exists an $h_1 > 0$ and there exists a unique solution
$$ y = f(x), \qquad z = g(x), \qquad |x - x_0| < h_1, \tag{1.40} $$
of the system (1.39) which contains the point $(x_0, y_0, z_0)$.

A proof of this theorem can be found

in many advanced textbooks on ordinary

differential equations.

(See [15] in the bibliography.)

The previous theorem, Theorem 2, for one unknown function, is a special case of this theorem, because the first order case of Equation (1.30) is simply Equation (1.38) with $n = 1$.

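Higher order systems are also possible, such as:
$$
\begin{aligned}
\frac{d^2 x}{dt^2} &= F_1\!\left(t, x, y, \frac{dx}{dt}, \frac{dy}{dt}\right), \\
\frac{d^2 y}{dt^2} &= F_2\!\left(t, x, y, \frac{dx}{dt}, \frac{dy}{dt}\right).
\end{aligned} \tag{1.41}
$$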

Recall Newton’s law (force = mass × acceleration).

When this formula is written for a system of particles,

second order equations (1.41) occur.

When $x$, $y$ are each functions of a parameter $t$ (time), one typically denotes $x'(t)$, $y'(t)$ as $\dot{x}$, $\dot{y}$ and $x''(t)$, $y''(t)$ as $\ddot{x}$, $\ddot{y}$. Under hypotheses analogous to those of Theorem 3, there exists a unique solution for (1.41) satisfying
$$ t = t_0, \quad x(t_0) := x_0, \quad y(t_0) := y_0, \quad \dot{x}(t_0) := \dot{x}_0, \quad \dot{y}(t_0) := \dot{y}_0, \tag{1.42} $$
where each of $t_0$, $x_0$, $\dot{x}_0$, $y_0$, and $\dot{y}_0$ is a real number. For a particle moving along a constrained path and subject to known forces, one can determine the position and velocity at any time $t \ge t_0$ given the initial position $x_0$, $y_0$ and the initial velocity $\dot{x}_0$, $\dot{y}_0$. Electrical circuits obey similar laws.

1.9

The General Solution

It is important from both a theoretical and a practical

perspective to derive one formula for a given differential

equation which contains all possible solutions. Such a

formula is called the general solution

of the differential equation. From the existence theorem,

found in Section 1.7, we can be

assured that, under very general conditions, solutions

do exist. Notice that $y = c_1 e^{3x} + c_2 e^{-3x}$ is the general solution to $y'' - 9y = 0$. The fact that $y = c_1 e^{3x} + c_2 e^{-3x}$ is a solution is readily verified by successive differentiation. The fact that it comprises all the solutions (two, in fact, added together or superimposed) can be determined by calculation. We take the most general set of initial conditions, namely, for $x := x_0$, we set $y(x_0) := y_0$, $y'(x_0) := y'_0$, for any real number set $\{ x_0, y_0, y'_0 \}$. Without loss of generality, let $x_0 := 0$.
$$
\begin{aligned}
c_1 + c_2 &= y_0, \\
3c_1 - 3c_2 &= y'_0, \\
c_1 &= y_0 - c_2, \\
3y_0 - 3c_2 - 3c_2 &= y'_0, \\
6c_2 &= 3y_0 - y'_0, \\
c_2 &= \tfrac{1}{2} y_0 - \tfrac{1}{6} y'_0,
\end{aligned}
$$
and $c_1 = \tfrac{1}{2} y_0 + \tfrac{1}{6} y'_0$. Thus $c_1$, $c_2$ are uniquely determined for each choice of real numbers $y_0$, $y'_0$. Therefore, $y = c_1 e^{3x} + c_2 e^{-3x}$ is the general solution of $y'' - 9y = 0$.
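The formulas for $c_1$ and $c_2$ can be checked by substituting them back into the solution and its derivative at $x = 0$. In the Python sketch below the function names and the sample initial values are assumptions made for illustration.

```python
import math
from typing import Tuple


def constants(y0: float, yp0: float) -> Tuple[float, float]:
    """Constants with y(0) = y0, y'(0) = yp0 for y = c1 e^{3x} + c2 e^{-3x}."""
    c1 = y0 / 2.0 + yp0 / 6.0
    c2 = y0 / 2.0 - yp0 / 6.0
    return c1, c2


def y(x: float, c1: float, c2: float) -> float:
    return c1 * math.exp(3.0 * x) + c2 * math.exp(-3.0 * x)


def yprime(x: float, c1: float, c2: float) -> float:
    return 3.0 * c1 * math.exp(3.0 * x) - 3.0 * c2 * math.exp(-3.0 * x)


if __name__ == "__main__":
    # Sample initial values (an assumption for this sketch).
    c1, c2 = constants(2.0, -3.0)
    print(c1, c2, y(0.0, c1, c2), yprime(0.0, c1, c2))
```

Any pair of real initial values produces exactly one pair of constants, which is the computational content of the statement that the two-parameter family is the general solution.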

To verify that $y = f(x; c_1, c_2)$ is a general solution for the second order differential equation
$$ y'' = \tilde{F}(x, y, y'), \tag{1.43} $$
one has to take successive derivatives and substitute them into Equation (1.43), ensuring that it is an identity, and one has to ensure that for every real number set $\{ x_0, y_0, y'_0 \}$ for which $\tilde{F}$ has continuous first partial derivatives there exist numbers $c_1$, $c_2$ such that
$$ y_0 = f(x_0, c_1, c_2) \qquad \text{and} \qquad y'_0 = f_x(x_0, c_1, c_2). $$
The same procedure applies to systems of equations as well as to higher order differential equations.

The existence theorem cannot be weakened in its

assumption on the continuity of F and its partial

derivatives.