
Universidad de Costa Rica

Image Processing and Computer Vision Research Laboratory

Field Tests on Flat Ground of an Intensity-Difference Based Monocular Visual Odometry Algorithm for Planetary Rovers

School of Electrical Engineering, Dept. of Electronics and Telecommunications


| | mean | standard deviation | min | max |
|---|---|---|---|---|
| Observation points per image | 15906 | 67.74 | 15775 | 15999 |
| Iterations per image | 14.88 | 1.89 | 12.33 | 19.09 |
| Processing time per image (in seconds) | 0.06 | 0.006 | 0.05 | 0.08 |
| Absolute position error | 0.9% | 0.45% | 0.31% | 2.12% |

Table 1: Summary of experimental results.

Figure 1: Clearpath Robotics Husky A200 rover platform and Trimble® S3 robotic total station used for experimental validation.

Abstract

This contribution presents the experimental results of testing a monocular visual odometry algorithm on a real rover platform over flat terrain for localization in outdoor sunlit conditions. The algorithm computes the three-dimensional (3D) position of the rover by integrating its motion over time. The motion is directly estimated by maximizing a likelihood function that is the natural logarithm of the conditional probability of intensity differences measured at different observation points between consecutive images.

It does not require determining the optical flow or establishing correspondences as an intermediate step. The images are captured by a monocular video camera mounted on the rover, looking to one side and tilted downwards to the planet's surface. Most of the experiments were conducted under severe global illumination changes. Comparisons with ground truth data have shown an average absolute position error of 0.9% of distance traveled, with an average processing time per image of 0.06 seconds.

Intensity-Difference Based Monocular Visual Odometry Algorithm

• Alternative algorithm: It was proposed in [1] as an alternative to the long-established feature based stereo visual odometry algorithms [2, 3, 4, 5].

• Positioning computation: The rover's 3D position is computed by integrating the rover's frame to frame 3D motion ΔB over time.

• Type of sensor: The frames are taken by a single video camera rigidly attached to the rover (see Fig. 1 and Fig. 2.a).

• Direct motion estimation: The rover's frame to frame 3D motion ΔB is directly estimated by maximizing the likelihood function of the intensity differences at the N key observation points. No correspondences between features are established and no optical flow is solved as an intermediate step; only the frame to frame intensity differences measured at the N key observation points are evaluated. The key observation points are image points with high linear intensity gradients.

• Compact solution: The resulting frame to frame estimates of the rover's 3D motion have the following compact form:

$$\Delta B = \left(O^{T} O\right)^{-1} O^{T}\, FD \qquad (1)$$

where O is the observation matrix and FD is a vector with the intensity differences measured at the N observation points.

• Iterative algorithm: Since the observation matrix O results from several truncated Taylor series expansions (i.e. approximations), Eq. (1) needs to be applied iteratively to improve the reliability and accuracy of the estimation.
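The iterative use of Eq. (1) can be illustrated on a toy problem. The sketch below is not the implementation tested in this work (that one is written in C and estimates the rover's full 3D motion). It assumes a synthetic analytic image, a pure 2D image-plane translation as the unknown motion, and an observation matrix built from numerical intensity gradients. All function names and constants are hypothetical.

```python
import math

def intensity(x, y):
    """Synthetic smooth image (an assumption standing in for a camera frame)."""
    return math.sin(0.7 * x) + math.cos(0.5 * y) + 0.5 * math.sin(0.3 * (x + y))

def gradient(x, y, h=1e-4):
    """Central-difference intensity gradient at (x, y)."""
    gx = (intensity(x + h, y) - intensity(x - h, y)) / (2 * h)
    gy = (intensity(x, y + h) - intensity(x, y - h)) / (2 * h)
    return gx, gy

def select_key_points(candidates, min_gradient=0.3):
    """Keep only candidate points whose gradient magnitude is high."""
    return [p for p in candidates if math.hypot(*gradient(*p)) >= min_gradient]

def solve_eq1(O, FD):
    """Evaluate (O^T O)^-1 O^T FD for an N x 2 observation matrix O."""
    a = sum(r[0] * r[0] for r in O)
    b = sum(r[0] * r[1] for r in O)
    d = sum(r[1] * r[1] for r in O)
    u = sum(r[0] * f for r, f in zip(O, FD))
    v = sum(r[1] * f for r, f in zip(O, FD))
    det = a * d - b * b
    return (d * u - b * v) / det, (a * v - b * u) / det

def estimate_shift(points, true_shift, iterations=10):
    """Recover a 2D shift from intensity differences by repeating Eq. (1)."""
    tx, ty = true_shift
    ex, ey = 0.0, 0.0  # current motion estimate
    for _ in range(iterations):
        O, FD = [], []
        for x, y in points:
            observed = intensity(x - tx, y - ty)   # second frame
            predicted = intensity(x - ex, y - ey)  # first frame moved by the estimate
            gx, gy = gradient(x - ex, y - ey)
            FD.append(observed - predicted)        # remaining intensity difference
            O.append((-gx, -gy))                   # first-order Taylor term
        dx, dy = solve_eq1(O, FD)
        ex, ey = ex + dx, ey + dy                  # refine the estimate
    return ex, ey

candidates = [(float(i), float(j)) for i in range(10) for j in range(10)]
key_points = select_key_points(candidates)
estimate = estimate_shift(key_points, true_shift=(0.3, -0.2))
```

Because each pass re-linearizes around the current estimate, the truncation error of the Taylor expansion shrinks from one iteration to the next, which is why a single application of Eq. (1) is generally not enough.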

Problem Statement

• Field tests missing: Although the above intensity-difference based monocular visual odometry algorithm has been extensively tested with synthetic data in [1], an experimental validation of the algorithm on a real rover platform in outdoor sunlit conditions is still missing.

Main Contribution

• To provide first field test results: This paper's main contribution is to provide the results of the first outdoor experiments towards validation of the algorithm. They were obtained, for now, on surfaces of little geometrical complexity such as flat ground, and help to clarify whether the algorithm really does what it is intended to do in real outdoor situations under severe global illumination changes.

Results

The intensity-difference based monocular visual odometry algorithm has been implemented in the programming language C and tested on a Clearpath Robotics Husky A200 rover platform (see Fig. 1). In total, 343 experiments were carried out over flat paver sidewalks only, under severe global illumination changes due to cumulus clouds passing fast across the sun. Special care was taken to avoid the rover's own shadow in the scene. During each experiment, the rover is commanded to drive on a predefined path at a constant velocity of 3 cm/sec over a paver sidewalk, usually a straight segment from 1 to 12 m in length or a clockwise arc from 45 to 280 degrees with 2.5 m radius, while a single camera with a real time image acquisition system captures images at 15 fps and stores them in the onboard computer. The camera has an image resolution of 640x480 pixel² and a horizontal field of view of 43 degrees. It is located 77 cm above the ground, looking to the left side of the rover and tilted downwards 37 degrees (see Fig. 1 and Fig. 2.a).
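The setup figures above give a rough sense of how far the ground pattern moves in the image between consecutive frames. The following sketch is only a back-of-the-envelope estimate. It assumes an ideal pinhole camera without lens distortion, perfectly flat ground, an optical axis perpendicular to the driving direction, and a ground point seen at the image center. The function names are hypothetical.

```python
import math

# Values taken from the experimental setup described above.
IMAGE_WIDTH_PX = 640
HORIZONTAL_FOV_DEG = 43.0
CAMERA_HEIGHT_M = 0.77
TILT_DOWN_DEG = 37.0
SPEED_M_PER_S = 0.03
FRAME_RATE_HZ = 15.0

def focal_length_px(width_px, fov_deg):
    """Pinhole focal length in pixels from the horizontal field of view."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)

def range_along_optical_axis(height_m, tilt_deg):
    """Distance from the camera to flat ground along the optical axis."""
    return height_m / math.sin(math.radians(tilt_deg))

focal_px = focal_length_px(IMAGE_WIDTH_PX, HORIZONTAL_FOV_DEG)
range_m = range_along_optical_axis(CAMERA_HEIGHT_M, TILT_DOWN_DEG)

# Distance driven between two consecutive frames.
step_m = SPEED_M_PER_S / FRAME_RATE_HZ

# Approximate image displacement of a ground point at the image center.
shift_px = focal_px * step_m / range_m
```

Under these assumptions the rover advances 2 mm per frame, and the resulting image displacement is on the order of one pixel, a regime in which first-order approximations of the intensity signal are plausible.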

Simultaneously, a Trimble® S3 robotic total station (robotic theodolite with a laser range sensor) tracks a prism rigidly attached to the rover and measures its 3D position with high precision (< 5 mm) every second. After that, the intensity-difference based monocular visual odometry algorithm is applied to the captured image sequence. Then, the prism trajectory is computed from the rover's estimated 3D motion. Finally, it is compared with the ground truth prism trajectory delivered by the robotic total station.
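Computing a trajectory from frame to frame motion estimates amounts to chaining rigid motions. The sketch below shows this for a simplified planar case (x, y, heading). The algorithm itself estimates 3D motion, and the offset between rover and prism is ignored here. The per-frame motion is synthetic, generated for a clockwise arc of 280 degrees with 2.5 m radius. The number of frames and all names are assumptions made for illustration.

```python
import math

def compose(pose, motion):
    """Apply a body-frame motion (dx, dy, dtheta) to a world-frame pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = motion
    return (x + math.cos(theta) * dx - math.sin(theta) * dy,
            y + math.sin(theta) * dx + math.cos(theta) * dy,
            theta + dtheta)

def integrate(motions, start=(0.0, 0.0, 0.0)):
    """Chain frame to frame motions into a list of poses."""
    trajectory = [start]
    for motion in motions:
        trajectory.append(compose(trajectory[-1], motion))
    return trajectory

RADIUS_M = 2.5
TOTAL_ANGLE_RAD = math.radians(280.0)
N_FRAMES = 6000  # assumption: number of frame pairs along the arc

# Negative heading change means a clockwise turn.
dtheta = -TOTAL_ANGLE_RAD / N_FRAMES

# Exact body-frame displacement along a circular arc (x forward, y to the left).
step = (RADIUS_M * math.sin(-dtheta),
        -RADIUS_M * (1.0 - math.cos(dtheta)),
        dtheta)

trajectory = integrate([step] * N_FRAMES)
end_x, end_y, end_theta = trajectory[-1]
```

With noise-free motions the integrated end pose matches the analytic arc. With real estimates, every small per-frame error is carried into all later poses, which is why the error of visual odometry is commonly reported relative to the distance traveled.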

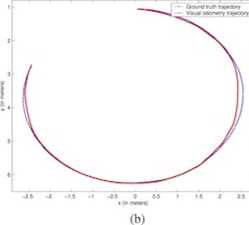

All the experiments were performed on an Intel® Core™ i5 at 3.1 GHz with 12.0 GB RAM. The main experimental results are summarized in Table 1. The tracking was not lost in any of the experiments. As an example, Fig. 2.b depicts the visual odometry trajectory and the robotic total station trajectory for path number 334, an arc segment, out of the 343 different paths driven by the rover during the experiments.

Figure 2: (a) Example of an image with resolution 640x480 pixel² captured during experiment number 334. The camera is located 77 cm above the ground, looking to the left side of the rover and tilted downwards 37 degrees. (b) Trajectory obtained by visual odometry (in red) and corresponding ground truth trajectory (in blue) for experiment number 334. In the experiment the rover was commanded to drive a clockwise arc of 280 degrees with a radius of 2.5 m on a paver sidewalk.
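A position error expressed as a percentage of distance traveled can be computed as in the sketch below. The exact definition used for Table 1 is not spelled out here, so the code assumes one common convention: the distance between the final estimated and final ground truth positions, divided by the ground truth path length. The two short trajectories are hypothetical numbers chosen only to show the scale, not measured data.

```python
import math

def path_length(points):
    """Sum of straight-line distances between consecutive 3D points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

def position_error_percent(estimated, ground_truth):
    """End-point error as a percentage of the ground truth distance traveled."""
    error = math.dist(estimated[-1], ground_truth[-1])
    return 100.0 * error / path_length(ground_truth)

# Hypothetical 12 m straight segment (x, y, z in meters), for illustration only.
ground_truth = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (8.0, 0.0, 0.0), (12.0, 0.0, 0.0)]
estimated = [(0.0, 0.0, 0.0), (4.0, 0.03, 0.0), (8.0, 0.06, 0.0), (12.0, 0.108, 0.0)]

error_pct = position_error_percent(estimated, ground_truth)
```

In this made-up case an end-point deviation of 10.8 cm after 12 m corresponds to 0.9% of distance traveled, which gives a feel for what the mean value in Table 1 means on the longest straight segments.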

Conclusions

• Processing time per image and accuracy so far: The monocular visual odometry algorithm proposed in [1] was tested on a real rover platform for localization in outdoor sunlit conditions, even under severe global illumination changes, over flat terrain, along straight lines and gentle arcs at a constant velocity, without the presence of shadows. After comparing the results with the corresponding ground truth data, we conclude that the algorithm is able to deliver the rover's position on average 0.06 seconds after an image has been captured, with an average absolute position error of 0.9% of distance traveled.

• So far so good: Although the experiments so far have been only on flat ground, these results closely resemble those achieved by known traditional feature based stereo visual odometry algorithms, whose absolute position errors are within the range of 0.15% to 2.5% of distance traveled [2, 3, 4, 5].

Forthcoming field tests

• Field tests on rough terrain: In the future, the algorithm will be tested over different types of terrain and geometries, and it will also be made robust to shadows.

References

[1] G. Martinez, "Intensity-Difference Based Monocular Visual Odometry for Planetary Rovers", in New Development in Robot Vision, vol. 23 of the series Cognitive Systems Monographs. Berlin, Heidelberg: Springer Verlag, 2014, ch. 10, pp. 181-198.

[2] A. Howard, "Real-time Stereo Visual Odometry for Autonomous Ground Vehicles", in IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, Nice, France, 2008 Sept. 22-26, pp. 3946-3952.

[3] M. Maimone, Y. Cheng, L. Matthies, "Two Years of Visual Odometry on the Mars Exploration Rovers", J. of Field Robotics, vol. 24, no. 3, pp. 169-186, Mar. 2007.

[4] D. Nister, O. Naroditsky, J. Bergen, "Visual Odometry for Ground Vehicle Applications", J. of Field Robotics, vol. 23, no. 1, pp. 3-20, Jan. 2006.

[5] P. Corke, D. Strelow, S. Singh, "Omnidirectional Visual Odometry for a Planetary Rover", in IEEE Int. Conf. on Intelligent Robots and Systems, Sendai, Japan, 2004 28 Sept.-2 Oct., pp. 4007-4012.

Acknowledgements

This work was supported by the University of Costa Rica. Thanks to Reg Willson from the NASA Jet Propulsion Laboratory for kindly delivering the implementation of Tsai's coplanar calibration algorithm, which was used in the experiments. Thanks also to Esteban Mora for helping transport the equipment to and from the test locations and for helping collect the data.


www.UCr.ac.cr

IAPR MVA-2017, May 8-12, 2017, Nagoya, Japan
