ISSN 1054-6618, Pattern Recognition and Image Analysis, 2006, Vol. 16, No. 1, pp. 19–22. © Pleiades Publishing, Inc., 2006.

K

-Local Hyperplane Distance Nearest-Neighbor Algorithm

and Protein Fold Recognition

1

O. G. Okun

Machine Vision Group, Infotech Oulu and Department of Electrical and Information Engineering,

University of Oulu, P.O. Box 4500, FIN-90014, Oulu, Finland

e-mail: oleg@ee.oulu.fi

Abstract

—Two proteins may be structurally similar but not have significant sequence similarity. Protein fold

recognition is an approach usually applied in this case. It does not rely on sequence similarity and can be

achieved with relevant features extracted from protein sequences. In this paper, we experiment with the

K

-local

hyperplane distance nearest-neighbor algorithm [8] applied to the protein fold recognition and compare it with

other methods. Preliminary results obtained on a real-world dataset [3] demonstrate that this algorithm can out-

perform many other methods tested on the same dataset.

DOI:

10.1134/S1054661806010068

Received October 25, 2004

1

INTRODUCTION

A protein is an amino acid sequence. Classifying the

protein structure is a very important operation, since

characteristics of known proteins can be used to predict

the structure of new proteins. Protein-fold recognition

is an approach to protein structure discovery without

relying on sequence similarity. It is worthy of consider-

ation, since two proteins may be structurally similar but

have no significant sequence similarity. Given the rele-

vant features extracted from protein sequences,

machine-learning methods can be readily applied for

this task.

The protein fold is a common 3D pattern with the

same major secondary structure elements in the same

arrangement and with the same topological connec-

tions. Different folds can be grouped into four struc-

tural classes [5]:

α

,

β

,

α

/

β

, and

α

+

β

.

In this paper, we explore the

K

-local hyperplane dis-

tance nearest-neighbor (HKNN) algorithm when test-

ing it on the protein fold datasets used by Ding and

Dubchak [3]. HKNN is a modification of the conven-

tional

K

-nearest-neighbor (KNN), and it has already

shown promising results against support vector

machine (SVM) [8]. However, it has not yet been

applied to any bioinformatics tasks. The goal of this

paper, therefore, is to compare HKNN with other meth-

ods that have been employed with the above-mentioned

protein datasets.

1

This article was submitted by the author in English.

DATASETS

Two datasets derived from the SCOP (structural

classification of proteins) database [5] were used.

These datasets are available online

(http://crd.lbl.gov/~cding/protein), and their detailed

description can be found in [3]. Each dataset contains

the 27 most populated folds represented by seven or

more proteins and corresponding to four major struc-

tural classes. Table 1 shows the protein distribution

among these classes.

Six features were extracted from protein sequences:

amino acid composition (

C

), predicted secondary struc-

ture (

S

), hydrophobicity (

H

), normalized van der Waals

volume (

V

), polarity (

P

), and polarizability (

Z

). All

(except the first) features have dimensionality 21,

whereas the composition is of dimensionality 20. Thus,

in total, a feature vector combining six features has

125 dimensions. In addition, the protein length is

reported for each protein fold. When it is included, the

dimensionality of the composition rises to 21, while,

for other features, it becomes 22. As a result, in total, a

feature vector has a length of 131.

Dataset 1 consists of 313 protein folds having (for

each two proteins) no more than 35% of the sequence

identity for aligned subsequences longer than 80 resi-

APPLIED

PROBLEMS

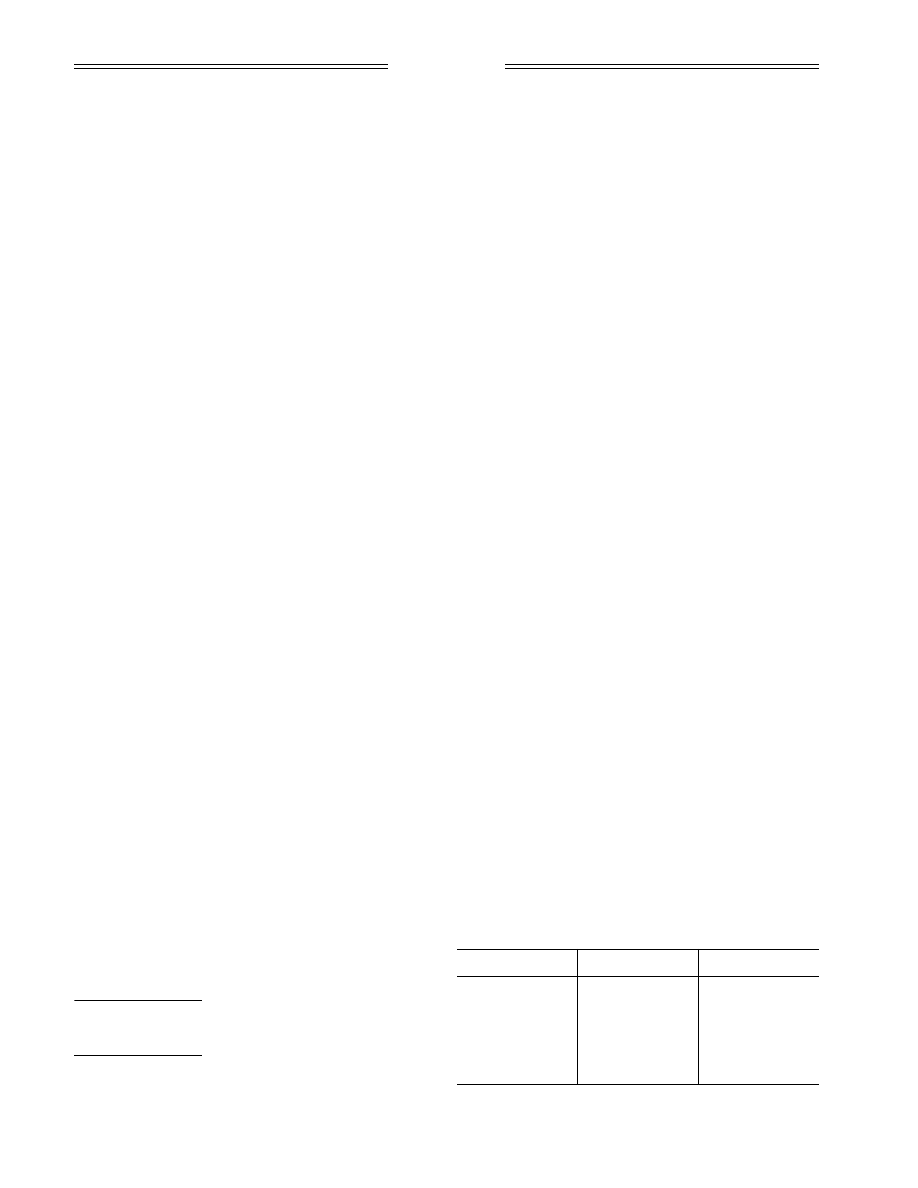

Table 1.

Distribution of the proteins among structural

classes between two datasets

Structural class

Dataset 1

Dataset 2

α

55

61

β

109

117

α

/

β

115

145

α

+

β

34

62

Total

313

385

20

PATTERN RECOGNITION AND IMAGE ANALYSIS

Vol. 16

No. 1

2006

OKUN

dues. Dataset 2 is composed of 385 protein folds having

less than 40% identity with each other and less than

35% identity with the proteins of Dataset 1.

PREVIOUS WORK

All approaches briefly analyzed below use the

datasets described in the previous section. Unless oth-

erwise stated, a 125-dimensional feature vector is

assumed for each protein fold. Since 27 protein folds

form four structural classes, a two-level recognition can

be done. At level 1, the protein to be recognized is

assigned to one of the four classes, while at level 2, it is

classified as one of the 27 folds. Recognition can

include either level or both levels. In the latter case,

classification can be hierarchical, i.e., classifiers at level

2 employ the outputs of level 1, which means that they

are not trained to predict all folds, but only those

belonging to a certain structural class.

RECOGNITION OF STRUCTURAL CLASSES

OF PROTEINS

Shi and Suganthan [7] applied feature selection and

scaling, based on mutual information, prior to KNN

and SVM. Their results indicate that feature selection is

beneficial for both methods, while feature scaling (nor-

malization) boosts the performance of KNN but is

rather useless for SVM.

RECOGNITION OF PROTEIN FOLDS

Ding and Dubchak [3] employed ensembles of both

three-layer feed-forward neural networks (trained with

the conjugate gradient method) and SVMs (one-versus-

all, unique one-versus-all, and one-versus-one methods

for building multiclass SVMs). Each ensemble con-

sisted of many two-class classifiers. The one-versus-

one method led to the best accuracy.

Bologna and Appel [1] used a 131-dimensional fea-

ture vector and an ensemble of four-layer discretized

interpretable multilayer perceptrons (DIMLPs),

where each network learns all protein folds simulta-

neously. Bagging and arcing combined the outputs of

the DIMLPs.

RECOGNITION

OF BOTH CLASSES AND FOLDS

Chung

et al.

[2] selected neural networks and SVMs

as the basic building blocks of the two-level classifica-

tion. In contrary to [3], each neural network or SVM is

a multiclass classifier, so that the number of classifiers

is greatly reduced. Common neural models with a sin-

gle hidden layer were used: MLP, a radial basis func-

tion network (RBFN), and a general regression neural

network (GRNN).

Huang

et al.

[4] exploited a similar approach by uti-

lizing gated neural networks. Gating is used for online

feature selection in order to reduce the number of fea-

tures fed to a classifier. First, the original data are used

to train the gating network. At the end of the training,

the gate function values for each feature indicate

whether a particular feature is relevant by comparing

them to a threshold. Only the relevant features are then

used to train a two-level classifier, the neural networks

of which at each level are trained independently of each

other.

Pal and Chakraborty [6] performed independent

classification at each level, i.e., they did not utilize a

hierarchical classifier. They trained the MLPs and

RBFNs with new features (400 in number) based on the

hydrophobicity of the amino acids.

HKNN

HKNN aims at competing with SVM by modifying

the standard KNN. Unlike SVM, which builds a (non-

linear) decision surface, separating different classes of

the data, in a high-dimensional (often infinite) feature

space, HKNN tries to find this surface directly in input

space. If one thinks of each class as a low-dimensional

manifold embedded in a high-dimensional space, it is

quite reasonable to assume that this manifold is locally

linear. Since the data are usually sparse, they leave

“holes” in the manifold. These “holes” introduce arti-

facts in the decision surface generated by the conven-

tional KNN, thus negatively affecting the generaliza-

tion ability of this method. To remedy this deficiency,

the idea of HKNN is to project the missing points based

on a local linear approximation of the manifold of each

class.

To reach its objective, HKNN computes the dis-

tances of each test point

x

to

L

local hyperplanes, where

L

is the number of different classes. The

l

th hyperplane

is composed of

K

nearest-neighbors of

x

in the training

set, belonging to the

l

th class. A test point

x

is associ-

ated with the class whose hyperplane is closest to

x

. To

determine the distance of

x

to hyperplane, a small linear

system of equations has to be solved (see [8] for

details).

HKNN has two parameters,

K

and

λ

(regulariza-

tion), to be pretuned.

EXPERIMENTS

In experiments, classifiers use Dataset 1 for training

and Dataset 2 (independent test set) for testing. We

scale the values of each dimension in the feature vec-

tors to have zero mean and unit variance according to

the suggestion about the normalization given in [7].

We start from level 1 of recognition, i.e., recognition

of the four structural classes. Table 2 represents the

results obtained with different methods. The 125-

dimensional feature vector associated with each protein

was used as input to classifiers. For HKNN, the optimal

values for

K

and

λ

were 7 and 8, respectively. It turned

PATTERN RECOGNITION AND IMAGE ANALYSIS

Vol. 16

No. 1

2006

K

-LOCAL HYPERPLANE DISTANCE NEAREST-NEIGHBOR ALGORITHM

21

out that HKNN outperformed all other methods. At the

same time, unlike the neural models, it did not need to

tune many parameters.

It was remarked in [3] that it is quite easy to achieve

an accuracy over 70% at level 1 by using simple fea-

tures, and all the classifiers reached this objective.

However, recognizing 27 folds is a more formidable

task. First, we provide (in Table 3) the results for single-

stage (nonhierarchical) classifiers. Again, the

125-dimensional feature vector was associated with

each protein fold, except DIMLPs [1] using the 131-

dimensional vector. HKNN used both 125- and 131-

dimensional vectors, and the accuracy was the same in

both cases as given in Table 3. For the RBFN in [6],

400-dimensional vectors were used.

Two rows for SVM/RBFN in Table 3 correspond to

results borrowed from different papers. In each case,

particular details of SVM (kernel and its parameters)

are not reported, except the fact that an ensemble of

one-versus-one SVMs was used in [3]. Parameters of

HKNN remained the same as above. In this test, the

maximal possible value for

K

is 7, since many folds

have only seven representatives in Dataset 1. It is seen

that HKNN again outperformed almost all competitors,

except DIMLP-B (bagged DIMLP) and DIMLP-A

(arced DIMLP). In the latter case, the ensemble of NNs

led to a higher accuracy. In contrast, the results

achieved with HKNN were obtained with a single clas-

sifier. When a single DIMLP was used in [1], the accu-

racy was only 38.2%. In addition, DIMLPs required

many parameters to be tuned, while HKNN, in fact, has

only one parameter,

λ

, since another parameter,

K

, can

be always set to min(

N

i

),

i

= 1, …,

L

, where min is the

minimum function,

N

i

is the number of representatives

of the

i

th fold in the training set, and

L

is the total num-

ber of folds.

Next, we gather together results of hierarchical

(two-level) classification in Table 4, where the final

accuracy is only reported using the 125-dimensional

feature vectors.

Comparing these results with 57.4% for HKNN, one

can observe that the hierarchical scheme was neverthe-

less unable to outperform a single classifier.

CONCLUSIONS

In this paper, we investigated the

K

-local hyperplane

distance nearest-neighbor algorithm applied for pro-

tein-fold recognition and tested it on a real-world

dataset from [3]. The obtained results are very encour-

aging, since, like the conventional KNN, HKNN does

not need training, and unlike the neural models and

even SVMs, it has fewer adjustable parameters, which,

in our case, were reduced just to one (

λ

). Being a sin-

gle-stage classifier, HKNN demonstrated high accuracy

compared to

ensembles

of SVMs and neural networks.

REFERENCES

1. G. Bologna and R. D. Appel, “A Comparison Study on

Protein Fold Recognition,”

Proceedings of the 9th Inter-

national Conference on Neural Information Processing,

Singapore, 2002

, pp. 2492–2496.

2. I.-F. Chung, C.-D. Huang, Y.-H. Shen, and C.-T. Lin,

“Recognition of Structure Classification of Protein Fold-

ing by NN and SVM Hierarchical Learning Architec-

ture,” in

Lecture Notes in Computer Science (Artificial

Neural Networks and Neural Information Processing—

ICANN/ICONIP 2003, Istanbul, Turkey)

, Ed. by

O. Kaynak, E. Alpaydin, E. Oja, and L. Xu (Springer,

Berlin, 2003), Vol. 2714, pp. 1159–1167.

3. C. H. Q. Ding and I. Dubchak, “Multiclass Protein Fold

Recognition Using Support Vector Machines and Neural

Networks,” Bioinformatics

17

(4), 349–358 (2001).

4. C.-D. Huang, I.-F. Chung, N. R. Pal, and C.-T. Lin,

“Machine Learning for Multiclass Protein Fold Classifi-

cation Based on Neural Networks with Feature Gating,”

in

Lecture Notes in Computer Science (Artificial Neural

Networks and Neural Information Processing—

ICANN/ICONIP 2003, Istanbul, Turkey),

Ed. by

O. Kaynak, E. Alpaydin, E. Oja, and L. Xu (Springer,

Berlin, 2003), Vol. 2714, pp. 1168–1175.

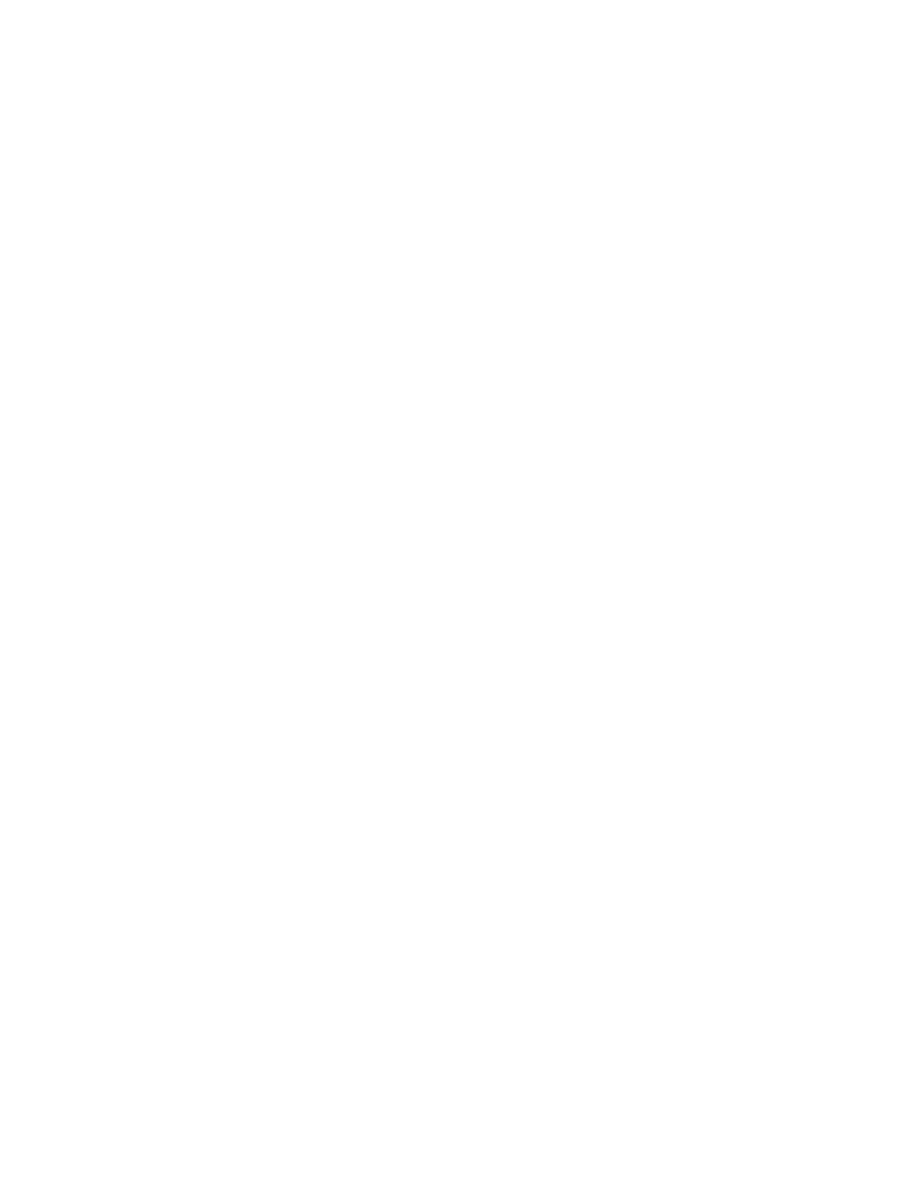

Table 2.

Best accuracy (in percent) for level 1 of protein rec-

ognition

[7]

[4]

[6]

our

SVM

KNN

gated

RBFN

MLP

RBFN

HKNN

76.9

71.4

81.6

80.5

81.8

82.6

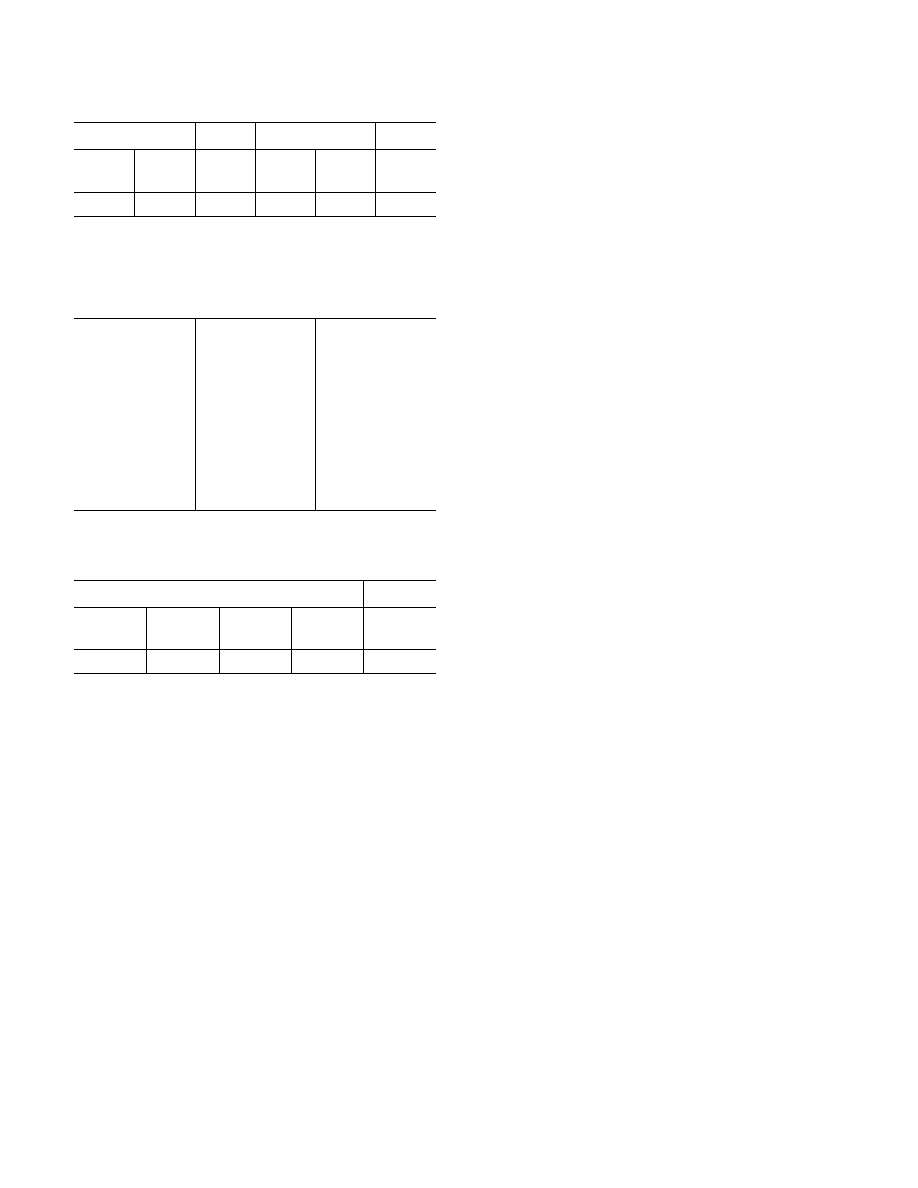

Table 3.

Accuracy (in percent) for level 2 of protein recog-

nition when a single-stage (nonhierarchical) classifier was

utilized to label an unknown protein fold as belonging to one

of the 27 folds

[1]

DIMLP-B

61.2

DIMLP-A

59.1

[2]

MLP

48.8

GRNN

44.2

RBFN

49.4

SVM

51.4

[6]

RBFN

51.2

[3]

SVM

53.9

This work

HKNN

57.4

Table 4.

Accuracy (in percent) for hierarchical protein rec-

ognition

[2]

[4]

MLP

RBFN

GRNN

SVM

gated

RBFN

44.7

56.4

45.2

53.8

56.4

22

PATTERN RECOGNITION AND IMAGE ANALYSIS

Vol. 16

No. 1

2006

OKUN

5. L. Lo Conte, B. Ailey, T. J. P. Hubbard,

et al.

, “SCOP: a

Structural Classification of Proteins Database,” Nucleic

Acids Res.

28

(1), 257–259 (2000).

6. N. R. Pal and D. Chakraborty, “Some New Features for

Protein Fold Recognition,” in

Lecture Notes in Computer

Science (Artificial Neural Networks and Neural Infor-

mation Processing—ICANN/ICONIP 2003, Istanbul,

Turkey)

, Ed. by O. Kaynak, E. Alpaydin, E. Oja, and

L. Xu (Springer, Berlin, 2003), Vol. 2714, pp. 1176–

1183.

7. S. Y. M. Shi and P. N. Suganthan, “Feature Analysis and

Classification of Protein Secondary Structure Data,” in

Lecture Notes in Computer Science (Artificial Neural

Networks and Neural Information Processing—

ICANN/ICONIP 2003, Istanbul, Turkey)

, Ed. by

O. Kaynak, E. Alpaydin, E. Oja, and L. Xu (Springer,

Berlin, 2003), Vol. 2714, pp. 1151–1158.

8. P. Vincent and Y. Bengio, “

K

-Local Hyperplane and

Convex Distance Nearest-Neighbor Algorithms,” in

Advances in Neural Information Processing Systems

,

Ed. by T. G. Dietterich, S. Becker, and Z. Ghahramani

(MIT, Cambridge, MA, 2002), Vol. 14, pp. 985–992.

Wyszukiwarka

Podobne podstrony:

art 10 1007 s00482 013 1385 z

535 0a56c Art 10 orto 04 08 czamara

art 10 1007 s11096 013 9846 0

Nielaty, ART 10 UPN, Postanowienie z dnia 25 listopada 2010 r

art 10 1007 s11427 012 4407 7

art 10 1617 s11527 006 9205 x

art 10 1007 s00044 011 9581 9 i Nieznany (2)

art. 10 konkordatu, lokal

art 10 1007 BF02980046 id 69338 Nieznany (2)

Wykład, art 10, Wizje końca stulecia

art 10 1007 BF02853186 id 69336 Nieznany

Prawo wekslowe, ART 10 PR. WEKSL, 1970

kk, ART 10 KK, Wyrok z dnia 29 września 2009 r

art 10 1007 s00482 013 1385 z

535 0a56c Art 10 orto 04 08 czamara

Prawo upadłościowe Art 10 Komentarz 1

Prawo upadłościowe Art 10 Komentarz 2

więcej podobnych podstron